Tetra File-Log Agent

The Tetra File-Log Agent is a high-speed, instrument-agnostic agent that detects changes in the file-based outputs generated by instruments. It requires either a Tetra Generic Data Connector (GDC) installed on a Data Hub or a Cloud Data Connector (CDC) for file uploads.

Tetra File-Log Agent Features

NOTE: The File-Log Agent LogWatcher service was removed in version 4.1.0 of the software.

These are the main features of the Tetra File-Log Agent:

- Supports two services, the LogWatcher service and the FileWatcher service, which upload new content incrementally or the entire file/folder, respectively.

- Monitors the outputs from multiple folder paths

- Monitors local and network drives

- Supports glob patterns to select folders or files

- Applies Least Privilege Access using a Service Account without Local Logon permission

- Customizes time intervals to detect the changes

- Specifies the Start Date to select the files/folders

- Supports large file (up to 5 TB) upload using the S3 Direct Upload option (feature for v3.0.0 and later)

- Runs automatically in the background without user interaction

- Auto-starts when the host machine is started

- Can auto-restart up to three times if it crashes

- Provides file processing summary

- Provides a full operational audit trail

Tetra File-Log Agent Requirements

These are the Tetra File-Log Agent requirements:

- Windows 7 Service Pack 1 (SP1) or later, or Windows Server 2008 R2 SP1 or later, with TLS 1.2 enabled in either case

- The server should have at least 8 GB of RAM, though 16 GB is recommended.

- The CPU should be at minimum an Intel Xeon 2.5 GHz (or equivalent).

- The Agent copies the source file to the Group User temp folder before uploading it to TDP. The available space in the temp folder should be larger than the largest file being uploaded, so that the file can be retained there temporarily.

- .NET Framework 4.8 (Download Link).

- An Agent has been created from a Generic Data Connector (GDC) or Cloud Data Connector (CDC) on the Tetra Data Platform (TDP). For details about which connection to select, and how to create a connector, see this page.

- The Windows server hosting the Agent requires network access according to its connection mode (chosen in step 6):

  - When using a CDC, HTTP(S) access to the TetraScience cloud API - for example, https://api.your-infrastructure-name.tetrascience.com/v1/uda.

  - When using a GDC, HTTP(S) traffic to the DataHub port selected when configuring the GDC - for example, https://192.168.1.1:8443/generic-connector/v1/agent.

- To support S3 Direct Upload or Receive Commands, the Agent requires HTTP(S) access to the AWS endpoints listed in 'Endpoints used by agents' on this page.

  - When using a CDC, this access must be direct.

  - When using a GDC, this access can be provided by installing an L7 Proxy connector in the same DataHub as the GDC. In this case the Agent also requires access to the port selected when configuring the L7 Proxy - for example, https://192.168.1.1:3129.

- The Windows server hosting the Agent has network access (SMB over port 445, TCP and UDP) to any computers whose shared folders need to be monitored, and the Group User Account has the necessary access to those folders (see below for details).

Known Limitations

Characters in File Path

- The File-Log Agent does not support case-sensitive directory or file names. All paths are converted to lower case when they are stored in the Tetra Data Platform.

- The File-Log Agent only supports ASCII characters in directory and file names.

- The File-Log Agent does not support a colon “:” or backslash “\” in directory or file names.
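The character restrictions above can be checked before a path is added to the Agent. The following is a minimal sketch, not part of the Agent: the function names `check_name` and `case_collisions` are hypothetical, and the checks only mirror the three limitations listed here.

```python
from collections import defaultdict


def check_name(name):
    """Return the reasons a single directory or file name would be unsupported."""
    problems = []
    if not name.isascii():
        problems.append("non-ASCII character")
    if ":" in name or "\\" in name:
        problems.append("colon or backslash")
    return problems


def case_collisions(paths):
    """Group paths that become identical once converted to lower case."""
    groups = defaultdict(list)
    for path in paths:
        groups[path.lower()].append(path)
    return {key: members for key, members in groups.items() if len(members) > 1}
```

For example, `check_name("a:b.txt")` reports the colon, and `case_collisions(["Data/Run.log", "data/run.log"])` shows that both paths would be stored under the same lower-case path.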

File Systems and Protocols

- The File-Log Agent can access any local file system, and any file system reachable through a supported Windows file share protocol (SMB/CIFS, NFS, Windows DFS), as long as the directories and files do not violate the character restrictions above.

- Depending on the protocol and file system used, file system events may not be supported and the File-Log Agent will rely on polling to detect changes.

- Mapped network drives are not supported.

File Size

- When using the Generic Data Connector or Cloud Data Connector without S3 Direct Upload, the maximum file size which may be uploaded is 500 MB.

- When using S3 Direct Upload, the maximum file size which may be uploaded is 5 TB.
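The two size limits above can be expressed as a simple pre-flight check. This is an illustrative sketch only: the names are hypothetical, and it assumes decimal units (1 MB = 1,000,000 bytes), which this page does not specify.

```python
MB = 1000 ** 2  # assumption: decimal megabytes
TB = 1000 ** 4  # assumption: decimal terabytes

MAX_CONNECTOR_UPLOAD = 500 * MB  # GDC or CDC without S3 Direct Upload
MAX_S3_DIRECT_UPLOAD = 5 * TB    # with S3 Direct Upload


def can_upload(size_bytes, s3_direct_upload):
    """Return True if a file of this size is within the documented limit."""
    limit = MAX_S3_DIRECT_UPLOAD if s3_direct_upload else MAX_CONNECTOR_UPLOAD
    return size_bytes <= limit
```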

Additional Notes

- The Tetra File-Log Agent does not support a file path exceeding the default maximum length, which is 260 characters. This is a Windows OS limitation. To enable long paths in the host Windows OS, please refer to the FAQ: How to enable long path for Windows

- The Tetra File-Log Agent is optimized for the latest Windows OS. It supports earlier Windows versions as long as the requirements above are met, but it will not perform optimally on them.

- The Tetra File-Log Agent scanning speed depends on the number of files and folders it scans. When the Agent scans a network drive, the network speed also affects the scanning speed.

- If the network share is non-Windows based, or the network redirector is non-Windows based, the Tetra File-Log Agent scanning speed is also impacted.

- If the files to upload are approximately 500 MB or larger, you must add an L7 proxy connector to the Data Hub.

- By default, a Generic Data Connector is provisioned to have 500 MB of memory. If your file is close to or larger than 500 MB, then the Tetra File-Log Agent may not be able to upload it. While you can adjust the memory allocation for GDC, TetraScience recommends installing the L7 proxy connector.

- If your file system has an additional permissions system, please discuss with TetraScience before installing the Tetra File-Log Agent so we can assist you with agent configuration.

Log Watcher Service

The Log Watcher Service detects and extracts new content from the files monitored by the File-Log Agent. The Agent selects the files or folders to monitor using the paths, the associated patterns (glob patterns), and the Start Date defined in the Windows Management Console. The Start Date is used to exclude files from before that date.

The Log Watcher service periodically checks for file content changes at the time interval defined in the Log Watcher section of the Windows Management Console UI. The change detection logic is based on the following two criteria:

If either of those file attributes has changed, the Agent outputs the new content, starting after the last line it output in the previous interval.

By default, the maximum length of an output file is 5000 lines. A System Administrator can change this setting (Max Batch Size) in the LogWatcher Path Editor window in the Management Console. If the new content totals more than 5000 lines, the Agent splits the output into multiple smaller files with at most 5000 lines each.

The naming convention of the output files is as follows:

original_file_name_last_line_number_timestamp.file_extension

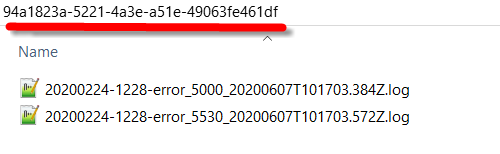

For example, suppose the original file name is 20200224-1228-error.log, the file contains 5530 data lines, and the Max Batch Size is 5000. The first time the file is output, the Agent generates two files. Note that the timestamp is the current time when each output file is created.

20200224-1228-error_5000_20200607T101703.384Z.log

20200224-1228-error_5530_20200607T101703.572Z.log
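The batching and naming rules above can be sketched in a few lines of Python. This is an illustration, not the Agent's implementation: the function `output_names` and its parameters are hypothetical, and one timestamp is reused for every file in a pass for simplicity, whereas the example above shows each file stamped with its own creation time.

```python
from datetime import datetime, timezone
from pathlib import PurePath


def output_names(source_name, first_new_line, last_line, max_batch_size=5000, now=None):
    """Yield (output_file_name, start_line, end_line) for each batch of new lines.

    Line numbers are 1-based. first_new_line is the first line that has not
    been output yet; last_line is the current last line of the source file.
    """
    now = now or datetime.now(timezone.utc)
    # Timestamp with millisecond precision, e.g. 20200607T101703.384Z
    stamp = now.strftime("%Y%m%dT%H%M%S.") + f"{now.microsecond // 1000:03d}Z"
    path = PurePath(source_name)
    start = first_new_line
    while start <= last_line:
        end = min(start + max_batch_size - 1, last_line)
        yield f"{path.stem}_{end}_{stamp}{path.suffix}", start, end
        start = end + 1
```

Calling `output_names("20200224-1228-error.log", 1, 5530)` yields two names ending in `_5000_...` and `_5530_...`, matching the example. A later call with `first_new_line=5531` and `last_line=5580` yields a single name ending in `_5580_...`.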

The output files are saved in the LogWatcher Output Folder, defined in the Log Watcher section of the Windows Management Console. To avoid file name collisions, the output files that come from the same source file are grouped in a subfolder whose name is a UUID.

Output files

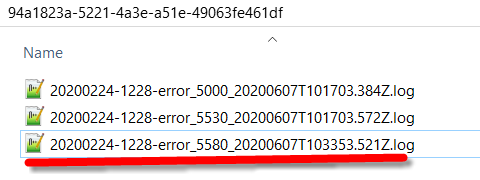

If the instrument appends 50 more data lines to that data file, the Agent generates an additional file named 20200224-1228-error_5580_20200607T103353.521Z.log. This output file is saved to the same subfolder as the files generated earlier. The content of the output folder then looks like this:

Additional output file

The Agent periodically attempts to upload the output files to the Tetra Data Platform until the upload succeeds.

Once the output files have been uploaded successfully to the Tetra Data Platform, they are moved to the archive folder (LogWatcher Archive Folder). If an upload fails, the Agent retries it in the next time interval.
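The upload, archive, and retry cycle described above might look like the following sketch. It is an assumption-laden illustration: `upload_pending` is a hypothetical name, and `upload` stands in for whatever call sends a file to the platform (here, any callable that takes a path and returns True on success).

```python
import shutil
from pathlib import Path


def upload_pending(output_dir, archive_dir, upload):
    """Try each pending output file once; archive successes, return failures.

    The caller would invoke this once per time interval, so files returned
    here are retried on the next pass.
    """
    output_dir, archive_dir = Path(output_dir), Path(archive_dir)
    still_pending = []
    # Snapshot the file list first, because successful files are moved away.
    for path in sorted(p for p in output_dir.rglob("*") if p.is_file()):
        try:
            ok = upload(path)
        except OSError:
            ok = False  # treat I/O and network errors as a failed attempt
        if ok:
            # Keep the per-source subfolder layout inside the archive folder.
            target = archive_dir / path.relative_to(output_dir)
            target.parent.mkdir(parents=True, exist_ok=True)
            shutil.move(str(path), str(target))
        else:
            still_pending.append(path)
    return still_pending
```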

File Watcher Service

Unlike the LogWatcher service, which uploads only new content, the FileWatcher service uploads the entire file or folder to the Tetra Data Platform. If a file has changed multiple times, the platform may contain multiple versions of the same file.

The Agent selects the files or folders to monitor using the paths, the associated patterns (glob patterns), and the Start Date defined in the File Watcher section of the Windows Management Console. The Start Date is used to exclude files from before that date.
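To illustrate how a path, a glob pattern, and a Start Date combine to select files, here is a minimal sketch using Python's `pathlib`. It rests on two assumptions that this page does not confirm: that the Start Date is compared against a file's last modified time, and that the Agent's glob dialect behaves like Python's. The function name `select_files` is hypothetical.

```python
from datetime import datetime
from pathlib import Path


def select_files(root, pattern, start_date):
    """Return files under root that match pattern and were modified on or after start_date."""
    cutoff = start_date.timestamp()
    return sorted(
        p for p in Path(root).glob(pattern)
        if p.is_file() and p.stat().st_mtime >= cutoff
    )
```

For instance, `select_files(r"D:\instrument", "**/*.csv", datetime(2020, 1, 1))` would return every CSV file in the tree that was modified in 2020 or later; the path here is only an example.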

There are two modes, File Mode and Folder Mode, in the File Watcher Service. The logic of detecting the changes is slightly different between these two modes.

File Mode

This mode detects changes to an individual file. The File Watcher Service periodically checks the following file metadata and stores the result in the local SQLite database:

If either of them has changed, the Agent marks that file as changed.

Folder Mode

This mode detects changes to any of the files in a folder, including its subfolders. If any file in the folder has changed, the Agent uploads the entire folder as a compressed file. The Agent periodically checks and stores the following attributes for that folder:

- Total number of files in the folder

- Total size of the files in the folder

- The Last Write Time of the most recently modified file in the folder

If any of these attributes has changed, the Agent marks that folder as changed.
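The three folder attributes listed above can be collected and compared as follows. This is a sketch under stated assumptions, not the Agent's code: `FolderState`, `folder_state`, and `has_changed` are hypothetical names, and treating a folder with no stored state as changed is an assumption.

```python
import os
from typing import NamedTuple


class FolderState(NamedTuple):
    file_count: int           # total number of files, including subfolders
    total_size: int           # total size of those files in bytes
    latest_write_time: float  # newest last write time among the files


def folder_state(folder):
    """Walk the folder tree and compute the three attributes."""
    count, size, latest = 0, 0, 0.0
    for dirpath, _dirnames, filenames in os.walk(folder):
        for name in filenames:
            st = os.stat(os.path.join(dirpath, name))
            count += 1
            size += st.st_size
            latest = max(latest, st.st_mtime)
    return FolderState(count, size, latest)


def has_changed(previous, current):
    """A folder counts as changed if any attribute differs from the stored state."""
    return previous is None or previous != current
```

On each interval the caller would compute `folder_state(path)`, compare it with the state saved from the previous interval, and persist the new state.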

The path of the changed file or the folder is put into a processing queue. The items in the queue will be processed sequentially.

For each file or folder it monitors, the Agent checks the attributes at every time interval, compares them with the values from the previous interval, and determines whether the item has changed and should be uploaded to the Tetra Data Platform.

When the Agent decides that the file or folder is ready to be uploaded, it copies the file or folder to the Windows temp folder. In Folder Mode, the folder is compressed into a zip file.
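The staging step can be sketched with Python's standard library. This is illustrative only: `stage_for_upload` and `source_size` are hypothetical names, and the sketch copies the source rather than moving it, in line with the requirement above that the Agent copies the source file to the temp folder and that the temp folder has more free space than the file being uploaded.

```python
import shutil
import tempfile
from pathlib import Path


def source_size(source):
    """Total size in bytes of a file, or of all files under a folder."""
    if source.is_file():
        return source.stat().st_size
    return sum(p.stat().st_size for p in source.rglob("*") if p.is_file())


def stage_for_upload(source, temp_root=None):
    """Copy a file, or zip a folder, into a fresh temp directory and return the staged path."""
    source = Path(source)
    staging = Path(tempfile.mkdtemp(dir=temp_root))
    if shutil.disk_usage(staging).free <= source_size(source):
        raise OSError(f"not enough free space in {staging} to stage {source}")
    if source.is_dir():
        # Folder Mode: compress the whole folder, including subfolders.
        archive = shutil.make_archive(str(staging / source.name), "zip", root_dir=str(source))
        return Path(archive)
    # File Mode: copy the file, preserving its metadata.
    return Path(shutil.copy2(source, staging / source.name))
```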

The Agent keeps trying to upload the output files in the temp folder to the Tetra Data Platform until the upload succeeds. Once it succeeds, the files are removed from the temp folder. The file upload time interval can be specified in the Advanced Settings of the Agent Configuration section.
