Tetra Amazon S3 Connector v1.x Operational Guide

The following procedure shows how to install and operate a Tetra Amazon S3 Connector v1.x. The Tetra Amazon S3 Connector is a standalone, containerized application that automatically uploads files (objects) from your organization's Amazon Simple Storage Service (Amazon S3) buckets to the Tetra Data Platform (TDP) whenever an object is created.

Install a Tetra Amazon S3 Connector

To install a Tetra Amazon S3 Connector, do the following.

NOTE: The following procedure provides Python libraries and code as examples that you can build on.

Prerequisites

Before you can create and use a Tetra Amazon S3 Connector, you must have the following:

- An active TDP environment

- Knowledge of Python

- An active AWS account

- AWS Command Line Interface (AWS CLI) installed and configured

- An Amazon S3 bucket

- An Amazon Simple Queue Service (Amazon SQS) queue

- An Amazon Simple Notification Service (Amazon SNS) topic with an Access Policy that grants `SNS:Publish` permissions to the S3 bucket

- An AWS Identity and Access Management (IAM) role that the Connector can assume (or another IAM permissions method), which grants the following permissions:

  Amazon S3 Bucket Permissions

  - `s3:GetObject`
  - `s3:GetBucketLocation`
  - `s3:ListBucket`
  - `s3:GetObjectVersion`
  - `s3:GetObjectAttributes`

  Amazon SQS Queue Permissions

  - `sqs:DeleteMessage`
  - `sqs:GetQueueUrl`
  - `sqs:ReceiveMessage`
  - `sqs:GetQueueAttributes`

NOTE: These permissions work with Amazon S3 buckets that use the default encryption setting, server-side encryption with Amazon S3 managed keys (SSE-S3). If your bucket uses server-side encryption with AWS Key Management Service (AWS KMS) keys, you must also add the required AWS KMS key permissions (such as `kms:Decrypt`) to the IAM policy. For more information, see Using IAM policies with AWS KMS in the AWS documentation.
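The following Python sketch shows one way to express the permissions listed above as a custom IAM policy. It makes several assumptions. `build_connector_policy` is a hypothetical helper, and the bucket name and queue ARN are placeholders. The optional `kms:Decrypt` statement is an assumption about what an SSE-KMS bucket needs, so also check the key policy for your KMS key. Bucket-level actions (`s3:ListBucket`, `s3:GetBucketLocation`) are granted on the bucket ARN. Object-level actions are granted on the objects in the bucket (`arn:aws:s3:::<bucket>/*`).

```python
import json


def build_connector_policy(bucket_name, queue_arn, kms_key_arn=None):
    """Build an IAM permissions policy for the Connector (hypothetical helper)."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    statements = [
        {
            # Bucket-level actions are granted on the bucket ARN itself.
            "Sid": "ReadSourceBucket",
            "Effect": "Allow",
            "Action": ["s3:GetBucketLocation", "s3:ListBucket"],
            "Resource": bucket_arn,
        },
        {
            # Object-level actions are granted on the objects in the bucket.
            "Sid": "ReadSourceObjects",
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:GetObjectVersion", "s3:GetObjectAttributes"],
            "Resource": f"{bucket_arn}/*",
        },
        {
            "Sid": "ConsumeQueue",
            "Effect": "Allow",
            "Action": [
                "sqs:DeleteMessage",
                "sqs:GetQueueUrl",
                "sqs:ReceiveMessage",
                "sqs:GetQueueAttributes",
            ],
            "Resource": queue_arn,
        },
    ]
    if kms_key_arn:
        # Assumption: only needed when the bucket uses SSE-KMS.
        statements.append(
            {"Sid": "DecryptObjects", "Effect": "Allow", "Action": "kms:Decrypt", "Resource": kms_key_arn}
        )
    return {"Version": "2012-10-17", "Statement": statements}


if __name__ == "__main__":
    # Placeholder values; replace them with your own bucket name and queue ARN.
    policy = build_connector_policy(
        "my-source-bucket", "arn:aws:sqs:us-east-1:111122223333:my-connector-queue"
    )
    print(json.dumps(policy, indent=2))
```

You could attach the printed JSON to the Connector's role as an inline or customer managed policy.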

Configure the Required AWS Resources and Permissions

Create an Amazon S3 Bucket

Create an Amazon S3 bucket for object storage by following the instructions in Creating a bucket in the Amazon S3 User Guide. Choose a unique bucket name and note it, because you'll need it to set up the required IAM permissions. You don't need to change the bucket's other default values.
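You can also script this step. The following minimal sketch calls boto3's `create_bucket`. It assumes you pass in an S3 client that's already configured with credentials, and `create_source_bucket` is a hypothetical helper name. Outside `us-east-1`, the CreateBucket API expects a `LocationConstraint` that matches the Region, so the helper adds it only when needed.

```python
def create_source_bucket(s3_client, bucket_name, region):
    """Create an S3 bucket with default settings (hypothetical helper).

    s3_client is assumed to be a boto3 S3 client, for example:
        import boto3
        s3_client = boto3.client("s3", region_name="us-west-2")
    """
    params = {"Bucket": bucket_name}
    # us-east-1 is the default location; every other Region must be named explicitly.
    if region != "us-east-1":
        params["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return s3_client.create_bucket(**params)
```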

Create an Amazon SNS Topic

Create an Amazon SNS topic to deliver S3 events to your Amazon SQS queue by doing the following:

- Create an Amazon SNS topic by following the instructions in Creating an Amazon SNS topic in the Amazon SNS Developer Guide. You must configure the Type (Standard is recommended), Name, and Access Policy. You don't need to change the topic's other default values.

- Update the SNS topic's Access policy so that it grants `SNS:Publish` permissions to the S3 bucket by adding the following statement to the `Statement` list in the JSON policy:

NOTE: Values for `aws-region` and `aws-account` are listed in the equivalent fields within the Access Policy's default `Statement` list.

{
  "Sid": "Policy for S3 event notification",
  "Effect": "Allow",
  "Principal": {
    "Service": "s3.amazonaws.com"
  },
  "Action": "SNS:Publish",
  "Resource": "arn:aws:sns:<aws-region>:<aws-account>:<this-sns-topic-name>",
  "Condition": {
    "StringEquals": {
      "aws:SourceAccount": "<aws-account>"
    },
    "ArnLike": {
      "aws:SourceArn": "arn:aws:s3:*:*:<target-s3-bucket-name>"
    }
  }
}

IMPORTANT: To use an encrypted topic, you must grant Amazon S3 additional permissions to use the AWS Key Management Service (AWS KMS) key that encrypts the topic. Otherwise, S3 can't publish to the topic. For more information, see Why isn’t my Amazon SNS topic receiving Amazon S3 event notifications? in AWS re:Post.

- (Optional) To set up email notifications for when new objects are uploaded to the S3 bucket, follow the instructions in Email notifications in the Amazon SNS Developer Guide.
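You can also script the access policy update instead of editing it in the console. The following sketch reads the topic's current policy with the SNS `get_topic_attributes` API, appends the statement shown above, and writes the result back with `set_topic_attributes`. The helper names are hypothetical, `sns_client` is assumed to be a boto3 SNS client, and the sketch doesn't cover encrypted topics.

```python
import json

SID = "Policy for S3 event notification"


def add_s3_publish_statement(policy, topic_arn, account_id, bucket_name):
    """Return a copy of an SNS access policy with the S3 event-notification statement added."""
    statement = {
        "Sid": SID,
        "Effect": "Allow",
        "Principal": {"Service": "s3.amazonaws.com"},
        "Action": "SNS:Publish",
        "Resource": topic_arn,
        "Condition": {
            "StringEquals": {"aws:SourceAccount": account_id},
            "ArnLike": {"aws:SourceArn": f"arn:aws:s3:*:*:{bucket_name}"},
        },
    }
    updated = json.loads(json.dumps(policy))  # deep copy of a JSON-compatible dict
    # Drop an earlier copy of this statement so that running the update twice is harmless.
    updated["Statement"] = [s for s in updated.get("Statement", []) if s.get("Sid") != SID]
    updated["Statement"].append(statement)
    return updated


def update_topic_policy(sns_client, topic_arn, account_id, bucket_name):
    """Read the topic's access policy, add the statement, and write the policy back."""
    attributes = sns_client.get_topic_attributes(TopicArn=topic_arn)["Attributes"]
    new_policy = add_s3_publish_statement(
        json.loads(attributes["Policy"]), topic_arn, account_id, bucket_name
    )
    sns_client.set_topic_attributes(
        TopicArn=topic_arn, AttributeName="Policy", AttributeValue=json.dumps(new_policy)
    )
    return new_policy
```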

Set Up Amazon S3 Event Notifications

Configure Amazon S3 bucket event notifications to be sent to your Amazon SNS topic by following the instructions in Enabling and configuring event notifications using the Amazon S3 console in the Amazon S3 User Guide. You will need your SNS topic name to configure the notifications.

When you configure the notifications, make sure that you do the following:

- For Event types, select All object create events.

- For Destination, select SNS topic. Then, select Choose from your SNS topics and locate your SNS topic name.

NOTE: If you receive an “Unable to validate the following destination configurations” error message, verify that the SNS topic's access policy grants `SNS:Publish` permissions to the correct S3 bucket.
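You can also set up the same notifications with boto3. `put_bucket_notification_configuration` replaces the bucket's entire notification configuration, so this sketch first reads the current configuration and merges the new topic configuration into it. The helper name and the `tdp-s3-connector` configuration ID are hypothetical. The event type `s3:ObjectCreated:*` corresponds to All object create events in the console.

```python
CONFIG_ID = "tdp-s3-connector"  # hypothetical notification configuration ID


def apply_object_created_notifications(s3_client, bucket_name, topic_arn, config_id=CONFIG_ID):
    """Send all object-create events in the bucket to an SNS topic (hypothetical helper).

    PutBucketNotificationConfiguration replaces the whole configuration, so the
    current configuration is read first and the topic configuration is merged in.
    """
    config = s3_client.get_bucket_notification_configuration(Bucket=bucket_name)
    config.pop("ResponseMetadata", None)  # boto3 response metadata, not part of the configuration
    topics = [t for t in config.get("TopicConfigurations", []) if t.get("Id") != config_id]
    topics.append(
        {
            "Id": config_id,
            "TopicArn": topic_arn,
            "Events": ["s3:ObjectCreated:*"],  # "All object create events" in the console
        }
    )
    config["TopicConfigurations"] = topics
    s3_client.put_bucket_notification_configuration(
        Bucket=bucket_name, NotificationConfiguration=config
    )
    return config
```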

Create a Standard Amazon SQS Queue

To create an Amazon SQS queue to trigger file transfers to the TDP, do the following:

- Create a Standard Amazon SQS queue by following the instructions in Creating an Amazon SQS standard queue and sending a message in the Amazon SQS Developer Guide. You'll need your SNS topic ARN so that the queue can receive messages from the topic.

NOTE: Set a reasonably long Visibility timeout (about 10 minutes) so that messages don't reappear in the queue while large files are still being processed.

- Subscribe the queue to your Amazon SNS topic by following the instructions in Subscribing a queue to an Amazon SNS topic using the Amazon SQS console.

IMPORTANT: It's recommended that you also configure a second, dead-letter queue to store messages that can't be processed, so that the source queue doesn't get overwhelmed with unprocessed messages. For instructions, see Learn how to configure a dead-letter queue using the Amazon SQS console in the Amazon SQS Developer Guide.
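If you create the queue programmatically, you set the visibility timeout and the dead-letter queue through queue attributes, and SQS expects every attribute value as a string. The following sketch builds those attributes for `sqs.create_queue`. The helper name, the default four-day retention period, and the `maxReceiveCount` of 5 are illustrative assumptions. The range checks reflect the SQS limits of 12 hours for the visibility timeout and 14 days for message retention.

```python
import json


def build_queue_attributes(visibility_timeout_s=600, retention_s=4 * 24 * 3600,
                           dead_letter_queue_arn=None, max_receive_count=5):
    """Build the Attributes mapping for SQS CreateQueue (hypothetical helper).

    Usage (requires AWS credentials and an existing dead-letter queue):
        sqs.create_queue(QueueName="my-connector-queue",
                         Attributes=build_queue_attributes(dead_letter_queue_arn=dlq_arn))
    """
    if not 0 <= visibility_timeout_s <= 43200:
        raise ValueError("VisibilityTimeout must be between 0 and 43,200 seconds (12 hours)")
    if not 60 <= retention_s <= 1209600:
        raise ValueError("MessageRetentionPeriod must be between 60 seconds and 14 days")
    attributes = {
        "VisibilityTimeout": str(visibility_timeout_s),  # about 10 minutes by default
        "MessageRetentionPeriod": str(retention_s),
    }
    if dead_letter_queue_arn:
        # After max_receive_count failed receives, SQS moves the message to the dead-letter queue.
        attributes["RedrivePolicy"] = json.dumps(
            {"deadLetterTargetArn": dead_letter_queue_arn, "maxReceiveCount": str(max_receive_count)}
        )
    return attributes
```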

Create an IAM Role for the Connector to Assume

NOTE: An IAM role is just one possible method to define the access permissions required for the Tetra Amazon S3 Connector. You can use other methods, too.

Create an IAM role for the Connector to assume that grants the required permissions by following the instructions in Creating a role using custom trust policies (console).

In the Custom Trust Policy section, either edit the JSON to include the following policy statement, or select Add a principal > IAM Role. In both cases, replace the placeholders in angle brackets with your TDP environment's information:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowTDPRoleToAssume",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::<tdp-aws-account>:role/ts-con-role-<tdp-aws-region>-<tdp-env-type>-<tdp-org-slug>"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}

IMPORTANT: The Connector must be able to read the source S3 bucket and receive and delete messages from the Amazon SQS queue. You can grant these permissions by attaching the `AmazonS3ReadOnlyAccess` and `AWSLambdaSQSQueueExecutionRole` managed policies, or by defining custom policies. For more information, see What are AWS managed policies? in the AWS Managed Policy Reference Guide.
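For a scripted setup, the following sketch fills in the trust policy placeholders and then creates the role with the IAM `create_role` and `put_role_policy` APIs. The helper names, the inline policy name, and any example values are hypothetical. `permissions_policy` can be any permissions document that grants the S3 and SQS access listed in the prerequisites. The function returns the role ARN, which is likely what the Connector's IAM Role field expects. Confirm the expected format in your TDP environment.

```python
import json


def build_trust_policy(tdp_account, tdp_region, env_type, org_slug):
    """Fill in the custom trust policy placeholders (hypothetical helper)."""
    principal = f"arn:aws:iam::{tdp_account}:role/ts-con-role-{tdp_region}-{env_type}-{org_slug}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowTDPRoleToAssume",
                "Effect": "Allow",
                "Principal": {"AWS": principal},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def create_connector_role(iam_client, role_name, trust_policy, permissions_policy):
    """Create the role, attach an inline permissions policy, and return the role ARN.

    iam_client is assumed to be a boto3 IAM client.
    """
    role = iam_client.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(trust_policy),
    )
    iam_client.put_role_policy(
        RoleName=role_name,
        PolicyName=f"{role_name}-permissions",  # hypothetical naming convention
        PolicyDocument=json.dumps(permissions_policy),
    )
    return role["Role"]["Arn"]
```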

Create a Tetra Amazon S3 Connector

To create a Tetra Amazon S3 Connector, do the following:

- Sign in to the TDP. Then, in the left navigation menu, choose Data Sources and select Connectors. The Connectors page appears.

- Follow the instructions in Create a Pluggable Connector. For CONNECTOR TYPE, make sure that you select AWS S3.

NOTE: After the Connector is created, the initial MODE status is set to IDLE by default. To activate the Connector, you must configure its settings. For instructions, see the Configure the Connector section.

Configure the Connector

On the Connectors page, select the name of the connector that you created. Then, select the Configuration tab to configure the required settings.

Configuration Parameters

The following table lists the configuration parameters that are required for the Tetra Amazon S3 Connector.

| Configuration Parameter | Data Type | Description | Behavior |
|---|---|---|---|
| SQS URL | String | URL of the Amazon SQS queue to monitor for S3 events | Required |
| Source AWS Region | String | AWS Region of the source S3 bucket, if different from the Amazon SQS queue's Region | Optional |
| Authentication Type | Authentication Settings: Access Key/Secret, Session Token, or IAM Role | Authentication type. There are two possible authorization strategies: you can provide an access key ID, a secret access key, and (optionally) a session token, or you can provide an IAM role for the Connector to assume. The Connector requires the following privileges: for the S3 bucket, the `ListBucket` and `GetObject` S3 permissions; for the SQS queue, the `GetQueueUrl`, `ReceiveMessage`, and `DeleteMessage` SQS permissions. | Required. If you select Access Key/Secret, the AWS Access Key and AWS Secret Access Key fields appear and require input. If you select Session Token, the AWS Access Key, AWS Secret Access Key, and Session Token fields appear and require input. If you select IAM Role, only the IAM Role field is required: the IAM identifier of the role that the Connector uses to access the S3 bucket. |
| AWS Access Key ID | String | AWS access key ID | Required if the auth type is Access Key/Secret or Session Token |
| AWS Secret Access Key | String | AWS secret access key | Required if the auth type is Access Key/Secret or Session Token |
| AWS Session Token | String | AWS session token | Required if the auth type is Session Token |
| IAM Role | String | An IAM role that has access to the source S3 bucket and SQS queue | Required if the auth type is IAM Role |
| Path Configurations | JSON String | A JSON array of path patterns, TDP `sourceType` values, and labels to use for TDP parsing. For an example, see the following Example Path Configuration JSON. | Optional. If no path configuration is provided, all S3 files are uploaded to the TDP without a `sourceType` or labels. Each entry supports the following keys. `path` (required): path of the files to upload; if `path` is an empty string, all files are uploaded. `sourceType` (required): source type assigned to uploaded files. `pattern` (required): pattern of the files to upload, with wildcard support: `*` matches everything except slashes, `**` recursively matches zero or more directories, and `?` matches any single character except a slash. `labels` (optional): name/value pairs added as labels to uploaded files. |
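To check a Path Configuration before you save it, the following sketch validates the required keys and turns the wildcard rules from the table above (`*`, `**`, and `?`) into regular expressions. The Connector's way of combining `path` and `pattern` isn't documented here. `entry_matches` therefore assumes that an object key must start with `path` and that `pattern` is matched against the rest of the key. All function names are hypothetical.

```python
import json
import re

REQUIRED_KEYS = ("path", "sourceType", "pattern")


def validate_path_configuration(text):
    """Parse a Path Configurations value and check that each entry has the required keys."""
    entries = json.loads(text)
    if not isinstance(entries, list):
        raise ValueError("Path Configurations must be a JSON array")
    for index, entry in enumerate(entries):
        missing = [key for key in REQUIRED_KEYS if key not in entry]
        if missing:
            raise ValueError(f"entry {index} is missing: {', '.join(missing)}")
    return entries


def pattern_to_regex(pattern):
    """Translate a pattern into a regex using the documented wildcard rules.

    *   matches everything except a slash
    **  matches zero or more directories when followed by a slash, otherwise anything
    ?   matches any single character except a slash
    """
    parts = []
    i = 0
    while i < len(pattern):
        if pattern.startswith("**/", i):
            parts.append("(?:[^/]*/)*")  # zero or more whole directories
            i += 3
        elif pattern.startswith("**", i):
            parts.append(".*")
            i += 2
        elif pattern[i] == "*":
            parts.append("[^/]*")
            i += 1
        elif pattern[i] == "?":
            parts.append("[^/]")
            i += 1
        else:
            parts.append(re.escape(pattern[i]))
            i += 1
    return re.compile("".join(parts), re.DOTALL)


def entry_matches(entry, key):
    """Assumption: the key must start with `path`, and `pattern` must match the remainder."""
    path = entry.get("path", "")
    if not key.startswith(path):
        return False
    return pattern_to_regex(entry["pattern"]).fullmatch(key[len(path):]) is not None
```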

Example Path Configuration JSON

[
  {
    "path": "folder101/",
    "sourceType": "instrument",
    "pattern": "**/*.txt",
    "labels": [
      {
        "name": "instrument",
        "value": "ambr250"
      }
    ]
  }
]

Review and Edit the Connector's Information

The Information tab on the Connector Details page displays information about the Connector and the files that are pending, successfully uploaded, or failed. You can also use it to edit a Connector’s information, metadata, and tags.

To edit the Connector's information, select the Edit button on the Connector Details page's Information tab.

For more information, see Review and Edit a Pluggable Connector's Information.

Set the Connector's Status to Running

Set the Connector's status to Running by following the instructions in Change a Pluggable Connector's Status.

To pause uploads to the TDP, set the Connector's status to Idle.

NOTE: Keep in mind the following:

- The Connector can only receive `ObjectCreated` event types that are stored in Amazon SQS.

- The Connector polls the SQS queue using the long polling option. Rather than specifying a polling interval and querying for all events that have occurred since the last request, the Connector continuously monitors the SQS queue, waiting for new events.

- Events accumulate in the SQS queue until the Connector is set to Running or the events age out of the SQS queue.

- The queue retention period can be set at queue creation, with a maximum limit of 14 days.
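The following sketch illustrates long polling and `ObjectCreated` filtering with a generic SQS consumer. It isn't the Connector's implementation. It assumes that messages arrive either wrapped in an SNS envelope (the default for an SQS subscription without raw message delivery) or as bare S3 event notifications, and the helper names are hypothetical. `WaitTimeSeconds=20` turns on long polling. Each message is deleted only after it's handled, so a failure leaves the message in the queue, and it becomes visible again when the visibility timeout expires.

```python
import json
from urllib.parse import unquote_plus


def object_created_keys(body):
    """Return (bucket, key) pairs for ObjectCreated records in an SQS message body."""
    payload = json.loads(body)
    # Unwrap the SNS envelope if the message came through an SNS subscription.
    if payload.get("Type") == "Notification" and "Message" in payload:
        payload = json.loads(payload["Message"])
    pairs = []
    for record in payload.get("Records", []):  # s3:TestEvent messages have no Records
        if record.get("eventName", "").startswith("ObjectCreated:"):
            s3_info = record["s3"]
            # S3 URL-encodes object keys in event notifications.
            pairs.append((s3_info["bucket"]["name"], unquote_plus(s3_info["object"]["key"])))
    return pairs


def poll_once(sqs_client, queue_url, handle):
    """Long-poll the queue once and delete each message after it's handled."""
    response = sqs_client.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=10,  # SQS maximum per request
        WaitTimeSeconds=20,      # long polling; 20 seconds is the maximum wait
    )
    for message in response.get("Messages", []):
        for bucket, key in object_created_keys(message["Body"]):
            handle(bucket, key)
        # If handle() raises, this message (and any later ones in the batch) isn't deleted.
        sqs_client.delete_message(QueueUrl=queue_url, ReceiptHandle=message["ReceiptHandle"])
```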

Metrics

After a Connector instance is created and running, you can monitor the Connector’s health by selecting the Metrics tab on the Connector Details page. The Metrics tab displays the Connector's container metrics, as well as aggregated statistics on the total files scanned and uploaded and the files that returned errors or are pending.

Access Data

Once the Connector is running and files from your S3 bucket are uploaded to the TDP, you can access the files in the Tetra Scientific Data and AI Cloud.

For more information about how to access your data, see Search.

Limitations

Configuring Multiple Connectors to Poll from One S3 Bucket

IMPORTANT: Special AWS configuration is needed to support multiple Connectors that point to the same S3 bucket. To prevent upload errors to the TDP, it's recommended that each Tetra Amazon S3 Connector point to a distinct SQS queue and S3 bucket.

Reasons for this recommendation include the following:

- The file uploads table in either Connector is an incomplete history of file uploads. Each SQS message is picked from the queue and processed independently by one Connector, which leads to situations where `FileA:v1` is uploaded by `Connector1` and `FileA:v2` is uploaded by `Connector2`.

- The file upload status in a Connector’s file table may be inaccurate. When processing errors occur, SQS messages are returned to the queue and can be picked up by another Connector. This can lead to situations where `FileA:v1` has status `ERROR` in `Connector1` and status `SUCCESS` in `Connector2`.

- Each Connector must be configured independently. Comparing Connector configurations is a manual process in the TDP, which makes it difficult to keep multiple Connectors consistent.

- Potential for duplicate file uploads to the TDP. If a Connector's processing time for a file is longer than the message's visibility timeout, then a file being uploaded by one Connector may also be uploaded by another Connector when the message returns to the SQS queue. The risk of this occurring is greater for large files.

For more information, see the following resources in the AWS documentation:

Process Existing Files in S3 Storage

The S3 Connector relies on `ObjectCreated` S3 events to trigger file uploads to the TDP. Historical files that are already in an S3 bucket aren't uploaded to the TDP, because there are no accompanying events.

One way to upload historical files is to generate synthetic `ObjectCreated` SQS messages, which trigger the TDP upload through the Connector.

The following code example loops through the file objects in an S3 bucket and sends an `ObjectCreated` SQS message for each file that fits the path and pattern specified in the Connector's Path Configuration.

Path Configuration Details

The following Python script uses the Connector's Path Configuration as an example of how S3 file objects can be filtered to match the specified path and pattern in the Connector's Path Configuration.

NOTE: The Path Configuration is at the bottom of the Configuration tab for your Connector in the TDP.

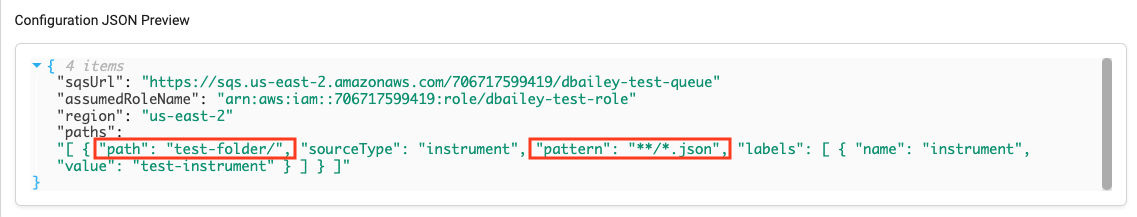

The following screenshot shows the path and pattern that are used as filter conditions in the example Python script.

Example Python Script

NOTE: Before running the example script, run `aws sso login` in your terminal and verify your AWS access. If you don’t sign in, you'll receive credential errors.

import fnmatch
import json
import re
from datetime import datetime, timezone

import boto3

# Initialize the AWS session and the S3 and SQS clients
session = boto3.Session(profile_name='<AWS_PROFILE_NAME>')
s3 = session.client('s3')
sqs = session.client('sqs')

# Define the S3 bucket and SQS queue
s3_bucket_name = "<S3_BUCKET_NAME>"
sqs_url = "<SQS_URL>"

# The bucket's SNS notification configuration provides the configurationId for the messages
s3_config = s3.get_bucket_notification_configuration(Bucket=s3_bucket_name)['TopicConfigurations']

# Filter by `path` from the Connector's 'Path Configurations'
path_filter = 'test-folder/'

# Filter by `pattern` from the Connector's 'Path Configurations'.
# fnmatch's `*` also matches slashes and `**` has no special meaning,
# so this only approximates the Connector's wildcard rules.
regex = re.compile(fnmatch.translate('**/*.json'))

# list_objects_v2 returns at most 1,000 objects per call, so page through the bucket
paginator = s3.get_paginator('list_objects_v2')

# Loop through S3 objects and create synthetic SQS messages for the desired files
for page in paginator.paginate(Bucket=s3_bucket_name):
    for file_idx in page.get('Contents', []):  # 'Contents' is absent from empty pages
        file_object = file_idx['Key']  # 'Key' is the S3 file path + name (for example, test-folder/example.json)

        # You can add code here to process the desired files further.
        # Each entry in 'Contents' has additional metadata that you can use.

        # Apply the `path` and `pattern` filters, then create and send the SQS message
        if path_filter in file_object and regex.match(file_object):
            # S3 event notifications use UTC timestamps with millisecond precision
            timestamp = datetime.now(timezone.utc).isoformat(timespec='milliseconds').replace('+00:00', 'Z')
            sqs_message = json.dumps(
                {
                    'Records': [
                        {
                            'eventVersion': '2.0',
                            'eventSource': 'aws:s3',
                            'awsRegion': s3.meta.region_name,
                            'eventTime': timestamp,
                            'eventName': 'ObjectCreated:Put',
                            'userIdentity': {
                                'principalId': s3.meta.config.user_agent
                            },
                            's3': {
                                'configurationId': s3_config[0]['Id'],
                                's3SchemaVersion': '1.0',
                                'object': {
                                    # ListObjectsV2 wraps ETags in double quotes; S3 event notifications don't
                                    'eTag': file_idx['ETag'].strip('"'),
                                    'key': file_object,
                                    'size': file_idx['Size']
                                },
                                'bucket': {
                                    'arn': 'arn:aws:s3:::{}'.format(s3_bucket_name),
                                    'name': s3_bucket_name
                                }
                            }
                        }
                    ]
                }
            )
            sqs.send_message(QueueUrl=sqs_url, MessageBody=sqs_message)

For more information about the boto3 Python package, see the Boto3 documentation. For more examples, see Amazon SQS examples using SDK for Python (Boto3) in the AWS SDK Code Examples Code Library.

Troubleshooting

Error Messages

| Error Code | Error Message |
|---|---|
| AuthenticationError | Failed to authenticate. Check your AWS credentials and try again. |
| S3AccessDenied | The Connector does not have permission to access the S3 bucket. Check your Connector and AWS configurations and try again. |
| SQSQueueNotFound | SQS queue not found. Check your Connector and AWS configurations and try again. |
| SQSAccessDenied | The Connector does not have permission to access the SQS queue. Check your connector and AWS configurations and try again. |
| UnknownError | Unknown error encountered. Check the Connector's logs for more details. |
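Before you set the Connector to Running, you can run a quick access check with the same credentials or IAM role that you configured in the Connector. The following sketch calls STS `get_caller_identity`, S3 `head_bucket`, and SQS `get_queue_attributes`, and maps failures to the error codes in the table above. The mapping, the AWS error codes it checks for, and the helper names are assumptions for illustration, not the Connector's own logic. For example, pass `sts`, `s3`, and `sqs` clients created from a boto3 session that uses the Connector's credentials.

```python
def _aws_error_code(exc):
    """Return the AWS error code carried by a botocore ClientError, or "" otherwise."""
    response = getattr(exc, "response", None) or {}
    return response.get("Error", {}).get("Code", "")


def check_connector_access(sts_client, s3_client, sqs_client, bucket_name, queue_url):
    """Return a list of (error_code, detail) tuples; an empty list means every check passed.

    Broad `except Exception` keeps the sketch free of a botocore import;
    botocore's ClientError is a subclass of Exception.
    """
    try:
        # Needs no IAM permissions, so a failure usually points to invalid or expired credentials.
        sts_client.get_caller_identity()
    except Exception as exc:
        return [("AuthenticationError", str(exc))]

    problems = []
    try:
        s3_client.head_bucket(Bucket=bucket_name)  # requires s3:ListBucket
    except Exception as exc:
        code = _aws_error_code(exc)
        label = "S3AccessDenied" if code in ("403", "AccessDenied") else "UnknownError"
        problems.append((label, f"{code}: {exc}"))

    try:
        sqs_client.get_queue_attributes(QueueUrl=queue_url, AttributeNames=["VisibilityTimeout"])
    except Exception as exc:
        code = _aws_error_code(exc)
        if code in ("AWS.SimpleQueueService.NonExistentQueue", "QueueDoesNotExist"):
            label = "SQSQueueNotFound"
        elif code in ("AccessDenied", "AccessDeniedException"):
            label = "SQSAccessDenied"
        else:
            label = "UnknownError"
        problems.append((label, f"{code}: {exc}"))
    return problems
```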

Monitor the Connector's Health

For statistical information on the health of the Connector, see Monitor Cloud Connectors Health.

Documentation Feedback

Do you have questions about our documentation or suggestions for how we can improve it? Start a discussion in TetraConnect Hub. For access, see Access the TetraConnect Hub.

NOTE: Feedback isn't part of the official TetraScience product documentation. TetraScience doesn't warrant or make any guarantees about the feedback provided, including its accuracy, relevance, or reliability. All feedback is subject to the terms set forth in the TetraConnect Hub Community Guidelines.
