Tetra Signals Connector

The Tetra Signals Notebook Connector integrates Revvity Signals Notebook with the Tetra Data Platform (TDP), including the Tetra Data Lake and other Tetra Integrations. The Connector allows scientists to leverage the benefits of using the Tetra Scientific Data and AI Cloud without needing to leave their electronic lab notebook (ELN).

Design Overview

The Tetra Signals Notebook Connector transfers data between Signals Notebook and the TDP by using Signals Notebook's support for user-triggered External Actions on Admin Defined Tables.

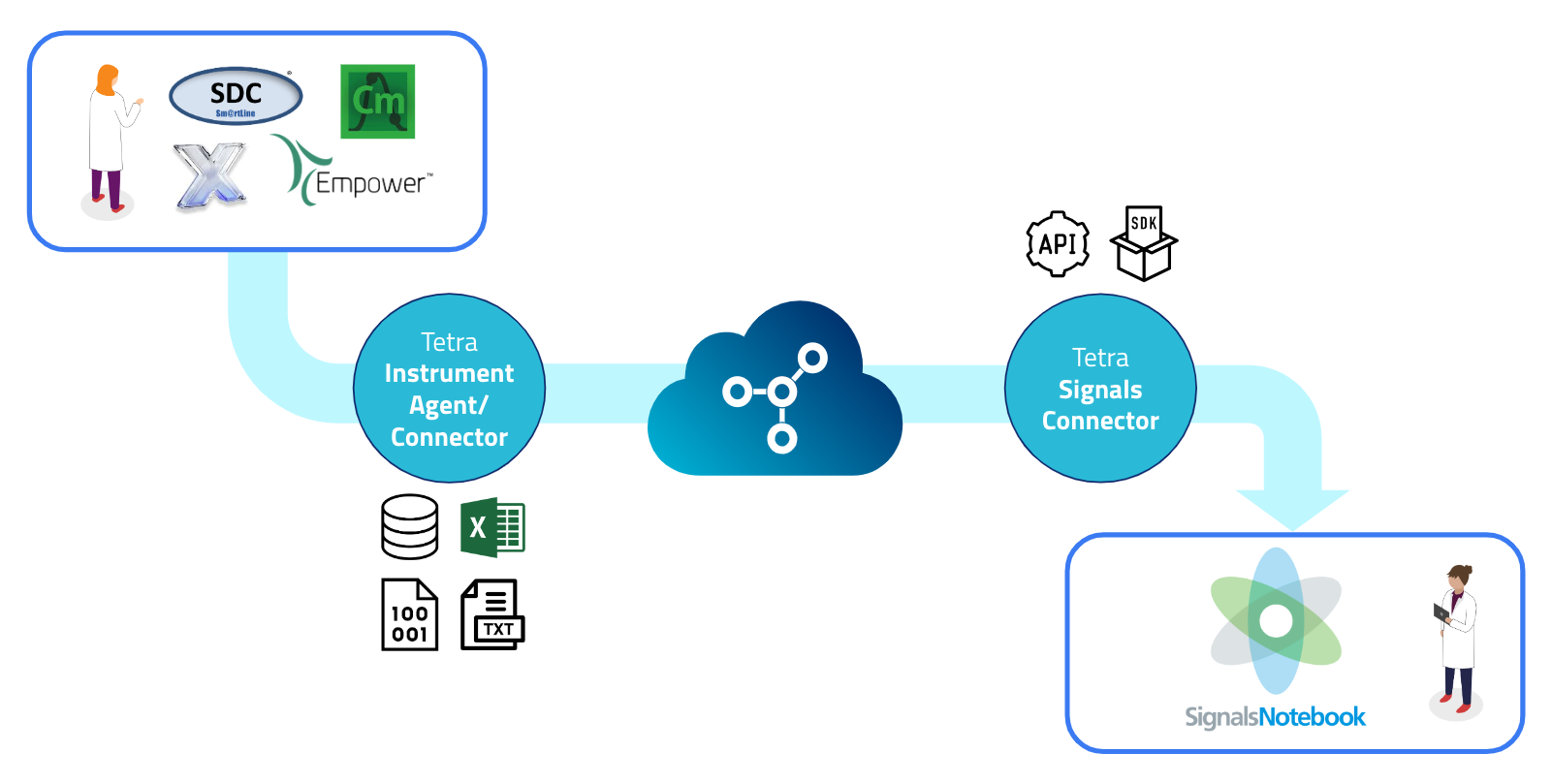

The following diagram shows an example Tetra Signals Notebook Connector workflow.

Figure 1. Illustration of data flow from instruments to the Tetra Data Platform (TDP), and to Signals Notebook.

The diagram shows the following process:

- A scientist begins an experiment preparation in their Signals Notebook Admin Defined Table and initiates an external action to send the spreadsheet data to the TDP through the Connector.

- Tetra Data Pipelines transform this data into an experimental method to be sent to other Tetra Integrations to be transferred to a compatible instrument control system.

- The scientist starts the experiment with method information pre-populated and initiates data generation from the instruments that are integrated with the TDP.

- Tetra Integrations upload the data to the TDP.

- After the data is ingested by the TDP and transformed into a harmonized Intermediate Data Schema (IDS), Signals Notebook users can press an external action button in the Admin Defined Table to populate the table with data pulled from the TDP.

NOTE: The Tetra Signals Notebook Connector is responsible for running the first and last steps of this process. These steps can be added as custom actions to the desired Admin Defined Tables within the Signals Notebook administration settings. For more information and best practices, see Revvity Signals in the TetraConnect Hub. For access, see Access the TetraConnect Hub.

Main Features

- Seamless user experience by integrating with Signals Notebook's External Actions

- Integration with the Tetra Data Lake to pull in instrument data and results integrated via other Tetra Agents and Connectors

- Ability to leverage Tetra Command Service APIs to send method and sequence create commands to Tetra Agents for Chromatography Data Systems

- Flexible configuration of External Actions via self-serviced DataWeave scripts

- Logical organization of self-serviced scripts for Action management

Operations and Use Cases

The Tetra Signals Connector is designed to help scientists integrate their ELN data with their instrument results data. With the TDP as the engine behind user-triggered actions, scientists can work with FAIR data without leaving Signals Notebook.

Leveraging Tetra Data Lake's /searchEql API and DataWeave, customers can easily configure Signals Notebook External Actions to:

- Upload Data from an Admin Defined Table to the Tetra Data Lake

- Pull Data from the Tetra Data Lake into an Admin Defined Table

- Send a Sample Set Method to Waters Empower CDS

- Execute a Sample Measurement to a Simple Balance

Connector Concepts

DataWeave

The DataWeave language is a functional language for performing transformations between different representations of data. It can take XML, CSV or JSON data and convert it to a supported DataWeave format. This functionality provides a powerful tool to programmatically tailor exactly how your data in the TDP should be mapped to specific Signals Notebook spreadsheets while minimizing the security risks that a language like Python might have.

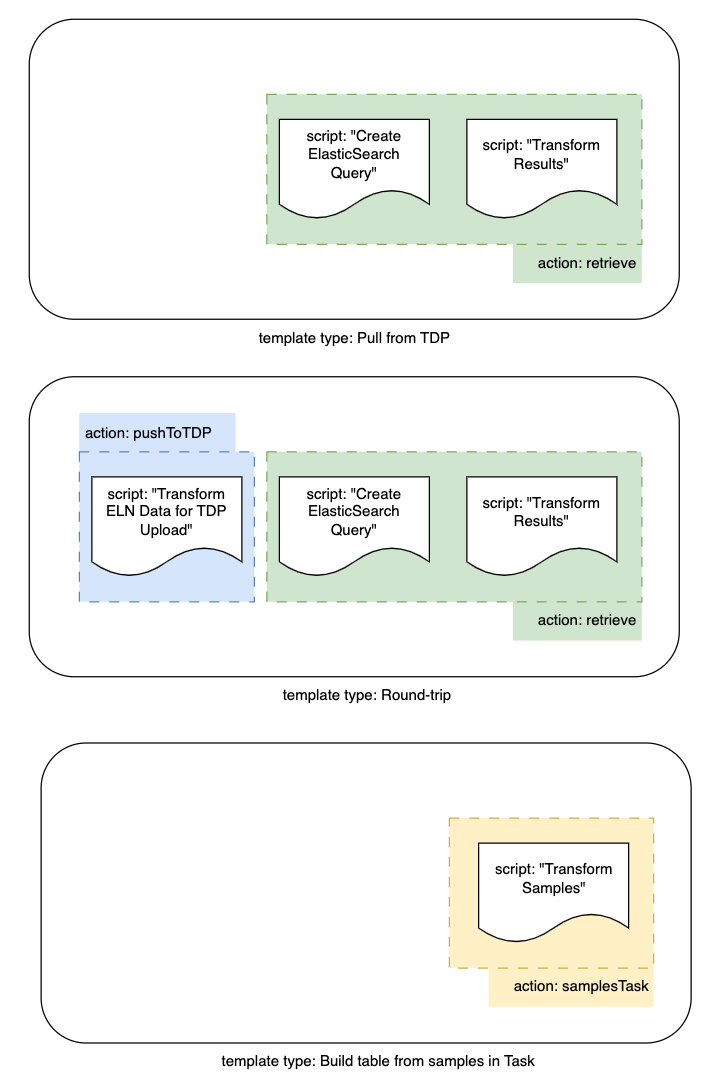

Actions, Scripts, and Templates

- Action: An action is what is to be performed by the trigger of the Signals Notebook External Action. An action will execute one or more scripts.

- Script: A script holds the code logic (DataWeave or Query DSL query) that is to be executed as part of an action call. A script must be part of a template.

- Template: An organizational construct that holds the logical scripts for that specific template type.

A user starts by first selecting from a pre-defined list of template types. They then save their desired business logic encoded into each script, and finally set up the Signals Notebook External Action to call a specific template uuid and the associated action.

Figure 2: Relationship between Templates, Scripts, and Actions

Action Configuration

The Signals Connector supports four external actions that use customer-provided DataWeave scripts for the business logic:

- pushToTDP: Uploads the contents of the Signals Notebook Admin Defined Table to the Tetra Data Lake with a custom file path and attributes.

- retrieve: Searches the Tetra Data Lake with custom Query DSL queries constructed from the Signals Notebook table contents, and allows for a custom transform of the retrieved file content into a Signals Notebook table.

- retrievePlate: Structurally very similar to retrieve, but targets a Signals Plate Container instead of an admin-defined table.

- samplesTask: Looks up the individual properties of each sample, and compiles them into a table to write back to a Signals Notebook admin-defined table.

Once a template contains the appropriate DataWeave scripts, you can configure Signals Notebook External Actions by using the following Action names. External Actions are customizable through Signals Notebook System Configuration. For all Actions that the TetraScience Signals Notebook Connector supports, the expected URL has the following format:

<Connector Host Domain including port>?templateKey=<Template UUID>&action=<Action type>

Expected URL Example

https://tdp-signals-connector.local:3013?templateKey=69759c97-91e1-4a7a-8d8f-29b0dd92052d&action=pushToTDP
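As a minimal sketch of the URL format above, the following TypeScript helper assembles an External Action URL from its parts. The function name `buildExternalActionUrl` and the host `connector.example.com` are hypothetical and used only for illustration; the four action names and the `toDatalake` search param are the ones described on this page.

```typescript
// Action names documented on this page.
type ConnectorAction = "pushToTDP" | "retrieve" | "retrievePlate" | "samplesTask";

// Hypothetical helper: joins host, templateKey, action, and optional search params.
function buildExternalActionUrl(
  host: string,
  templateKey: string,
  action: ConnectorAction,
  extraParams: Record<string, string> = {}
): string {
  const params: string[] = [
    "templateKey=" + encodeURIComponent(templateKey),
    "action=" + encodeURIComponent(action),
  ];
  // Optional search params (for example toDatalake=true) follow the required ones.
  for (const key of Object.keys(extraParams)) {
    params.push(encodeURIComponent(key) + "=" + encodeURIComponent(extraParams[key] ?? ""));
  }
  return host + "?" + params.join("&");
}

// The host is a placeholder, not a real Connector address.
const exampleUrl = buildExternalActionUrl(
  "https://connector.example.com:3013",
  "69759c97-91e1-4a7a-8d8f-29b0dd92052d",
  "pushToTDP",
  { toDatalake: "true" }
);
```

Encoding each value keeps the URL valid if a template key or parameter ever contains reserved characters.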

For details on the Signals External Action configuration interface, see Signals Connector Admin Configuration.

Actions

Table 1: Requirements for what scripts are needed per action.

| Actions | Script: Transform ELN Data for the TDP Upload | Script: Create ElasticSearch Query | Script: Transform Results | Script: Transform Samples |
|---|---|---|---|---|
| pushToTDP | ✅ | | | |
| retrieve | | ✅ | ✅ | |
| retrievePlate | | ✅ | ✅ | |
| samplesTask | | | | ✅ |
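The script requirements per action, as described in the action and template sections of this page, can be sketched as a lookup table. This is an illustrative TypeScript model, not Connector code: `REQUIRED_SCRIPTS` and `missingScripts` are hypothetical names, while the script keys (`upload`, `query`, `results`, `transformSamples`) are the ones used in the Scripts section.

```typescript
type ScriptName = "upload" | "query" | "results" | "transformSamples";
type ActionName = "pushToTDP" | "retrieve" | "retrievePlate" | "samplesTask";

// Which scripts each action runs, per the action and template descriptions.
const REQUIRED_SCRIPTS: Record<ActionName, ScriptName[]> = {
  pushToTDP: ["upload"],
  retrieve: ["query", "results"],
  retrievePlate: ["query", "results"],
  samplesTask: ["transformSamples"],
};

// Hypothetical pre-flight check: which required scripts has a template not filled in yet?
function missingScripts(action: ActionName, provided: ScriptName[]): ScriptName[] {
  return REQUIRED_SCRIPTS[action].filter((name) => provided.indexOf(name) === -1);
}
```

For example, a template that only has a `query` script would report `results` as missing for the `retrieve` action.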

pushToTDP

Supported templates:

- Round-trip

Description:

This action assumes that it has been triggered by a Signals Notebook admin-defined table. It gets the contents of the table, and then uses the upload script to transform that into a command to send to the TDP command service. Upon successful receipt of the command, the action finishes.

Expected inputs in Signals External Action:

- templateKey: UUID of the DataWeave template to call

- action: pushToTDP

- toDatalake (optional): If present (specified with the search param toDatalake=true in the Signals Notebook external action URL), then instead of sending a command to the TDP command service, the Connector tries to upload part of its DW output to the Tetra Data Lake as a file. For details, see Transform ELN Data for the TDP Upload (upload).

NOTE: To use this mode, the TDP configuration section of the Connector must include a user-defined Agent ID.

retrieve

Supported templates:

- Round-trip

- Pull from the TDP

Description:

This action assumes that it has been triggered by a Signals Notebook admin-defined table.

Proposed updates are validated, and then run through a conflict checker that looks for attempts to write multiple inconsistent values to the same place in the target table. If there are no conflicts, the action attempts to push updates to Signals Notebook. If there are conflicts, they require resolution based on user choices from the front-end.

Expected inputs in Signals External Action:

- templateKey: UUID of the DataWeave template to call

- action: retrieve

retrievePlate

Supported templates:

- Round-trip

- Pull from the TDP

Description:

This action assumes that it has been triggered by a Signals Notebook plate container.

This action works very similarly to retrieve, but targets plate containers rather than admin-defined tables. Each update should represent a single annotation layer of a single plate in the container. For this action, conflicts occur whenever more than one file attempts to update the same annotation layer.

Expected inputs in Signals External Action:

- templateKey: UUID of the DataWeave template to call

- action: retrievePlate

samplesTask

Supported templates:

- Build table from samples in Task

Description:

This action assumes that it has been triggered by a Signals Notebook Task. As part of task creation, a number of Signals Notebook Samples can be linked to a task. The action looks up the individual properties of each sample, and then compiles them into a table to write back to a Signals Notebook admin-defined table. There is no conflict checking performed as part of this action.

Expected inputs in Signals External Action:

- templateKey: UUID of the DataWeave template to call

- action: samplesTask

- adtTemplateName: The action creates a new table based on the Signals Notebook template with this name.

- writeTableToRequester (optional): Signals Notebook Tasks can have two associated experiment IDs:

  - a request link, corresponding to where the Task was originally created

  - an experiment link, corresponding to where the Task was assigned

  Note: The default behavior is to write to the experiment link when it is available. If you instead wish to always write to the request link, include the search param writeTableToRequester.
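The choice between the two experiment links can be sketched as follows. This is a hedged illustration only: the type `TaskExperimentLinks`, its field names, and the function `chooseTargetExperiment` are hypothetical, and the fallback to the request link when no experiment link exists is an assumption inferred from "when it is available".

```typescript
// Hypothetical shape for the two experiment IDs a Task can carry.
type TaskExperimentLinks = {
  requestLink: string;     // experiment where the Task was originally created
  experimentLink?: string; // experiment where the Task was assigned, if any
};

function chooseTargetExperiment(
  links: TaskExperimentLinks,
  writeTableToRequester: boolean
): string {
  // With the search param set, always write to the requesting experiment.
  if (writeTableToRequester) {
    return links.requestLink;
  }
  // Default: prefer the experiment link; falling back to the request link is an assumption.
  return links.experimentLink ?? links.requestLink;
}
```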

Scripts

Transform ELN Data for the TDP Upload (upload)

Inputs

- payload: The contents of the Signals Notebook entity that initiated the action, along with one additional field, fullEntityInfo. What is typically of interest in fullEntityInfo is the information about the experiment that the Signals Notebook entity lives in. The experiment ID can be found in fullEntityInfo.data.relationships.ancestors.data, with more complete information about the experiment available in fullEntityInfo.included.
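The lookup described above can be sketched in TypeScript. In a real template this logic would live in the DataWeave upload script; TypeScript is used here only to illustrate the traversal. The type and function names are hypothetical, and the sketch assumes that experiment IDs start with the prefix `experiment:`, as in the examples on this page.

```typescript
// Minimal, hypothetical typings covering only the fields used in this sketch.
type AncestorRef = { type: string; id: string };
type IncludedEntity = {
  type: string;
  id: string;
  attributes?: { type?: string; name?: string };
};
type FullEntityInfo = {
  data: { relationships: { ancestors: { data: AncestorRef[] } } };
  included?: IncludedEntity[];
};

// Finds the experiment ancestor, then its fuller record in `included`.
function findExperiment(info: FullEntityInfo): IncludedEntity | undefined {
  const ancestors = info.data.relationships.ancestors.data;
  // Assumption: experiment IDs are prefixed with "experiment:".
  const ref = ancestors.filter((a) => a.id.indexOf("experiment:") === 0)[0];
  if (ref === undefined) {
    return undefined;
  }
  return (info.included ?? []).filter((entity) => entity.id === ref.id)[0];
}
```

The returned entry's `attributes.name` then gives the experiment name, which is often useful when building a file path or metadata for the upload.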

Example for fullEntityInfo.data.relationships.ancestors.data:

[

{

"type": "entity",

"id": "experiment:01234567-89ab-cdef-0123-456789abcdef",

"meta": {

"links": {

"self": "https://somesandbox.signalsnotebook.revvity.cloud/api/rest/v1.0/entities/experiment:01234567-89ab-cdef-0123-456789abcdef"

}

}

}

]

Example for the corresponding entry in fullEntityInfo.included:

{

"type": "entity",

"id": "experiment:01234567-89ab-cdef-0123-456789abcdef",

"links": {

"self": "https://somesandbox.signalsnotebook.revvity.cloud/api/rest/v1.0/entities/experiment:01234567-89ab-cdef-0123-456789abcdef"

},

"attributes": {

"type": "experiment",

"eid": "experiment:01234567-89ab-cdef-0123-456789abcdef",

"name": "Test Experiment",

"digest": "44600428",

"fields": {

"Description": {

"value": "test"

},

"Name": {

"value": "Test Experiment"

},

"Organization": {

"value": "internal"

},

"Project": {

"value": "[\"Project 1\"]"

}

},

"flags": {}

}

}

Expected outputs

- If not using toDatalake: A payload for the POST endpoint of the TDP command service. The inner payload format depends on the specific integration and command choices. Example:

{

targetId: string, // agent or Connector id

action: string,

metadata?: { [key: string]: string | number },

expiresAt: string, // ISO UTC timestamp,

payload: any

}

- If using toDatalake:

{

filePath?: string, // the TDP file path; defaults to '/signals/uploads/upload-<random-uuid>' if missing

sourceType?: string, // the TDP source type, must be lowercase letters and hyphens; defaults to `signals-Connector` if missing

metadata?: Record<string, string>, // custom metadata, ASCII characters only for key and value

tags?: string[], // custom tags, must be unique, ASCII characters (and no commas)

data: any // this will be the actual file uploaded to the TDP

}

File Size Limitations: The maximum file size supported by the TDP endpoint used is 500 MB.

Attribute Size Limitations: The total number of characters in the JSON representations of metadata and tags must be fewer than 1536. For more context on these constraints, see https://developers.tetrascience.com/reference/user-defined-integration-file-upload
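The constraints on the toDatalake output can be checked before the script is deployed. The following TypeScript sketch is illustrative only: `validateDatalakeUpload` and `DatalakeUploadOutput` are hypothetical names, and summing the lengths of the two JSON strings is an assumption about how the 1536-character limit is counted.

```typescript
type DatalakeUploadOutput = {
  filePath?: string;
  sourceType?: string;
  metadata?: Record<string, string>;
  tags?: string[];
  data: unknown;
};

const ASCII_ONLY = /^[\x00-\x7F]*$/;

// Returns a list of human-readable problems; an empty list means no problem was found.
function validateDatalakeUpload(upload: DatalakeUploadOutput): string[] {
  const errors: string[] = [];
  const metadata = upload.metadata ?? {};
  const tags = upload.tags ?? [];

  if (upload.sourceType !== undefined && !/^[a-z-]+$/.test(upload.sourceType)) {
    errors.push("sourceType must contain only lowercase letters and hyphens");
  }
  for (const key of Object.keys(metadata)) {
    const value = metadata[key] ?? "";
    if (!ASCII_ONLY.test(key) || !ASCII_ONLY.test(value)) {
      errors.push("metadata entry '" + key + "' must be ASCII only");
    }
  }
  for (const tag of tags) {
    if (!ASCII_ONLY.test(tag) || tag.indexOf(",") !== -1) {
      errors.push("tag '" + tag + "' must be ASCII only and contain no commas");
    }
  }
  if (tags.some((tag, index) => tags.indexOf(tag) !== index)) {
    errors.push("tags must be unique");
  }
  // Assumption: the limit applies to the combined length of both JSON strings.
  const attributeSize = JSON.stringify(metadata).length + JSON.stringify(tags).length;
  if (attributeSize >= 1536) {
    errors.push("metadata and tags must total fewer than 1536 characters as JSON");
  }
  return errors;
}
```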

Create ElasticSearch Query (query)

Inputs

- payload: The contents of the Signals Notebook entity that initiated the action.

- experimentData: Contains information about the experiment hosting the Signals Notebook entity that initiated the action.

Expected outputs

- A single valid query.

Transform Results (results)

Skip retrieve option: In the general case, results needs to download files from the TDP. However, for many use cases the information in the indexed OpenSearch document is sufficient, and it is substantially faster to transform the OpenSearch hits themselves. To skip the individual file downloads, the results script should contain the string //@ts-skip-retrieve. The double slashes mark it as a comment to DataWeave (DW), and the string can occur anywhere a DW comment is syntactically valid. A good convention is to include it as a line early in the DW header. With skip retrieve, one DW transform is applied to the list of all OpenSearch hits. Without skip retrieve, one DW transform is applied to each OpenSearch hit and its associated downloaded file.
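The effect of the marker can be illustrated with a short TypeScript sketch. It is an approximation, not the Connector's implementation: the function names are hypothetical, and a plain substring search is assumed to be a good enough stand-in for "anywhere a DW comment is syntactically valid".

```typescript
const SKIP_RETRIEVE_MARKER = "//@ts-skip-retrieve";

// Assumption: a substring check approximates how the marker is detected.
function usesSkipRetrieve(resultsScript: string): boolean {
  return resultsScript.indexOf(SKIP_RETRIEVE_MARKER) !== -1;
}

// With skip retrieve, one transform runs over all hits; otherwise one runs per hit.
function transformCallCount(resultsScript: string, hitCount: number): number {
  return usesSkipRetrieve(resultsScript) ? 1 : hitCount;
}

// A DataWeave results script with the marker placed early in the header.
const sampleResultsScript = [
  "%dw 2.0",
  "//@ts-skip-retrieve",
  "output application/json",
  "---",
  "payload_1",
].join("\n");
```

For a query that returns 25 hits, `sampleResultsScript` would lead to a single transform call and no file downloads, while the same script without the marker would lead to 25 downloads and 25 transform calls.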

Inputs - using skip retrieve:

- payload_1: the list of hits from OpenSearch. In the body of the OpenSearch response, this corresponds to hits.hits.

- payload_2: the contents of the Signals Notebook entity that initiated the action

- payload_3 (optional, for retrieve only): exists only when originTable is set, in which case payload_2 is the contents of originTable and payload_3 is the contents of the target table. (Although the target table is freshly created, the Connector still uses its contents to do things like map column names to column UUIDs.)

- experimentData: contains information about the experiment hosting the Signals Notebook entity that initiated the action

Inputs - without skip retrieve:

- payload_1: a single hit from OpenSearch

- payload_2: the full file corresponding to the hit given in payload_1

- payload_3: the contents of the Signals Notebook entity that initiated the action

- payload_4 (optional, for retrieve only): exists only when originTable is set, in which case payload_3 is the contents of originTable and payload_4 is the contents of the target table. (Although the target table is freshly created and lacking data, the Connector still uses its contents to do things like map column names to column UUIDs.)

- experimentData: contains information about the experiment hosting the Signals Notebook entity that initiated the action
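Because the payload numbering shifts between the two modes, it can help to see the rules side by side. The TypeScript sketch below only models the numbering described in the two input lists; the role labels and function names are hypothetical and do not correspond to Connector identifiers.

```typescript
// Hypothetical labels for what each numbered payload contains.
type ResultsInputRole =
  | "allHits"
  | "singleHit"
  | "downloadedFile"
  | "initiatingEntity"
  | "originTable"
  | "targetTable";

function resultsPayloadRoles(skipRetrieve: boolean, originTableSet: boolean): ResultsInputRole[] {
  // Skip retrieve passes all hits at once; otherwise each hit comes with its file.
  const roles: ResultsInputRole[] = skipRetrieve ? ["allHits"] : ["singleHit", "downloadedFile"];
  if (originTableSet) {
    // When originTable is set, it takes the entity slot and the target table follows it.
    roles.push("originTable", "targetTable");
  } else {
    roles.push("initiatingEntity");
  }
  return roles;
}

// Maps the ordered roles to the names payload_1, payload_2, and so on.
function namePayloads(roles: ResultsInputRole[]): Record<string, ResultsInputRole> {
  const named: Record<string, ResultsInputRole> = {};
  roles.forEach((role, index) => {
    named["payload_" + (index + 1)] = role;
  });
  return named;
}
```

In both modes, experimentData is passed as a separate named input and is not part of this numbering.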

Expected outputs -- retrieve

For retrieve, the DataWeave script should return a list containing elements of type SingleValidUpdate, described below:

type SingleValidUpdate = SingleADTUpdate | SingleAttachmentUpdate;

type SingleADTUpdate = {

id: string,

fileId: string,

filePath: string,

summary?: any,

row: GridRowChange

};

type GridRowChange = GridRowUpdate | GridRowCreate | GridRowDelete;

type GridRowUpdate = {

id: string,

type: "adtRow",

attributes: {

action: "update",

cells: GridCell[]

}

};

type GridRowCreate = {

type: "adtRow",

attributes: {

action: "create",

cells: GridCell[]

}

};

type GridRowDelete = {

id: string,

type: "adtRow",

attributes: {

action: "delete"

}

};

type GridCell = {

key: string,

content: GridCellContent,

type?: string,

name?: string,

};

type GridCellContent = {

value?: number | string,

display?: string,

units?: string,

type?: string,

values?: string[]

};

type SingleAttachmentUpdate = {

id: string;

fileId: string;

filePath: string;

summary?: any;

attach: boolean; // set to true if you want to upload file to Signals

};

- With this format, each update should correspond to either:

  - edits to a single row of an admin-defined table, or

  - a file attachment to send to the experiment containing the admin-defined table. The file name in Signals is determined by the final segment of the TDP filePath. Depending on the file name extension, a preview of the contents may be available in Signals.

- The id of an update is relevant to the conflict checker. If two updates have different IDs, they are never checked for conflicts. Given this style of update, creating rows shouldn’t create conflicts, so a created row can take a random UUID as its ID. A convention of using Update<row-uuid> catches conflicts associated with multiple updates to a single row.

- summary controls a table rendered in the ResultInfo component in the front end. It is a place to put key-value pairs that help users quickly understand what the data is, especially for conflict resolution. The choice of which key-value pairs to include is left to the DW author.
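The role of the update id can be sketched in TypeScript. This is a simplified illustration under stated assumptions: the types are trimmed-down versions of the ones above, `makeRowUpdate` and `idsNeedingConflictCheck` are hypothetical helpers, and the id convention is read as the literal prefix `Update` followed by the row UUID. The real conflict checker also compares the proposed values, which this sketch does not attempt.

```typescript
// Trimmed-down versions of the update types, enough for a row update.
type AdtCell = { key: string; content: { value?: number | string } };
type AdtRowUpdate = {
  id: string;
  type: "adtRow";
  attributes: { action: "update"; cells: AdtCell[] };
};
type AdtUpdate = {
  id: string;
  fileId: string;
  filePath: string;
  summary?: unknown;
  row: AdtRowUpdate;
};

function makeRowUpdate(
  rowId: string,
  columnKey: string,
  value: number | string,
  fileId: string,
  filePath: string
): AdtUpdate {
  return {
    id: "Update" + rowId, // shared by every update that targets this row
    fileId,
    filePath,
    summary: { file: filePath, value },
    row: {
      id: rowId,
      type: "adtRow",
      attributes: { action: "update", cells: [{ key: columnKey, content: { value } }] },
    },
  };
}

// Only updates that share an id are candidates for a conflict check.
function idsNeedingConflictCheck(updates: AdtUpdate[]): string[] {
  const counts: Record<string, number> = {};
  for (const update of updates) {
    counts[update.id] = (counts[update.id] ?? 0) + 1;
  }
  return Object.keys(counts).filter((id) => (counts[id] ?? 0) > 1);
}
```

Two files that both propose a value for the same row produce the same id and are therefore compared, while updates to different rows are never compared with each other.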

Expected outputs -- retrievePlate

For retrievePlate, the output is slightly different:

type PlateContainerUpdate = {

fileId: string,

filePath: string,

plateId: string,

annotationLayer: string,

layerData: { data: PlateUpdateData },

summary?: any

}

type PlateUpdateData = {

type: 'plate',

id: string, // Signals entity ID of plate, e.g. Plate-1

attributes: PlateUpdateDataAttributes

}

type PlateUpdateDataAttributes = {

plateId?: string, // user definable, more likely to be e.g. a barcode

annotationLayers: AnnotationLayerUpdate[] // must be length 1 -- this is an array, but Signals API only accepts 1 layer

}

type AnnotationLayerUpdate = {

id: string, // internal Signals identifier, not annotation layer name

wells: {

wellId: string, // A1, B2, etc.

content: {value: string | number} | {type: string, user: string, value: string}

}[]

}

- In the Signals UI, the plate container UI shows a summary table of all the plates in the container. An "annotation layer" corresponds to a column of this table. Updates should correspond to modifications to a single annotation layer in a single plate.

- At the top level of an update, plateId and annotationLayer can be user-friendly names. Internally, the Connector appends an id field to the update that is these two entries concatenated. So, if you have a plateId of "TetraPlate" and an annotationLayer of "Cell Count", results are shown in the Connector under "TetraPlate-Cell Count".

- Within layerData, references to the ID of the plate and annotation layer must correspond to the Signals internal IDs of these entities, not user-friendly names. The input payload with the plate contents can be used to look up the correct IDs given the user-friendly versions.

- Two updates are in conflict if they come from different files but have the same values for plateId and annotationLayer.

- One file can produce updates to multiple annotation layers, but it cannot produce multiple updates to the same annotation layer. For example, you can't write your update to an annotation layer from one file as a series of updates that do one well at a time.
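The id and conflict rules for plate updates can be summarized in a short TypeScript sketch. The type `PlateUpdateKey` and both function names are hypothetical; the hyphen separator follows the "TetraPlate-Cell Count" example above.

```typescript
// Only the top-level fields that matter for ids and conflicts.
type PlateUpdateKey = { fileId: string; plateId: string; annotationLayer: string };

// The Connector-side id: plateId and annotationLayer joined with a hyphen.
function plateUpdateId(update: PlateUpdateKey): string {
  return update.plateId + "-" + update.annotationLayer;
}

// Conflict rule: different source files, same plate and same annotation layer.
function plateUpdatesConflict(a: PlateUpdateKey, b: PlateUpdateKey): boolean {
  return (
    a.fileId !== b.fileId &&
    a.plateId === b.plateId &&
    a.annotationLayer === b.annotationLayer
  );
}
```

The fields are compared separately rather than through the joined id, so that a hyphen inside a plate or layer name cannot produce a false match.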

Transform Samples (transformSamples)

Inputs

- payload_1: the properties of all samples listed in the task. This has type GetSamplePropertiesResponseBody[].

- payload_2: the contents of the newly created admin-defined table that the action writes to.

Expected outputs

The DataWeave script should return a list of type SingleValidUpdate[]. Elements are described below:

type SingleValidUpdate = SingleADTUpdate | SingleAttachmentUpdate;

type SingleADTUpdate = {

id: string,

fileId: string,

filePath: string,

summary?: any,

row: GridRowChange

};

type GridRowChange = GridRowUpdate | GridRowCreate | GridRowDelete;

type GridRowUpdate = {

id: string,

type: "adtRow",

attributes: {

action: "update",

cells: GridCell[]

}

};

type GridRowCreate = {

type: "adtRow",

attributes: {

action: "create",

cells: GridCell[]

}

};

type GridRowDelete = {

id: string,

type: "adtRow",

attributes: {

action: "delete"

}

};

type GridCell = {

key: string,

content: GridCellContent,

type?: string,

name?: string,

};

type GridCellContent = {

value?: number | string,

display?: string,

units?: string,

type?: string,

values?: string[]

};

type SingleAttachmentUpdate = {

id: string;

fileId: string;

filePath: string;

summary?: any;

attach: boolean;

};

Templates

The actions supported by the Signals Connector each use separate DataWeave scripts at different steps of the process. Templates help organize DataWeave scripts into logical groupings as guided by business or scientific workflows. Three distinct types of templates are available:

Table 2: Available template types and their supported action types.

| Template Type | Supported Action Types |
|---|---|
| roundTrip | pushToTDP, retrieve, retrievePlate |
| pullFromTDP | retrieve, retrievePlate |
| samplesTask | samplesTask |

Round-trip

Supported Signals Notebook entity type:

- Admin-defined table

Component scripts:

- “Transform ELN Data for the TDP Upload” – upload

- “Create Elasticsearch Query” – query

- “Transform Results” – results

Use case: This template is for round-trip data flow. A representative use case would be sending data to the TDP to set up a chromatography run, and later retrieving the data to Signals Notebook. This is implemented as two separate external actions in Signals Notebook:

- The first action, pushToTDP, uses the script “Transform ELN Data for the TDP Upload” to convert ELN output into a payload for the TDP command service. (The script name dates from when this action uploaded a file to the TDP.)

- The second action, retrieve, uses the scripts “Create Elasticsearch Query” and “Transform Results”. The query script takes data from the initiating admin-defined table and forms an Elasticsearch query to submit using the TDP API. The results script takes the resulting hits and downloaded files and converts them into a payload for the Signals Notebook admin-defined table bulk update API endpoint.

Pull from the TDP

Supported Signals Notebook entity type:

- Admin-defined table

Component scripts:

- “Create Elasticsearch Query” – query

- “Transform Results” – results

Use case: This template is for pulling data from the TDP to Signals Notebook. It uses a single external action retrieve, which behaves as documented in Round-trip.

Build table from samples in Task

Supported Signals Notebook entity type:

- Task

Component scripts:

- “Transform Samples” – transformSamples

Use case: This template is called by a Signals Notebook Task, extracts samples from the "Reference ID" column of the task, and uses them to build a table to send back to Signals Notebook. It uses a single external action.

- The external action uses the script “Transform Samples”. This script transforms the obtained samples into the appropriate form to send back to Signals Notebook as an admin-defined table. The Connector creates a new table from the Signals Notebook admin-defined table template specified by the search param adtTemplateName. The table can be written either to the experiment that initially created the Task, or to the experiment where an assigned Task has its external action clicked. This choice is specified by the search param writeTableToRequester.
