Self-Service Pipelines

A self-service pipeline is a custom pipeline that you build yourself, using the TetraScience Software Development Kit (SDK).

Are custom scripts available in my environment?

Custom scripts are only available in select single-tenant instances of the Tetra Data Platform (TDP). Please speak to your customer success manager if you are interested in this feature.

This page lists the prerequisites, gives an introductory overview, and walks through creating a task script and a master script in detail. It also provides important details about artifacts.

Prerequisites

- Building your own pipeline requires that you know how to program in Python and JavaScript. If you aren't familiar with Python, consider using one of our standard out-of-the-box (OOB) pipelines or contacting Professional Services.

- Review the Pipeline Overview to learn more before starting.

- To better understand the folder structure, first set up your environment by following the Setup and Deploy Instructions Steps 1 and 2.

Introduction

To run a data processing script on the Tetra Data Platform (TDP), you need to create a master script and at least one task script. A master script can have one or more steps. Each step is a piece of logic you want to perform, and each step invokes a function defined in a task script.

Master scripts are written in JavaScript (Node.js) and orchestrate the tasks so that they run in the correct order. Task scripts can be written in Python.

This document explains how to create a task script and a master script. After both scripts are defined, it covers how to set up and deploy the scripts to TDP.

Create a Task Script

When you initialized the TS SDK in Step 2 of the Set Up and Deployment Instructions, you created several folders including the task script folder.

A task script folder contains the following files:

- config.json - required, describes all the entry-point functions invokable from the master script.
- Python scripts - required, contain your business logic. Conventionally, the entry-point file is named main.py.
- README.md - provides documentation on the scripts.
- requirements.txt - specifies third-party Python modules needed.

Here is an illustrative example:

config.json:

    {
      "language": "python",
      "functions": [
        {
          "slug": "process_file", // (1)
          "function": "main.process_file" // (2)
        }
      ]
    }

main.py:

    def process_file(input: dict, context: object):
        """
        Logic:
        1. Get input file length
        2. Get offset from pipeline config
        3. Write a text file to Data Lake

        Args:
            input (dict): input dict passed from master script
            context (object): context object

        Returns:
            None
        """
        print("Starting task")

        input_data = context.read_file(input["inputFile"])  # 1
        length = len(input_data["body"])
        offset = int(input["offset"])  # 2

        context.write_file(  # 3
            content=f"length + offset is {length + offset}",
            file_name="len.txt",
            file_category="PROCESSED"
        )

        print("Task completed")

Basic demo task script

config.json

This file exposes all the functions you want to invoke from master scripts.

For each object in the functions array, slug (1) is a name you define that is used to invoke the function from the master script. It must be unique within this task script. Conventionally, the slug is the same as the name of the Python function.

function (2) is a reference to the Python function, prefixed by the module where it’s defined and separated from it by a dot (.). In this case, it refers to the process_file function in the main.py module.
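The two rules above (unique slugs, and function references of the form module.function) can be checked locally before deploying. The following is a minimal sketch, not part of the TS SDK: check_task_config is a hypothetical helper name, and it only inspects the structure shown in the example config.json, without the illustrative // comments.

```python
import json


def check_task_config(config: dict) -> list:
    """Return a list of problems found in a parsed config.json (empty if none)."""
    problems = []
    seen_slugs = set()
    for entry in config.get("functions", []):
        slug = entry.get("slug", "")
        reference = entry.get("function", "")
        # Slugs must be unique within one task script.
        if slug in seen_slugs:
            problems.append(f"duplicate slug: {slug}")
        seen_slugs.add(slug)
        # A reference looks like "<module>.<function>", e.g. "main.process_file".
        module_name, dot, function_name = reference.rpartition(".")
        if not (dot and module_name and function_name):
            problems.append(f"bad function reference for {slug}: {reference!r}")
    return problems


# Hypothetical helper applied to the example config from this page.
example = json.loads("""
{
  "language": "python",
  "functions": [
    {"slug": "process_file", "function": "main.process_file"}
  ]
}
""")
print(check_task_config(example))  # prints []
```

An empty list means both rules hold. A reference without a module part, such as "process_file", would be reported.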

main.py

In this example, process_file is the entrypoint to the main business logic. We can see there are two arguments passed in - input and context.

input is defined in the master script and input["inputFile"] is a reference to a file in the data lake (more in Master Script section).

context provides necessary APIs for the task script to interact with the TDP. You can see in this example that:

- It first reads the file using the context.read_file function (1).
- Then, it gets the offset from the input object (2). Note: offset is passed in from the master script (more in Master Script section).
- Lastly, it writes a new file to Data Lake using the context.write_file function (3).

You can find all context API definitions in the Context API doc.
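Because process_file only touches the platform through context, it can be exercised locally with a stand-in object. The sketch below is an assumption-laden illustration: FakeContext is a hypothetical test double, not the real context API, and it mirrors only the two calls used in the example (read_file returning a dict with a "body" key, and write_file with the three keyword arguments shown). The file reference is simplified to a string here.

```python
class FakeContext:
    """Hypothetical stand-in for the platform context, for local testing only."""

    def __init__(self, files: dict):
        self.files = files   # maps a file reference to its raw bytes
        self.written = []    # records every write_file call

    def read_file(self, file_ref):
        # Mirrors the shape used in the example: a dict with a "body" key.
        return {"body": self.files[file_ref]}

    def write_file(self, content, file_name, file_category):
        self.written.append(
            {"content": content, "file_name": file_name, "file_category": file_category}
        )


def process_file(input: dict, context: object):
    # Same logic as the example task script on this page, without the prints.
    input_data = context.read_file(input["inputFile"])
    length = len(input_data["body"])
    offset = int(input["offset"])
    context.write_file(
        content=f"length + offset is {length + offset}",
        file_name="len.txt",
        file_category="PROCESSED",
    )


# "raw/sample.txt" is a made-up reference; the body is 5 bytes long.
context = FakeContext({"raw/sample.txt": b"hello"})
process_file({"inputFile": "raw/sample.txt", "offset": 3}, context)
print(context.written[0]["content"])  # prints: length + offset is 8
```

Recording the writes in a list keeps the check simple: the test inspects what the task tried to write instead of reading anything back from a data lake.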

Now that we have created a straightforward task script, we can create a master script.

requirements.txt

You will most likely use some third-party Python modules in your Python scripts. You will need to create a requirements.txt file and put it in the root of your task script folder (at the same level as config.json). Then during the build process, the builder will install those packages as well.

To generate requirements.txt, if you are using pipenv, you can run:

    pipenv lock -r > requirements.txt

Newer pipenv releases no longer accept the -r flag on lock; there, use pipenv requirements > requirements.txt instead.

If you are using pip, you can run:

    pip freeze > requirements.txt

If you are not familiar with how the requirements.txt file works, there are many resources online, including https://packaging.python.org.

Create a Master Script

Create another folder for the master script (if you set up your environment as described in Prerequisites, it's already created). A master script folder requires two files:

- script.js - required, describes the order in which to invoke the task script functions.
- protocol.json - required, describes the pipeline configuration parameters.

Note: "protocol" and "master script" share similar meanings. "master script" is a TetraScience-defined term while "protocol" is easier for anyone to understand.

Here’s an example master script:

protocol.json:

    {
      "protocolSchema": "v2", // protocol schema version, please use "v2"
      "name": "offset length", // protocol name displayed on UI
      "description": "A demo", // protocol description displayed on UI
      "steps": [
        {
          "slug": "process_file",
          "description": "Get file content length, add offset, write ",
          "type": "generator",
          "script": {
            "namespace": "private-<your_org_slug>",
            "slug": "offset-length",
            "version": "v1.0.0"
          },
          "functionSlug": "process_file"
        }
      ],
      "config": [
        {
          "slug": "offset", // variable name referred in task script
          "name": "offset", // human-readable name displayed on UI
          "type": "number", // enum ["string", "boolean", "number", "object", "secret"], more on "object" and "secret" in Pipeline Configuration section
          "step": "process_file", // display which step this config belongs to on UI. Has to match one "slug" in "steps"
          "required": true // If true, this config param is mandatory on UI
        }
      ]
    }

script.js:

    /**
     * @param {Object} workflow
     * @param {function(path: string)} workflow.getContext Returns context prop by path
     * @param {function(slug: string, input: Object, overwrite: Object = {}): Promise<*>} workflow.runTask Runs a task
     * @param {{info: Function, warn: Function, error: Function}} utils.logger
     * @returns {Promise<*>}
     */
    async (workflow, { logger }) => {
      // custom logic starts
      logger.info('here we start the workflow...');

      const { offset } = workflow.getContext('pipelineConfig');

      await workflow.runTask(
        "process_file", // (1)
        {
          inputFile: workflow.getContext("inputFile"), // (2)
          offset, // (3)
          // other things you want to pass to task script
        }
      );
    };

protocol.json

The example above illustrates the most common usage pattern - a master script that runs a single step - process_file.

The meanings of protocolSchema, name, and description are given in the code comments.

Let's look at steps. It's an array of objects, and each object is a step in the protocol. The key fields are:

- slug - Name you define for this step.
- type - Please use "generator".
- script - Contains information about the task script. Using this information, the master script is able to refer to the task script used.
  - namespace - Each organization on TDP will have its own namespace, and it will have a prefix of "private-". If your org slug is "tetra", your namespace will be "private-tetra".
  - slug - Name of your task script folder.
  - version - Used for version control, it is your task script version.
- functionSlug - Function you want to invoke from the task script, as defined in config.json in the task script folder.
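The cross-references described above (each config entry's step must match a step slug, each step needs a complete script identifier, and each functionSlug must exist in the task script's config.json) can be sanity-checked before deployment. This is a minimal sketch under stated assumptions: check_protocol is a hypothetical helper, not an SDK function, and it assumes every step points at the same task script, whose function slugs are passed in as a set.

```python
def check_protocol(protocol: dict, task_function_slugs: set) -> list:
    """Return a list of problems found in a parsed protocol.json (empty if none)."""
    problems = []
    steps = protocol.get("steps", [])
    step_slugs = {step.get("slug") for step in steps}

    for step in steps:
        label = step.get("slug", "<no slug>")
        script = step.get("script", {})
        # The task script is identified by namespace, slug and version.
        for key in ("namespace", "slug", "version"):
            if not script.get(key):
                problems.append(f"step {label}: script.{key} is missing")
        # functionSlug must name a function exposed in the task script's config.json.
        function_slug = step.get("functionSlug")
        if function_slug not in task_function_slugs:
            problems.append(f"step {label}: functionSlug {function_slug!r} is not in config.json")

    for param in protocol.get("config", []):
        # Each config parameter must point at an existing step slug.
        if param.get("step") not in step_slugs:
            problems.append(
                f"config {param.get('slug')!r}: step {param.get('step')!r} matches no step slug"
            )
    return problems


# The example protocol from this page, using "tetra" as the org slug.
protocol = {
    "protocolSchema": "v2",
    "name": "offset length",
    "steps": [
        {
            "slug": "process_file",
            "type": "generator",
            "script": {"namespace": "private-tetra", "slug": "offset-length", "version": "v1.0.0"},
            "functionSlug": "process_file",
        }
    ],
    "config": [
        {"slug": "offset", "name": "offset", "type": "number", "step": "process_file", "required": True}
    ],
}
print(check_protocol(protocol, {"process_file"}))  # prints []
```

Returning a list of messages rather than raising on the first error lets you see every mismatch in one pass.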

Please refer to artifacts to learn how your custom scripts are deployed as an artifact.

script.js

The script does two things: it gets the pipeline configuration and runs the task.

The first argument to the function is workflow. The workflow object passed to the master script is analogous to the context object passed to the task script - it lets you interact with the platform. It supports the following APIs:

- workflow.getContext("inputFile") - Returns the input file that triggered the pipeline.
- workflow.getContext("pipelineConfig") - Returns the pipeline config object.
- workflow.runTask(stepSlug, input) - Runs a specific task script. It uses the step slug from protocol.json.

Note: You can also get pipeline config from the context object in the task script. However, we recommend passing the pipeline config from the master script to make the task script less dependent on context. It's also easier to write tests for your task script.

logger can be used for logging messages you want to display on the UI when a workflow is run. The logger supports 4 levels: "debug", "info", "warn", "error".

Understanding Artifacts

The protocol.json and script.js files refer to task scripts using a namespace, slug, and version. In the platform, this type of three-part identifier is also used to identify and organize other “artifacts” such as master scripts and IDS schemas.

Namespace

Namespaces are labeled following a specific naming convention:

- common is used for artifacts published by TetraScience that are accessible to all organizations.
- client-xyz is used for artifacts published by TetraScience that are accessible to organization xyz.
- private-xyz is used for artifacts published by organization xyz for their own use.

When creating custom pipelines, only private-xyz namespaces are supported.

Organizations with slugs starting with xyz, for example, xyz-a, xyz-anything can access artifacts in namespace common, client-xyz and private-xyz. Organizations with slugs starting with xyz can only publish artifacts to namespace private-xyz.

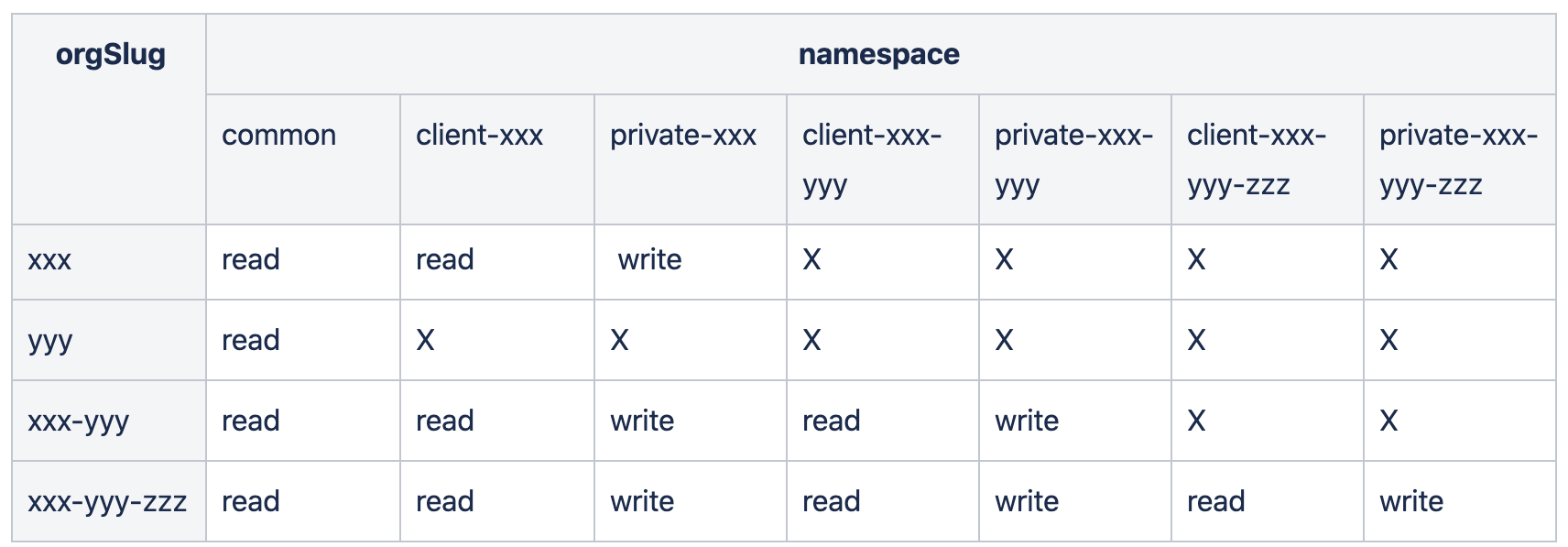

Whether your org has access to a given artifact depends on the namespace the artifact belongs to. The table below shows the access rules for orgs to artifacts defined in certain namespaces.

Notes:

- read access is automatically granted if you have write access.
- Only admins of the organization can deploy artifacts.
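The namespace rules described in this section can be summarized as a small function. This is only a sketch of the prose rules, not the platform's implementation: allowed_access is a hypothetical name, the admin-only deployment rule is not modeled, and "slug starting with xyz" is assumed to mean either exactly xyz or xyz followed by a hyphen (as in xyz-a).

```python
def allowed_access(org_slug: str, namespace: str) -> set:
    """Return the access an org has to a namespace: a subset of {"read", "write"}."""
    # Artifacts in "common" are readable by every organization.
    if namespace == "common":
        return {"read"}

    # Other namespaces look like "<kind>-<owner>", e.g. "client-xyz" or "private-xyz".
    kind, _, owner = namespace.partition("-")
    # Assumption: an org matches when its slug is the owner or starts with "<owner>-".
    matches = bool(owner) and (org_slug == owner or org_slug.startswith(owner + "-"))
    if not matches:
        return set()

    if kind == "client":
        return {"read"}           # published by TetraScience for this organization
    if kind == "private":
        return {"read", "write"}  # write access implies read access
    return set()


for namespace in ("common", "client-xyz", "private-xyz", "private-abc"):
    print(namespace, sorted(allowed_access("xyz-a", namespace)))
```

For the org slug xyz-a, this grants read access to common and client-xyz, read and write access to private-xyz, and nothing for private-abc.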
