Overview

Introduction

All automated data operations and transformations are handled by the Tetra Data Pipelines.

The most commonly used pipelines parse and standardize raw data from instruments, cloud repositories, and other laboratory data sources. The output files are indexed according to a predefined schema and stored in the Data Lake, where they can be easily searched and filtered via the web UI.

TetraScience also supports Pipelines for:

- Data transformation, e.g. adding a calculated field to the standard data fields

- Data transportation, e.g. uploading results to an external ELN or LIMS

NOTE: Raw files that do not pass through a Data Pipeline are still searchable using Default and Custom Metadata fields, but their contents are not parsed.

Terminology

Figure 1. What is a Pipeline

In the Tetra Data Platform, pipelines are a way to configure a set of actions to happen automatically each time new data is ingested into the data lake. More precisely, a pipeline consists of:

- Trigger

- Master Script

- Notification

- User-defined fields - pipeline name, description, metadata, tags

Trigger: the criteria a newly ingested data file must satisfy in order to run the actions. Every time there is a new file or file version in the data lake, the Data Pipelines will check if the new file matches any trigger conditions. Admins may configure the trigger directly through the TetraScience User Interface by navigating to the Pipeline page via the main dropdown menu and defining a set of file conditions.

When an event matches the trigger condition of a pipeline, a workflow is created based on the pipeline's workflow definition (a.k.a. Master Script).

Workflow: a single execution of a pipeline. A workflow contains multiple steps, and each step executes a Task Script.

Master Script: the workflow definition. It describes which Task Scripts are used and what user configuration is available. In the UI it is displayed as "Protocol".

Task Script: describes how to run a task. This is where the business logic (e.g. data transformation) lives.

Notification: lets you configure push notifications that are sent when a pipeline finishes (completed or failed).
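To make the relationship between these terms concrete, here is a minimal sketch in Python. It is not the Tetra Data Platform API: every class, function, and field name (`TaskScript`, `MasterScript`, `Pipeline`, `run_workflow`, `on_new_file`, and the file record keys) is hypothetical and chosen only for illustration. It assumes a file is represented as a plain dictionary and that steps run sequentially.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class TaskScript:
    """One unit of business logic (hypothetical model)."""
    name: str
    run: Callable[[dict], dict]  # takes a file record, returns a transformed record


@dataclass
class MasterScript:
    """Workflow definition: which Task Scripts run, plus user configuration."""
    steps: List[TaskScript]
    config: Dict[str, object] = field(default_factory=dict)


@dataclass
class Pipeline:
    name: str
    trigger: Callable[[dict], bool]      # criteria a new file must satisfy
    master_script: MasterScript
    notify: Callable[[str, str], None]   # called with (pipeline name, status)


def run_workflow(pipeline: Pipeline, file_record: dict) -> Optional[dict]:
    """Execute every step in order, then notify with 'completed' or 'failed'."""
    data = file_record
    try:
        for step in pipeline.master_script.steps:
            data = step.run(data)
    except Exception:
        pipeline.notify(pipeline.name, "failed")
        return None
    pipeline.notify(pipeline.name, "completed")
    return data


def on_new_file(pipelines: List[Pipeline], file_record: dict) -> List[Optional[dict]]:
    """Start one workflow for each pipeline whose trigger matches the new file."""
    return [run_workflow(p, file_record) for p in pipelines if p.trigger(file_record)]


# Usage: a single pipeline that marks RAW files as parsed.
sent = []  # notifications are collected in a list here, for illustration only

pipeline = Pipeline(
    name="example-pipeline",
    trigger=lambda f: f.get("category") == "RAW",
    master_script=MasterScript(
        steps=[TaskScript("parse", lambda f: {**f, "parsed": True})]
    ),
    notify=lambda name, status: sent.append((name, status)),
)

results = on_new_file([pipeline], {"category": "RAW", "file_path": "run1.csv"})
```

In this sketch a file that does not match the trigger produces no workflow at all, and a failing step ends the workflow and sends a "failed" notification.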

Permission: Only administrators of an organization can define, modify, and enable or disable pipelines.

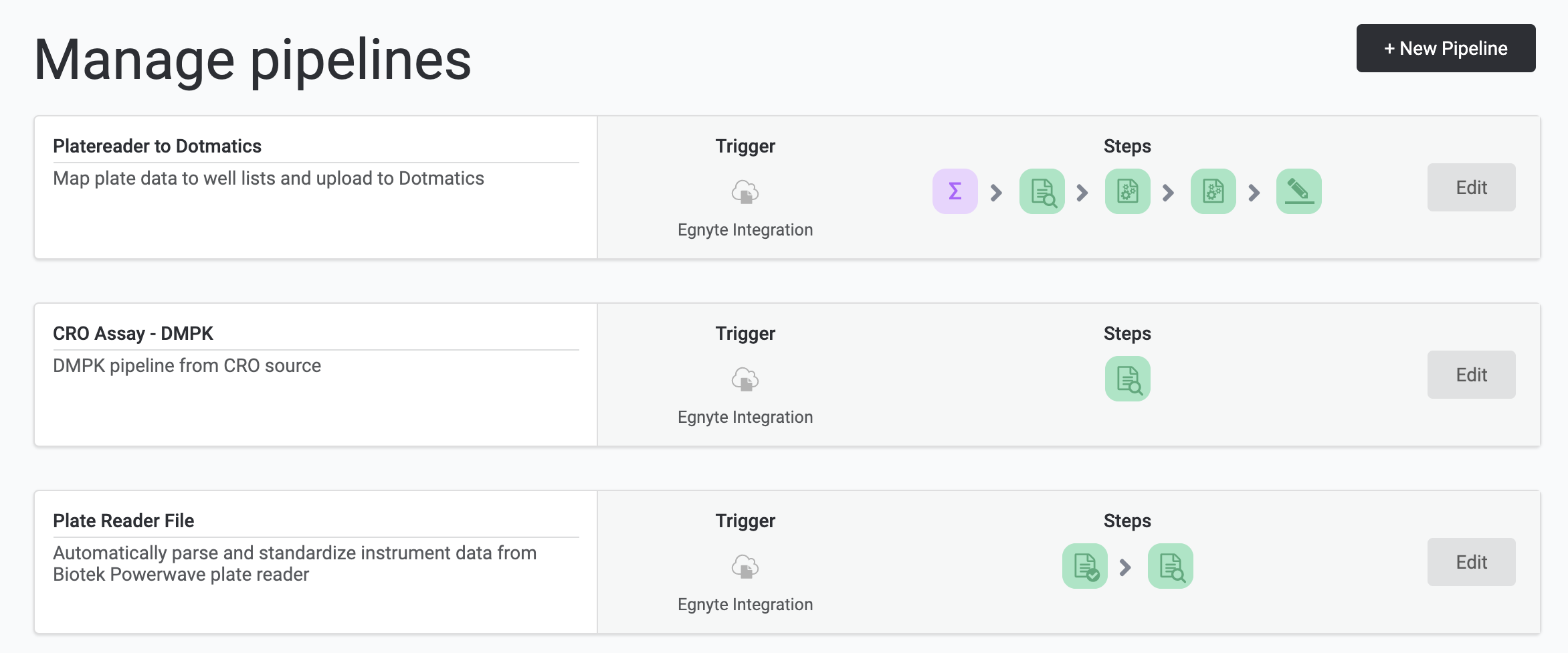

Manage Your Pipelines

All users can view the existing Pipelines by navigating to the main pipeline page via the dropdown menu.

Set up a Pipeline

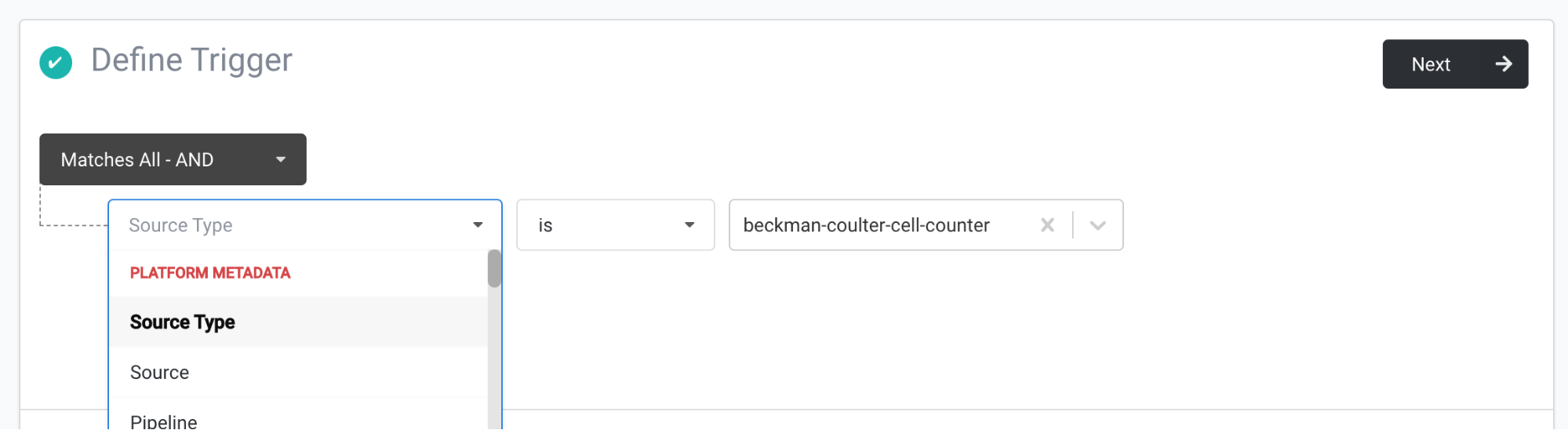

Defining trigger conditions

The trigger for a pipeline can be any boolean combination (AND/OR/NOT) of the following individual criteria:

- Data source - whether the data came from a particular agent or connector

- Source type - whether the data is associated with a specific source type identifier (each identifier corresponds to a specific type of instrument data format)

- Pipeline - whether the data was generated by a specific pipeline

- IDS type - whether the data has been associated with a certain data type after parsing and harmonization

- File path - whether the file’s path within the data lake matches a certain pattern

- File category - whether the file is RAW (sourced directly from an instrument), IDS (harmonized JSON), or PROCESSED (auxiliary data extracted from a RAW file)

- Tags - whether a file has been associated with a particular user-defined tag

- Custom metadata - whether a file has been associated with a particular user-defined metadata key-value pair

- Any custom-defined field - a field from a specific IDS, selected under "Custom Search Filters"
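The boolean combination of criteria described above can be sketched as composable predicates. This is an illustrative model only, not how the platform stores or evaluates triggers: the helper names (`field_equals`, `path_matches`, `has_tag`, `all_of`, `any_of`, `negate`), the file record keys, and the example values are all assumptions, and glob-style path matching is one possible reading of "matches a certain pattern".

```python
from fnmatch import fnmatchcase

# Each criterion is a function that takes a file record (a dict) and returns a bool.


def field_equals(key, value):
    """Criterion: the file record has exactly this value for the given key."""
    return lambda f: f.get(key) == value


def path_matches(pattern):
    """Criterion: the file path matches a glob-style pattern (assumed syntax)."""
    return lambda f: fnmatchcase(f.get("file_path", ""), pattern)


def has_tag(tag):
    """Criterion: the file carries the given user-defined tag."""
    return lambda f: tag in f.get("tags", [])


def all_of(*conds):  # AND
    return lambda f: all(c(f) for c in conds)


def any_of(*conds):  # OR
    return lambda f: any(c(f) for c in conds)


def negate(cond):  # NOT
    return lambda f: not cond(f)


# RAW files that come from a given source type OR sit under a given path,
# AND are NOT tagged "do-not-process". All values are made up.
trigger = all_of(
    field_equals("category", "RAW"),
    any_of(
        field_equals("source_type", "example-source-type"),
        path_matches("*/plate-reader/*"),
    ),
    negate(has_tag("do-not-process")),
)

raw_file = {
    "category": "RAW",
    "source_type": "other",
    "file_path": "org/plate-reader/run1.csv",
    "tags": [],
}
tagged_file = {**raw_file, "tags": ["do-not-process"]}

matches = trigger(raw_file)          # path criterion satisfies the OR branch
skipped = not trigger(tagged_file)   # NOT branch rejects the tagged file
```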

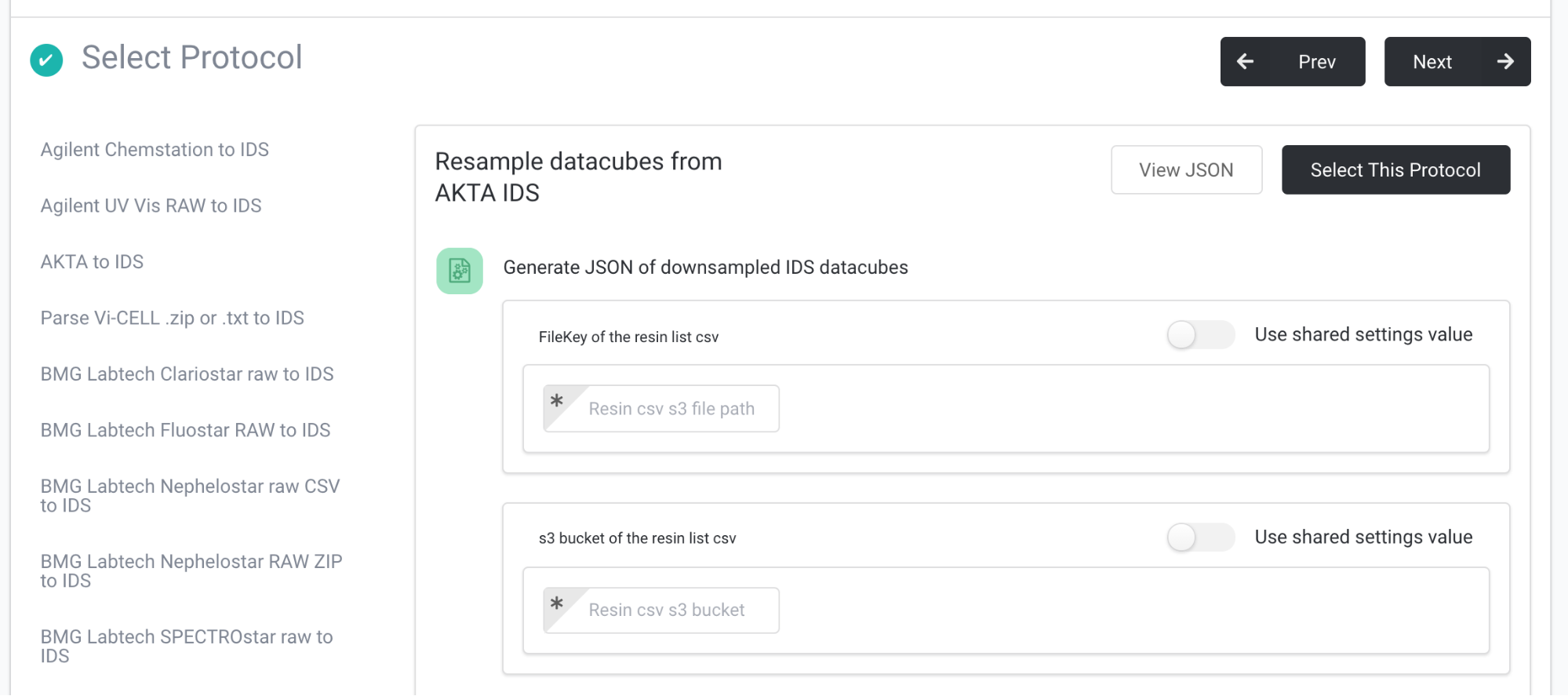

Selecting and configuring a protocol

After defining the trigger, the administrator can select a protocol to process the resulting files. A protocol may offer configuration options such as numerical thresholds, URLs or credentials. Values for these configuration options can be set per-pipeline, or managed centrally across an organization. Credentials are stored securely using AWS Secrets Manager.

TetraScience maintains a number of protocols, each corresponding to an instrument data format and/or a data destination. Over time, protocols may change to extract additional data, improve data modeling, or become more robust. To keep pipelines reproducible, each protocol has a version number.
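As a rough illustration of the two ideas in this section, the sketch below pins a pipeline to a specific protocol version and resolves configuration values that are either set on the pipeline or looked up from organization-wide settings. Everything here is hypothetical: `ProtocolRef`, `SharedRef`, `resolve_config`, the protocol name, the version string format, and the setting keys are invented for the example and do not reflect the platform's actual configuration format. Credentials are deliberately left out, since the platform stores them in AWS Secrets Manager.

```python
from dataclasses import dataclass
from typing import Dict, Union


@dataclass(frozen=True)
class ProtocolRef:
    """A protocol pinned to one version so reruns use the same logic."""
    name: str
    version: str


@dataclass(frozen=True)
class SharedRef:
    """Placeholder for a value managed centrally for the organization."""
    key: str


ConfigValue = Union[str, int, float, SharedRef]


def resolve_config(
    pipeline_config: Dict[str, ConfigValue],
    org_settings: Dict[str, object],
) -> Dict[str, object]:
    """Replace each SharedRef with the organization-level value it points to."""
    resolved = {}
    for name, value in pipeline_config.items():
        if isinstance(value, SharedRef):
            if value.key not in org_settings:
                raise KeyError(f"organization setting {value.key!r} is not defined")
            resolved[name] = org_settings[value.key]
        else:
            resolved[name] = value  # value set directly on this pipeline
    return resolved


# Usage with made-up values.
protocol = ProtocolRef(name="example-raw-to-ids", version="v1.0.0")
org_settings = {"eln_base_url": "https://eln.example.com"}
pipeline_config = {
    "threshold": 0.5,                       # per-pipeline value
    "eln_url": SharedRef("eln_base_url"),   # centrally managed value
}

resolved = resolve_config(pipeline_config, org_settings)
```

Keeping the version as part of the protocol reference means a later protocol release does not silently change what an existing pipeline does.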
