Integration-Based Connectors

This topic provides information about integration-based connectors.

Connector Types

At the moment, the following integration-based connectors are available:

- HRB Cellario Connector

- SDC Connector

- IDBS E-Workbook Connector

- Solace Connector

Backup

Each integration-based connector comes with a SQLite database file. This file is uploaded periodically to the S3 backup bucket at the following location: `/{backupBucket}/{orgSlug}/datahub/{datahubId}/connector/{connectorId}/{sqlFileName}`

where:

- `backupBucket`: depends on the environment (e.g. on prod, it is `ts-platform-prod-backup`)

- `orgSlug`: your organization slug

- `datahubId`: ID of the data hub

- `connectorId`: ID of the connector

- `sqlFileName`: file name of the DB file
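As a rough illustration of how the location above is assembled, here is a minimal Python sketch. The function name `backup_location` and the sample values used in the usage note are hypothetical; only the path template comes from this page.

```python
def backup_location(backup_bucket: str, org_slug: str, datahub_id: str,
                    connector_id: str, sql_file_name: str) -> str:
    """Fill in the documented template:
    /{backupBucket}/{orgSlug}/datahub/{datahubId}/connector/{connectorId}/{sqlFileName}
    """
    parts = [backup_bucket, org_slug, "datahub", datahub_id,
             "connector", connector_id, sql_file_name]
    # Reject empty components so the result never contains "//".
    if any(not p for p in parts):
        raise ValueError("all path components must be non-empty")
    return "/" + "/".join(parts)
```

For example, `backup_location("ts-platform-prod-backup", "my-org", "<datahubId>", "<connectorId>", "<sqlFileName>")` yields the prod location for a given connector, with the placeholders replaced by your own values.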

The backup schedule is checked on every connector restart. The file is uploaded if either of the following is true:

- There is no backup at the target S3 location

- The backup file at the target location is older than 24 hours
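The two upload conditions can be expressed as a small decision function. This is only a sketch of the rule as described above, not the connector's actual implementation; the name `should_upload` and the use of `None` to mean "no backup exists" are assumptions made for illustration.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Threshold taken from the rule above: re-upload when the backup is older than 24 hours.
MAX_BACKUP_AGE = timedelta(hours=24)

def should_upload(last_backup_at: Optional[datetime], now: datetime) -> bool:
    """Return True when a new backup should be uploaded.

    last_backup_at is the modification time of the existing backup object,
    or None when there is no backup at the target S3 location.
    """
    if last_backup_at is None:
        return True
    return now - last_backup_at > MAX_BACKUP_AGE
```

Passing `now` explicitly (for example `datetime.now(timezone.utc)`) keeps the function easy to check against fixed timestamps.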

Automatic Restores

At the moment, there is no option to restore a connector automatically. However, this feature is planned for a future release.

Manual Restore

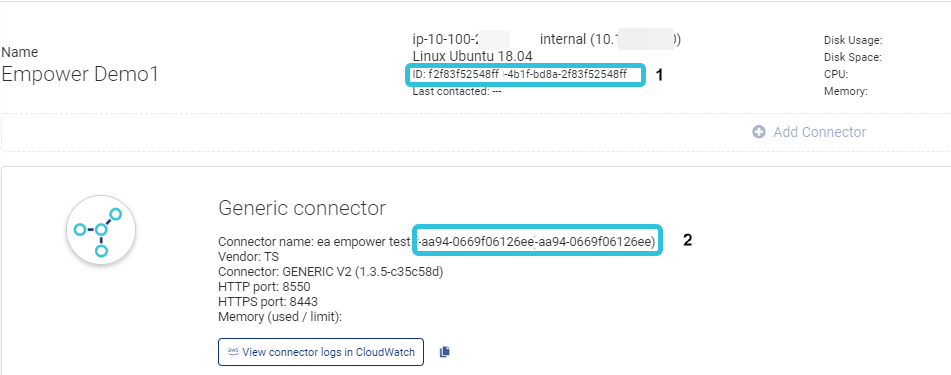

Manual restore is possible, but require several steps including manual SQLite file editing. Before proceeding with manual restore steps, it is important to get ID (UUID) of both data hub and integration/connector. ID values are marked in screenshot below (1 for data hub ID, 2 for connector ID):

ID Values

The same values can be obtained from the SQLite file, as explained in the restore steps.
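As a sketch of reading the IDs from the file, the Python snippet below relies on what the restore steps on this page say about the layout: the `sources` column of the `Integrations` table holds a JSON array of objects whose `integrationId` field is the data hub ID and whose `id` field is the connector ID. The function name `read_ids` is hypothetical, and any other detail of the schema is an assumption.

```python
import json
import sqlite3
from typing import List, Tuple

def read_ids(db_path: str) -> List[Tuple[str, str]]:
    """Return (data hub ID, connector ID) pairs found in Integrations.sources."""
    conn = sqlite3.connect(db_path)
    try:
        rows = conn.execute("SELECT sources FROM Integrations").fetchall()
    finally:
        conn.close()
    pairs = []
    for (sources,) in rows:
        # Assumption: sources is a JSON array of source objects.
        for src in json.loads(sources):
            pairs.append((src["integrationId"], src["id"]))
    return pairs
```

Run it against a copy of the downloaded backup file rather than against the live file on the hub.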

The restore steps are as follows:

- Download the SQLite file from the S3 folder

- If the restore is planned on another/new hub, create a hub in the platform

- Create a new connector with the same data (name, metadata, and tags). For the connection options, use an incorrect address (e.g. an incorrect port) to prevent data from loading immediately after the connector starts.

- Take the IDs of the new hub (if a new one was created) and the new connector

- Open the backed-up SQLite file in a tool that allows browsing the file and executing SQL (e.g. DB Browser for SQLite)

- In the SQLite DB tool, turn off foreign keys. This can be done either with the SQL command

      PRAGMA foreign_keys=off;

  or directly through the tool, if the tool supports that.

- Take the JSON value of the `sources` column of the `Integrations` table and of the `source` column of the `Events` table from the backed-up SQLite file. Both values should be equal.

  NOTE: The `source`/`sources` column contains the ID of the old hub (field `integrationId` of the JSON object) and the ID of the old connector (field `id` of the JSON object).

- Update the existing tables with the new IDs. Below is an example of the SQL:

      UPDATE Integrations
      SET id = '<NEW DATAHUB ID>',
          sources = json('[{"id":"<NEW CONNECTOR ID>","integrationId":"<NEW DATAHUB ID>","name":"milos test","type":"hrb-cellario","config":{"sourceType":"hrb-cellario"},"metadata":{},"tags":[]}]')
      WHERE id = '<OLD DATAHUB ID>';

      UPDATE Events
      SET integration = '<NEW DATAHUB ID>',
          source = json('{"id":"<NEW CONNECTOR ID>","integrationId":"<NEW DATAHUB ID>","name":"milos test","type":"hrb-cellario","config":{"sourceType":"hrb-cellario"},"metadata":{},"tags":[]}')
      WHERE integration = '<OLD DATAHUB ID>';

  NOTE: These source(s) values are only an example. Replace them with your own values from the backed-up SQLite file, with the hub and connector IDs replaced by the new ones.

- Turn foreign keys back on, either with the SQL command

      PRAGMA foreign_keys=on;

  or through the DB tool, if it supports that.

- Save the DB file

- Log in to the hub machine

- In a shell, using sudo or the root account, replace the SQLite file at `/var/datahub/<connectorId>/sqlite.db` with the newly updated SQLite file

- Go back to the platform and change the connector's connection settings to the correct values

- From the upper-right hub menu, select Update config

- From the upper-right hub menu, select Sync
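The database-editing part of the steps above (foreign keys off, swap the IDs in `Integrations` and `Events`, foreign keys back on) can also be scripted. The following is a minimal Python sketch, not an official tool. It assumes only the tables and columns that appear in the example SQL, assumes the file belongs to a single connector, and uses a hypothetical function name `rewrite_ids`. It reuses the existing JSON from the file, so the name, type, config, metadata, and tags stay as they were.

```python
import json
import sqlite3

def _swap(obj: dict, new_hub: str, new_connector: str) -> dict:
    """Replace the hub and connector IDs inside one source object."""
    obj["id"] = new_connector
    obj["integrationId"] = new_hub
    return obj

def rewrite_ids(db_path: str, old_hub: str, new_hub: str, new_connector: str) -> None:
    conn = sqlite3.connect(db_path)
    try:
        # Per-connection setting; it must be issued outside a transaction.
        conn.execute("PRAGMA foreign_keys=off")
        with conn:  # commits on success, rolls back on error
            row = conn.execute(
                "SELECT sources FROM Integrations WHERE id = ?", (old_hub,)
            ).fetchone()
            if row is None:
                raise ValueError("no Integrations row for the old data hub ID")
            sources = [_swap(s, new_hub, new_connector) for s in json.loads(row[0])]
            conn.execute(
                "UPDATE Integrations SET id = ?, sources = ? WHERE id = ?",
                (new_hub, json.dumps(sources, separators=(",", ":")), old_hub),
            )
            # Rewrite each distinct Events.source value once.
            distinct = conn.execute(
                "SELECT DISTINCT source FROM Events WHERE integration = ?", (old_hub,)
            ).fetchall()
            for (old_source,) in distinct:
                new_source = _swap(json.loads(old_source), new_hub, new_connector)
                conn.execute(
                    "UPDATE Events SET integration = ?, source = ? "
                    "WHERE integration = ? AND source = ?",
                    (new_hub, json.dumps(new_source, separators=(",", ":")),
                     old_hub, old_source),
                )
        conn.execute("PRAGMA foreign_keys=on")
    finally:
        conn.close()
```

Run it on the downloaded copy, for example `rewrite_ids("sqlite.db", "<OLD DATAHUB ID>", "<NEW DATAHUB ID>", "<NEW CONNECTOR ID>")`, and inspect the result in a DB tool before copying the file to the hub machine.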

