TDP v4.2.3 Release Notes

Release date: 23 April 2025

TetraScience has released its next version of the Tetra Data Platform (TDP), version 4.2.3. This release provides customers the option to configure Data Access Rules for specific, named users in single sign-on (SSO) TDP environments. It also provides users with custom roles that are assigned the Developer policy permissions to publish artifacts, including protocols for self-service pipelines (SSPs).

Here are the details for what’s new in TDP v4.2.3.

Security

TetraScience continually monitors and tests the TDP codebase to identify potential security issues. Various security updates are applied to the following areas on an ongoing basis:

- Operating systems

- Third-party libraries

Quality Management

TetraScience is committed to creating quality software. Software is developed and tested following the ISO 9001-certified TetraScience Quality Management System. This system ensures the quality and reliability of TetraScience software while maintaining data integrity and confidentiality.

New Functionality

New functionalities are features that weren’t previously available in the TDP.

- There is no new functionality in this release.

GxP Impact Assessment

All new TDP functionalities go through a GxP impact assessment to determine validation needs for GxP installations.

New Functionality items marked with an asterisk (*) address usability, supportability, or infrastructure issues, and do not affect Intended Use for validation purposes, per this assessment.

Enhancements and Bug Fixes do not generally affect Intended Use for validation purposes.

Items marked as either beta release or early adopter program (EAP) are not validated for GxP by TetraScience. However, customers can use these prerelease features and components in production if they perform their own validation.

Enhancements

Enhancements are modifications to existing functionality that improve performance or usability, but don't alter the function or intended use of the system.

TDP System Administration Enhancements

Configure Data Access Rules for Named Users in SSO Environments

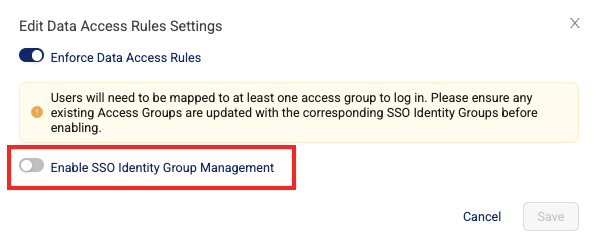

A new Enable SSO Identity Group Management setting gives customers the option to keep configuring Data Access Rules for specific, named users in single sign-on (SSO) TDP environments. The new setting appears on the Organizations Settings page in the Edit Data Access Rules Settings dialog.

Enable SSO Identity Group Management toggle

How It Works

When the Enable SSO Identity Group Management setting is off (the default setting), named users authenticate into the TDP through SSO (if SSO is configured). However, access group membership is still managed through the TDP, outside of the identity provider (IdP).

When the Enable SSO Identity Group Management setting is on, access group membership is managed through the identity providers (IdPs) mapped to each access group in its SSO IDENTITY GROUPS field.
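The two modes can be modeled as a small resolution function. This is a minimal sketch under stated assumptions, not a TDP API: the function and parameter names are hypothetical, and it assumes a user belongs to an access group when any of the user's IdP groups is mapped to that group.

```python
from typing import Dict, Set


def effective_access_groups(
    user: str,
    sso_group_management_enabled: bool,
    tdp_memberships: Dict[str, Set[str]],
    user_idp_groups: Set[str],
    sso_identity_groups: Dict[str, Set[str]],
) -> Set[str]:
    """Resolve a user's access groups under a hypothetical model of the setting.

    tdp_memberships: access group -> named users added to it in the TDP
    user_idp_groups: IdP groups the user belongs to at sign-in
    sso_identity_groups: access group -> IdP groups mapped to it (SSO IDENTITY GROUPS)
    """
    if not sso_group_management_enabled:
        # Off (default): the user may sign in through SSO, but membership
        # comes from the named users configured in the TDP.
        return {g for g, users in tdp_memberships.items() if user in users}
    # On: membership comes only from the IdP groups mapped to each access group.
    return {g for g, idp_groups in sso_identity_groups.items() if idp_groups & user_idp_groups}


# Hypothetical example data.
tdp_members = {"Chemistry": {"alice"}, "Biology": set()}
sso_mappings = {"Chemistry": set(), "Biology": {"bio-scientists"}}
off = effective_access_groups("alice", False, tdp_members, {"bio-scientists"}, sso_mappings)
on = effective_access_groups("alice", True, tdp_members, {"bio-scientists"}, sso_mappings)
print(off)  # {'Chemistry'}
print(on)   # {'Biology'}
```

In this example, the user loses access to the Chemistry group when the setting is turned on, because no IdP group is mapped to it.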

For more information, see Configure Data Access Rules for an Organization.

IMPORTANT: For Customers That Have SSO Configured and Data Access Rules Activated Before Upgrading to TDP v4.2.3 or Higher

If your tenant has SSO configured and data access rules activated before upgrading to TDP v4.2.3 or higher, first configure SSO identity groups for each of your access groups that have at least one user in the group. Then, turn on the Enable SSO Identity Group Management setting. This prevents locking out existing SSO users.
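Before turning on the setting, a pre-check could list the access groups that still need an SSO identity group. The following is a minimal sketch that assumes you have already collected each access group's members and mapped IdP groups into dictionaries; how you gather that data is left open, and the function name is hypothetical.

```python
from typing import Dict, List, Set


def groups_needing_sso_mapping(
    members: Dict[str, Set[str]],
    sso_identity_groups: Dict[str, Set[str]],
) -> List[str]:
    """Return access groups that have at least one user but no SSO identity group.

    members: access group name -> users currently in the group
    sso_identity_groups: access group name -> IdP groups mapped to it
    """
    return sorted(
        group
        for group, users in members.items()
        # Empty groups can't lock anyone out, so only groups with users count.
        if users and not sso_identity_groups.get(group)
    )


# Hypothetical example data.
members = {"Chemistry": {"alice"}, "Biology": {"bob"}, "Archive": set()}
mappings = {"Biology": {"bio-scientists"}}
print(groups_needing_sso_mapping(members, mappings))  # ['Chemistry']
```

Under these assumptions, it's safe to turn on the setting only when the returned list is empty.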

Previous Behavior (TDP v4.2.2)

In TDP v4.2.2, customers that had SSO configured could assign multiple IdPs to specific access groups by using the new SSO IDENTITY GROUPS field when creating or editing an access group. However, after SSO identity groups were assigned to access groups and Data Access Rules were activated for an organization, access group membership could only be managed through the mapped, third-party IdPs.

Users Assigned a Developer Policy Can Now Publish Artifacts

The Developer policy now provides permissions to publish artifacts, including protocols for self-service pipelines (SSPs), to the private namespace. In TDP v4.2.2 and earlier, only the Administrator role or custom roles with either Organization Admin or Tenant Admin policy permissions could publish artifacts.
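The change in who can publish artifacts can be summarized as a permission check. This is an illustrative sketch only: the role and policy names come from these release notes, but the function is hypothetical and doesn't reflect how the TDP evaluates permissions internally.

```python
from typing import Iterable, Tuple

# Policies that allowed publishing artifacts before TDP v4.2.3.
ADMIN_POLICIES = frozenset({"Organization Admin", "Tenant Admin"})


def can_publish_artifacts(
    role: str, policies: Iterable[str], tdp_version: Tuple[int, int, int]
) -> bool:
    """Return True if a role may publish artifacts to the private namespace."""
    if role == "Administrator":
        # The built-in Administrator role could always publish.
        return True
    allowed = set(ADMIN_POLICIES)
    if tdp_version >= (4, 2, 3):
        # Starting in TDP v4.2.3, the Developer policy also grants publish permissions.
        allowed.add("Developer")
    return any(policy in allowed for policy in policies)


# "Pipeline Author" is a hypothetical custom role name.
print(can_publish_artifacts("Pipeline Author", ["Developer"], (4, 2, 2)))  # False
print(can_publish_artifacts("Pipeline Author", ["Developer"], (4, 2, 3)))  # True
```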

For more information, see Developer Policy Permissions.

Infrastructure Updates

There are no infrastructure changes in this release.

Bug Fixes

There are no bug fixes in this release.

Deprecated Features

There are no new deprecated features in this release.

For more information about TDP deprecations, see Tetra Product Deprecation Notices.

Known and Possible Issues

The following are known and possible issues for TDP v4.2.3.

Data Harmonization and Engineering Known Issues

- On the Pipeline Edit page, in the Retry Behavior field, the Always retry 3 times (default) value sometimes appears as a null value.

- File statuses on the File Processing page can sometimes display differently than the statuses shown for the same files on the Pipelines page in the Bulk Processing Job Details dialog. For example, a file with an Awaiting Processing status in the Bulk Processing Job Details dialog can also show a Processing status on the File Processing page. This discrepancy occurs because each file can have different statuses for different backend services, which can then be surfaced in the TDP at different levels of granularity. A fix for this issue is in development and testing.

- Logs don’t appear for pipeline workflows that are configured with retry settings until the workflows complete.

- Files with more than 20 associated documents (high-lineage files) do not have their lineage indexed by default. To identify high-lineage files and reindex their lineage, customers must contact their CSM to run a separate reconciliation job that overrides the default lineage indexing limit.

- OpenSearch index mapping conflicts can occur when a client or private namespace creates a backwards-incompatible data type change. For example, if `doc.myField` is a string in the common IDS and an object in the non-common IDS, it causes an index mapping conflict, because the common and non-common namespace documents share an index. When these mapping conflicts occur, the files aren’t searchable through the TDP UI or API endpoints. As a workaround, customers can either create distinct, non-overlapping version numbers for their non-common IDSs or update the names of those IDSs.

- File reprocessing jobs can sometimes show fewer scanned items than expected when either a health check or out-of-memory (OOM) error occurs, without indicating any errors in the UI. These errors are still logged in Amazon CloudWatch Logs. A fix for this issue is in development and testing.

- File reprocessing jobs can sometimes incorrectly show that a job finished with failures when the job actually retried those failures and then successfully reprocessed them. A fix for this issue is in development and testing.

- File edit and update operations are not supported on metadata and label names (keys) that include special characters. Metadata, tag, and label values can include special characters, but it’s recommended that customers use the approved special characters only. For more information, see Attributes.

- The File Details page sometimes displays an Unknown status for workflows that are either in a Pending or Running status. Output files that are generated by intermediate files within a task script sometimes show an Unknown status, too.
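The OpenSearch index mapping conflict described in this list comes down to the same field path having different data types in two documents that share an index. The following is a minimal sketch of a local pre-check that compares two sample IDS documents. It uses coarse, hypothetical type names and is not OpenSearch's actual mapping logic (for example, it ignores dynamic mapping rules such as date detection).

```python
from typing import Any, Dict, Tuple


def field_types(doc: Any, prefix: str = "") -> Dict[str, str]:
    """Map each dotted field path in a JSON-like document to a coarse type name."""
    types: Dict[str, str] = {}
    if isinstance(doc, dict):
        if prefix:
            types[prefix] = "object"
        for key, value in doc.items():
            path = f"{prefix}.{key}" if prefix else key
            types.update(field_types(value, path))
    elif isinstance(doc, list):
        # Treat an array as a collection of its element type, as OpenSearch does.
        for item in doc:
            types.update(field_types(item, prefix))
    elif isinstance(doc, bool):
        types[prefix] = "boolean"
    elif isinstance(doc, (int, float)):
        types[prefix] = "number"
    elif isinstance(doc, str):
        types[prefix] = "string"
    # None (null) values carry no type information and are skipped.
    return types


def mapping_conflicts(common_doc: Any, non_common_doc: Any) -> Dict[str, Tuple[str, str]]:
    """Return field paths whose coarse types differ between the two documents."""
    a, b = field_types(common_doc), field_types(non_common_doc)
    return {path: (a[path], b[path]) for path in sorted(a.keys() & b.keys()) if a[path] != b[path]}


# Hypothetical documents mirroring the doc.myField example.
common = {"doc": {"myField": "abc"}}
non_common = {"doc": {"myField": {"value": 1}}}
print(mapping_conflicts(common, non_common))  # {'doc.myField': ('string', 'object')}
```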

Data Access and Management Known Issues

- When customers upload a new file on the Search page by using the Upload File button, the page doesn’t automatically update to include the new file in the search results. As a workaround, customers should refresh the Search page in their web browser after selecting the Upload File button. A fix for this issue is in development and testing and is scheduled for a future TDP release.

- If an IDS file has a field that ends with a backslash (`\`) escape character, the file can’t be uploaded to the Data Lakehouse. These file ingestion failures happen because escape characters currently trigger the Lakehouse data ingestion rules. The errors are recorded on the Files Health Monitoring dashboard in the File Failures section. There is no way to reconcile these file failures at this time. A fix for this issue is in development and testing and is scheduled for a future release.

- Values that SQL queries return as empty strings on SQL tables can sometimes be returned as `Null` values when the same queries run on Lakehouse tables. As a workaround, customers taking part in the Data Lakehouse Architecture EAP should update any SQL queries that specifically look for empty strings to instead look for both empty string and `Null` values.

- The Tetra FlowJo Data App doesn’t load consistently in all customer environments.

- Query DSL queries run on indices in an OpenSearch cluster can return partial search results if the query puts too much compute load on the system. This behavior occurs because the OpenSearch `search.default_allow_partial_results` setting is configured as `true` by default. To help avoid this issue, customers should use targeted search indexing best practices to reduce query compute loads. A way to improve visibility into when partial search results are returned is currently in development and testing and scheduled for a future TDP release.

- Text within the content of a RAW file that contains escape (`\`) or other special characters may not always index completely in OpenSearch. A fix for this issue is in development and testing, and is scheduled for an upcoming release.

- If a data access rule is configured as [label] exists > OR > [same label] does not exist, then no file with the defined label is accessible to the Access Group. A fix for this issue is in development and testing and scheduled for a future TDP release.

- File events aren’t created for temporary (TMP) files, so they’re not searchable. This behavior can also result in an Unknown state for Workflow and Pipeline views on the File Details page.

- When customers search for labels in the TDP UI’s search bar that include either @ symbols or some Unicode character combinations, not all results are always returned.

- The File Details page displays a 404 error if a file version doesn't comply with the configured Data Access Rules for the user.
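The SQL workaround in this list for Data Lakehouse Architecture EAP customers amounts to widening an empty-string predicate so that it also matches `NULL`. The following is a minimal sketch that uses an in-memory SQLite database as a stand-in for the Lakehouse SQL engine; the table and column names are hypothetical. The `(column = '' OR column IS NULL)` predicate is standard SQL, and `COALESCE(column, '') = ''` is an equivalent alternative.

```python
import sqlite3

# Hypothetical table and column names, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE samples (sample_id TEXT, operator TEXT)")
conn.executemany(
    "INSERT INTO samples VALUES (?, ?)",
    [("s1", ""), ("s2", None), ("s3", "alice")],
)

# Original query: matches only empty strings, so it misses rows returned as NULL.
empty_only = "SELECT sample_id FROM samples WHERE operator = '' ORDER BY sample_id"

# Workaround: match both empty strings and NULL values.
empty_or_null = (
    "SELECT sample_id FROM samples "
    "WHERE (operator = '' OR operator IS NULL) ORDER BY sample_id"
)

empty_only_ids = [row[0] for row in conn.execute(empty_only)]
empty_or_null_ids = [row[0] for row in conn.execute(empty_or_null)]
print(empty_only_ids)     # ['s1']
print(empty_or_null_ids)  # ['s1', 's2']
conn.close()
```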

TDP System Administration Known Issues

- If an Organization Admin tries to generate SQL credentials for a TDP login user that doesn’t have SQL Search permissions, one of the following error messages appears:

- Failed to generate SQL credentials for {name}

- Failed to rotate SQL access credentials!

Neither of these error messages indicates what’s causing the error. To resolve the issue, customers must apply the SQL User policy to the TDP user’s custom role. A fix for this issue is planned for a future release.

- The latest Connector versions incorrectly log the following errors in Amazon CloudWatch Logs:

Error loading organization certificates. Initialization will continue, but untrusted SSL connections will fail.

Client is not initialized - certificate array will be empty

These organization certificate errors have no impact and shouldn’t be logged as errors. A fix for this issue is currently in development and testing, and is scheduled for an upcoming release. There is no workaround to prevent Connectors from producing these log messages. To filter out these errors when viewing logs, customers can apply the following CloudWatch Logs Insights query filters when querying log groups. (Issue #2818)

CloudWatch Logs Insights Query Example for Filtering Organization Certificate Errors

```
fields @timestamp, @message, @logStream, @log
| filter message != 'Error loading organization certificates. Initialization will continue, but untrusted SSL connections will fail.'
| filter message != 'Client is not initialized - certificate array will be empty'
| sort @timestamp desc
| limit 20
```

- If a reconciliation job, bulk edit of labels job, or bulk pipeline processing job is canceled, then the job’s ToDo, Failed, and Completed counts can sometimes display incorrectly.
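If you export Connector log messages and review them outside of CloudWatch Logs Insights, the same two organization certificate filters from the query example in this section can be applied locally. The following is a minimal sketch that assumes you already have the messages as a list of strings; the function name and the sample error message are hypothetical.

```python
from typing import Iterable, List

# Connector messages that these release notes describe as harmless.
BENIGN_CERT_MESSAGES = frozenset({
    "Error loading organization certificates. Initialization will continue, "
    "but untrusted SSL connections will fail.",
    "Client is not initialized - certificate array will be empty",
})


def drop_benign_cert_errors(messages: Iterable[str]) -> List[str]:
    """Return messages that don't exactly match a known certificate error.

    Surrounding whitespace (such as a trailing newline from an exported file)
    is ignored when comparing; otherwise the match is exact, like the != filters.
    """
    return [m for m in messages if m.strip() not in BENIGN_CERT_MESSAGES]


messages = [
    "Error loading organization certificates. Initialization will continue, "
    "but untrusted SSL connections will fail.",
    "Client is not initialized - certificate array will be empty\n",
    "Hypothetical example error: upload timed out",
]
print(drop_benign_cert_errors(messages))  # ['Hypothetical example error: upload timed out']
```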

Upgrade Considerations

During the upgrade, there might be a brief downtime when users won't be able to access the TDP user interface and APIs.

After the upgrade, the TetraScience team verifies that the platform infrastructure is working as expected through a combination of manual and automated tests. If any failures are detected, the issues are immediately addressed, or the release can be rolled back. Customers can also verify that TDP search functionality continues to return expected results, and that their workflows continue to run as expected.

For more information about the release schedule, including the GxP release schedule and timelines, see the Product Release Schedule.

For more details on upgrade timing, customers should contact their CSM.

Other Release Notes

To view other TDP release notes, see Tetra Data Platform Release Notes.
