Use Third-Party Tools to Connect to Athena SQL Tables

Amazon Athena is an interactive query service that allows you to analyze data in the Tetra Data Lake or Data Lakehouse using standard SQL. To transform and run SQL queries on your Tetra Data using third-party tools, you must first connect the tool to Amazon Athena by downloading, installing, and configuring a Java Database Connectivity (JDBC) or Open Database Connectivity (ODBC) driver.

For more information, see Connect to Amazon Athena with ODBC and JDBC drivers in the AWS Documentation.

IMPORTANT

If you're using a third-party tool to query Athena tables, you must declare which database that you want to connect to up front.

To declare your database up front, make sure that you use the table names as they appear in the TDP. If you don't declare the database up front, then you must add the database name in your query in the following format:

org_slug.tablename(for example:demo_uat.lcuv_empower_v2_injection).

NOTE FOR CUSTOMER-HOSTED DEPLOYMENTS

For customer-hosted Tetra Data Platform (TDP) deployments, keep in mind the following:

- SQL credentials aren't provided on the SQL Access page if the deployment parameter

AthenaCreateIamUseris set tofalse. Instead, you'll need to provide the required SQL credentials for the JDBC or ODBC driver connection through an AWS Identity and Access Management (IAM) user or role that's assigned the following IAM policy:ts-platform-<awsRegion>-production-<orgSlug>-policy.- Using the Amazon Athena JDBC or ODBC driver will result in additional costs in your AWS account, because the driver runs Amazon Athena queries. Query costs depend on the amount of data stored in your environment. For more information, see Amazon Athena Pricing in the AWS documentation.

What Driver Should I Use?

If you're connecting to a Java-based application, such as Tableau or Java Spring, it's recommended that you use a JDBC driver to help improve query performance. For applications that don't use Java, such as JMP Statistical Software, use an ODBC driver.

For installation instructions, see the following in the AWS documentation:

For more information about setting up an ODBC driver, including guidance on how to test the connection, see Amazon Athena ODBC Driver Configuration and SQL Query Test in the TetraConnect Hub. For access, see Access the TetraConnect Hub

NOTE

For JDBC drivers, make sure that you select the driver that works with your current Java version (for example, JDK 8.0 or JDK 9.0).

SQL Credentials

When you configure the JDBC or ODBC driver in either a custom application or a third-party SQL client, you must enter the following SQL Credentials from the TDP as connection parameters.

To get your organization's org-level SQL credentials as a system administrator, see Get Org-Level Credentials to Query SQL Tables in Third-Party Applications.

To get SQL credentials as a non-Admin user, see Get Credentials to Query SQL Tables in Third-Party Applications.

JDBC Driver Connection Parameter Requirements

For the JDBC driver's connection parameters, you must enter the following:

- For User, enter your TDP ACCESS KEY Athena credential value.

- For Password, enter your TDP SECRET KEY Athena credential value.

- For SessionToken, leave the field blank.

- For Output Location, enter your S3OUTPUTLOCATION Athena credential value.

- For Workgroup, enter your organization's slug (the same as your

<orgSlug>value).

IMPORTANT

SQL queries fail if the

WorkGroupconnection parameter isn't configured as a validorgSlugvalue. You must enter your org slug as the workgroup in which queries will run, even though it's listed as an optional parameter in the AWS documentation.

For more information about other JDBC driver connection parameters that aren't required, see Amazon Athena JDBC 3.x connection parameters in the AWS documentation.

ODBC Driver Connection Parameter Requirements

For the ODBC driver's connection parameters, you must enter the following:

- For AuthenticationType, enter IAM Credentials.

- For User ID, enter your TDP ACCESS KEY Athena credential value.

- For Password, enter your TDP SECRET KEY Athena credential value.

- For SessionToken, leave the field blank.

- For S3 Output Location, enter your S3OUTPUTLOCATION Athena credential value.

- For Workgroup, enter your organization's slug (the same as your

<orgSlug>value).

IMPORTANT

SQL queries fail if the

WorkGroupconnection parameter isn't configured as a validorgSlugvalue. You must enter your org slug as the workgroup in which queries will run, even though it's listed as an optional parameter in the AWS documentation.

For more information about other ODBC driver connection parameters that aren't required, see Athena ODBC 2.x connection parameters in the AWS documentation.

Example Connection Types

The following are example connection setups for common third-party analytics tools:

Security

Every organization on the TDP is automatically provisioned with a separate AWS KMS (Key Management Service) Key. The query result is encrypted using SSE_KMS (The driver uses server-side encryption with an AWS KMS-management Key).

The JDBC and ODBC driver automatically applies Secure Sockets Layer (SSL)/Transport Layer Security (TLS) encryption to all connections. SSL/TLS encryption protects data and credentials when they are transferred over the network, and provides stronger security than authentication alone.

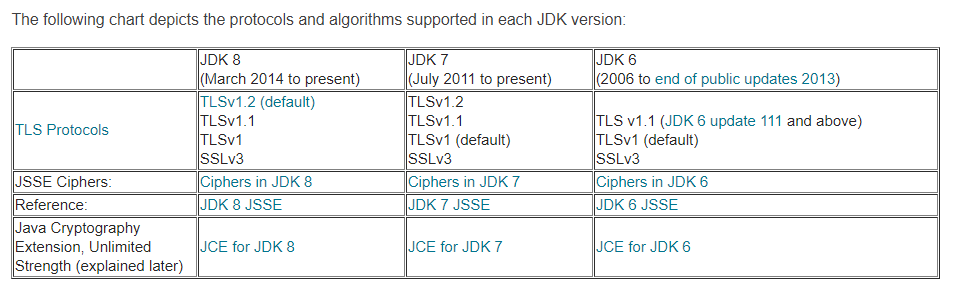

The TLS version that the JDBC driver supports depends on which Java virtual machine (JVM) version is installed. The TLS version used for the connections is the latest version that is supported by both the driver and the server.

Supported Protocols and Algorithms

Reference: Oracle Blog: Diagnosing TLS, SSL, and HTTPS

Learn More

For more information and best practices, see Data Analytics in the TetraConnect Hub. To request access, see Access the TetraConnect Hub.

Documentation Feedback

Do you have questions about our documentation or suggestions for how we can improve it? Start a discussion in TetraConnect Hub. For access, see Access the TetraConnect Hub.

NOTE

Feedback isn't part of the official TetraScience product documentation. TetraScience doesn't warrant or make any guarantees about the feedback provided, including its accuracy, relevance, or reliability. All feedback is subject to the terms set forth in the TetraConnect Hub Community Guidelines.

Updated 3 days ago