Waters Empower Data Science Link (EDSL)

The TetraScience Waters Empower Data Science Link (EDSL) is now available on the Tetra Data Platform (TDP). EDSL is compatible with Empower 3 Service Release 2 or higher.

EDSL extracts data from the Waters Empower 3 Acquisition Server to produce continuous, high-quality chromatography analytics that scientists can use to improve quality control, method development, performance and distribution, and other R&D tasks. Specific use cases include:

- Instrument Utilization

- SOP Adherence & Data QC

- Shelf life and Stability

- System Suitability

- Column Performance

- Chromatogram Overlay

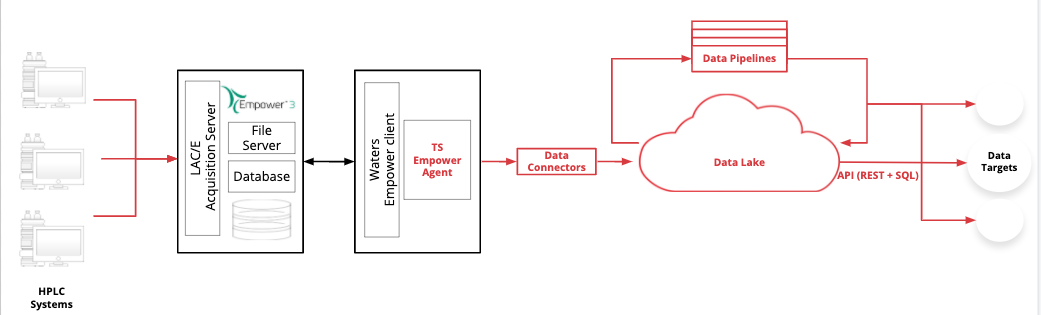

Waters EDSL High-Level Overview

EDSL Components

The EDSL consists of several components:

- One pipeline that converts RAW files to IDS to harmonize the data

- One Tetra Empower Agent per Waters Empower Acquisition Server

- A subset of TDP features that are essential for EDSL

EDSL also includes access to a REST API and a JDBC connection, which allow scientists to import data into tools such as TIBCO Spotfire, Tableau, RStudio, and Jupyter.

Operations

HPLC systems send their data to the Waters Empower Acquisition Server. The Waters Empower Client retrieves data from the Acquisition Server and interfaces with the Tetra Empower Agent, which is a lightweight, Windows-based application that extracts data based on a single injection (run).

EDSL Diagram

The Tetra Empower Agent can be configured to specify:

- which project(s) to extract data from,

- when and how often data are extracted,

- batch size,

- whether to include injection data results that have not been signed off,

- whether to auto-detect an injection change and automatically generate a new RAW file for upload to the data lake,

- where to store the data output,

- whether to automatically upload the data to the data lake,

- how often to retry processing if there is a failure.
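One way to picture the options above is as a single settings object. The following is a minimal Python sketch, not the Agent's actual configuration format: the `AgentSettings` class, every field name, and every default value are hypothetical stand-ins for the corresponding options in the list.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AgentSettings:
    """Hypothetical model of the Agent options listed above (not a real Agent API)."""

    projects: List[str] = field(default_factory=list)  # project(s) to extract data from
    extraction_interval_minutes: int = 60    # how often data are extracted
    batch_size: int = 10                     # batch size
    include_unsigned_results: bool = False   # include results that are not signed off
    detect_injection_changes: bool = True    # generate a new RAW file when an injection changes
    output_dir: str = "agent-output"         # where to store the data output
    auto_upload: bool = True                 # upload to the data lake automatically
    retry_interval_minutes: int = 15         # how often to retry after a failure

    def validate(self) -> List[str]:
        """Return a list of human-readable problems; an empty list means the settings look sane."""
        problems: List[str] = []
        if not self.projects:
            problems.append("select at least one project")
        for name in ("extraction_interval_minutes", "batch_size", "retry_interval_minutes"):
            if getattr(self, name) <= 0:
                problems.append(f"{name} must be positive")
        return problems


# Example values are made up for illustration.
settings = AgentSettings(projects=["Stability_Study"], batch_size=0)
print(settings.validate())
```

Collecting all problems in a list, rather than raising on the first one, mirrors how a settings screen would typically report every invalid field at once.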

Once these options have been configured and the connections have been established, a copy of the Empower injection data associated with a project is extracted from the Waters Empower 3 Application Server as specified in the Tetra Empower Agent configuration settings. The data is then securely uploaded to the data lake using one of the Tetra Data options and converted to a standardized JSON format by a pipeline that transforms the data to conform to the Empower Intermediate Data Schema (IDS).

You can view the status of the extraction, processing, and file uploads from the Tetra Empower Agent Summary window; you can also track and address errors there.
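To illustrate the kind of harmonization such a conversion performs, here is a minimal Python sketch. It is not the TetraScience pipeline and does not reproduce the real Empower IDS: the input keys (`SampleName`, `InjectionId`, `InjVol`, `Peaks`), the output layout, the units, and the sample values are all invented for illustration.

```python
import json
from typing import Any, Dict


def to_standardized(raw: Dict[str, Any]) -> Dict[str, Any]:
    """Map a vendor-style injection record to a consistent, typed layout (hypothetical schema)."""
    return {
        "sample": {"name": raw["SampleName"].strip()},
        "injection": {
            "id": int(raw["InjectionId"]),
            # Strings become numbers, and the (assumed) unit is stated explicitly.
            "volume": {"value": float(raw["InjVol"]), "unit": "microliter"},
        },
        "peaks": [
            {
                "name": peak.get("Name") or None,
                "retention_time": {"value": float(peak["RT"]), "unit": "minute"},
                "area": float(peak["Area"]),
            }
            for peak in raw.get("Peaks", [])
        ],
    }


# Made-up RAW-style record; real Empower exports look different.
raw_record = {
    "SampleName": " Std_01 ",
    "InjectionId": "1042",
    "InjVol": "10.0",
    "Peaks": [{"Name": "Caffeine", "RT": "3.21", "Area": "15234.5"}],
}
ids_like = to_standardized(raw_record)
print(json.dumps(ids_like, indent=2))
```

The point of the sketch is the shape of the transformation: free-form strings become typed values with explicit units, so downstream tools can rely on a predictable structure.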

After the files are uploaded to the data lake, you can log in to the TDP to view details about the schema, as well as operational details. You can search the JSON files or the final results using the TDP’s web interface. You can preview content or download file information.

You can also use an API or JDBC to connect to the TDP and get information about uploaded files. File data can be visualized and analyzed using standard business intelligence and data science tools such as RStudio, TIBCO Spotfire, Tableau, Jupyter, and more.
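As an example of downstream analysis, the sketch below computes a simple instrument utilization figure in Python from injection records that have already been retrieved, for instance through the API or a JDBC query. The retrieval step is deliberately left out, and the record fields (`system`, `run_minutes`) and sample values are hypothetical; real field names come from the Empower IDS.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping


def utilization(records: Iterable[Mapping[str, object]], window_hours: float) -> Dict[str, float]:
    """Return, per system, the fraction of the time window spent running injections."""
    if window_hours <= 0:
        raise ValueError("window_hours must be positive")
    minutes_per_system: Dict[str, float] = defaultdict(float)
    for record in records:
        minutes_per_system[str(record["system"])] += float(record["run_minutes"])
    window_minutes = window_hours * 60
    return {system: minutes / window_minutes for system, minutes in minutes_per_system.items()}


# Made-up records standing in for rows returned by a query.
records = [
    {"system": "HPLC-01", "run_minutes": 30},
    {"system": "HPLC-01", "run_minutes": 90},
    {"system": "HPLC-02", "run_minutes": 60},
]
result = utilization(records, window_hours=8)
for system, share in sorted(result.items()):
    print(f"{system}: {share:.1%}")
```

The same aggregation could be expressed as a SQL `GROUP BY` over a JDBC connection or as a dashboard calculation in a BI tool; the Python version is only meant to show the logic.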

Terminology

Terms associated with EDSL appear in the following table.

| Term | Definition |
|---|---|
| Tetra Empower Agent | A high-performance, Microsoft Windows-based application that extracts injection data from Waters Empower 3 and uploads it to the Tetra Data Lake. The Tetra Empower Agent has an interactive user interface that allows you to perform the following tasks: |
| Empower 3 | Waters' compliance-ready Chromatography Data Software. |
| Empower Acquisition Server | Server that performs instrument control, data acquisition, remote processing, and data buffering activities while providing enhanced raw data security, robust remote access to instruments, and enhanced system performance. The Tetra Empower Agent does not interact with the Acquisition Server directly; it interacts with the Empower Client instead. |
| Empower Client | Software used to monitor data acquisition, access and process data, use methods, and process results. Empower Clients connect to the Empower Acquisition Server. The Tetra Empower Agent software is installed on an Empower Client host machine and interfaces with the client to get copies of the data for processing. |
| Empower Toolkit | A simple and powerful means of programmatically accessing key data structures and functionality in the Empower software. Knowledge of the Empower Toolkit is not needed to use the EDSL. |
| Tetra Data Hub and Data Connector | Applications that facilitate secure data transfer from the Tetra Empower Agent to the Tetra Data Lake. |
| Tetra Data Lake | Centralized repository that is designed to store both unstructured and structured data. The Tetra Data Lake is built on Amazon S3 (AWS) and leverages the features of that platform. |
| Tetra Data Platform | Provides a centralized place where you can process and view scientific data from instruments, CRO/CMOs, and software systems in a single, cloud-based repository. It also provides a flexible and powerful data pipeline system that you can use to transform your RAW files to Intermediate Data Schema (IDS) format. |
| Tetra Data Pipeline | A group of programs that allow you to configure a set of actions to happen automatically each time new data is ingested into the data lake. Files are processed by pipelines if they meet the criteria you specify. A pipeline consists of a trigger, a protocol, a notification, and details/settings. |
| Intermediate Data Schema (IDS) | Designed by TetraScience in collaboration with instrument manufacturers, scientists, and informatics teams from life sciences companies, IDSs harmonize different data sets such as instrument data, CRO assay data, and software data. IDSs and pipelines do the work of extracting and transforming data from various sources into a predictable, consistent, and vendor-agnostic schema so that bench scientists, data scientists, and others can easily consume data in applications such as TIBCO Spotfire or Tableau. Searches and aggregations are prepared automatically, which makes it easy to feed the data into visualization and analysis software. |
| Generic Data Connector (GDC) | Software running on the Data Hub machine in a Docker container. The GDC exposes RESTful APIs that Tetra Agents use to upload data and logs, and it checks the Agent's authentication and authorization to transmit Agent data and logs to the Data Lake. It is called "generic" because many different kinds of Agents can use it, for example the UNICORN Agent, Empower Agent, and File Log Agent. |
| User Defined Integration (UDI) | Software running on the TDP. Like the GDC, the UDI exposes RESTful APIs that Tetra Agents use to upload data and logs, and it checks the Agent's authentication and authorization to transmit Agent data and logs to the Data Lake. The UDI requires internet access to work. The GDC does not, but it should run on a Data Hub that is installed on a machine that does have internet access. |
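The trigger and protocol parts of the Tetra Data Pipeline entry above can be sketched in a few lines of Python. This is an illustration of the idea only, not the TDP pipeline API: the `Pipeline` class, the metadata keys (`path`, `source_type`, `category`), and the protocol function are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional

FileMeta = Dict[str, str]


@dataclass
class Pipeline:
    """Hypothetical pipeline: a trigger decides whether a file qualifies, a protocol processes it."""

    name: str
    trigger: Callable[[FileMeta], bool]
    protocol: Callable[[FileMeta], str]

    def handle(self, file_meta: FileMeta) -> Optional[str]:
        # Files that do not meet the trigger criteria are skipped.
        if not self.trigger(file_meta):
            return None
        return self.protocol(file_meta)


# Assumed criteria: only RAW files that came from an Empower source are processed.
empower_raw_to_ids = Pipeline(
    name="empower-raw-to-ids",
    trigger=lambda m: m.get("source_type") == "empower" and m.get("category") == "RAW",
    protocol=lambda m: f"converted {m['path']} to IDS",
)

# Made-up file metadata for two newly ingested files.
files = [
    {"path": "a.json", "source_type": "empower", "category": "RAW"},
    {"path": "b.csv", "source_type": "other", "category": "RAW"},
]
outcomes = [empower_raw_to_ids.handle(f) for f in files]
print(outcomes)
```

Notification and settings are omitted here to keep the sketch short; in this model they would be further fields on the same object.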
