(Version 3.0.x and earlier) Searching the Tetra Data Lake

NOTE: This article explains how to use the Search User Interface in the Tetra Data Platform, versions 3.0.x and earlier. If you are using the Tetra Data Platform, version 3.1.x, see this topic instead.

Search in the TetraScience Data Lake is powered by Elasticsearch. You can access your data either through the TetraScience web API or through the search UI.

The search UI provides an easy way to search your data quickly. You can search by entering text in the search bar, and you can refine your search using search filters.

Search Bar

You can type any text in the search bar, and the matching results are displayed. Behind the scenes, the search bar uses Elasticsearch's query_string query, with some minor adjustments.
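For readers who have not seen a query_string query before, the sketch below shows the general shape of such a request body in Elasticsearch's query DSL. It is illustrative only: the search bar builds the query for you, the platform's own adjustments are not reproduced here, and the variable names are assumptions.

```python
import json

# Text as it might be typed into the search bar (example value from this article).
search_text = 'data.sample.id:"fake-id1"'

# General shape of an Elasticsearch query_string query body.
# Assumption: the platform's own adjustments to this query are not shown.
body = {"query": {"query_string": {"query": search_text}}}

print(json.dumps(body, indent=2))
```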

If you are not familiar with Elasticsearch, there is no need to study its documentation first: the table below provides a few examples that should cover the most common searches.

If you require more advanced searches, refer to the Amazon Elasticsearch documentation.

How do I find the field I want to search for?

Select a file on the search file page and click "Preview" in the lower right corner. The file is displayed in JSON format. If the field looks like this: { data: { sample: { id: "fake-id1" } } }, then type data.sample.id:"fake-id1" (including the quotes) into the search bar.
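The mapping from a JSON preview to a dotted field path can be sketched as follows. This is a minimal illustration, not platform code: the function name `flatten_fields` and the sample document are assumptions, and lists inside the JSON are treated as plain values.

```python
def flatten_fields(doc, prefix=""):
    """Return a dict that maps dotted field paths to their leaf values."""
    fields = {}
    for key, value in doc.items():
        path = f"{prefix}{key}"
        if isinstance(value, dict):
            # Descend into nested objects, extending the dotted path.
            fields.update(flatten_fields(value, prefix=path + "."))
        else:
            fields[path] = value
    return fields


# Hypothetical preview content, modeled on the example in this article.
preview = {"data": {"sample": {"id": "fake-id1"}}}

for path, value in flatten_fields(preview).items():
    print(f'{path}:"{value}"')  # data.sample.id:"fake-id1"
```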

How to Search?

You can start searching right away by entering text in the search bar. Just remember:

If you are searching without specifying a field, the value is case-insensitive.

If you are searching for a specific field, the value you provide must be the exact value and it's case-sensitive.

| Search Text | Search every doc that... | Notes |
|---|---|---|
| word1 | | case-insensitive |
| word1 word2 | | case-insensitive |
| word1 OR word2 | | case-insensitive |
| word1 AND word2 | | case-insensitive |
| word1 AND NOT word2 | | case-insensitive |
| "word1 word2" | | case-insensitive. Note that the behavior changes if a specific field is provided |
| data.sample.id: id1 | field "data.sample.id" is exactly "id1" | case-sensitive |
| metadata.compound\ id : TS14224012 | field "metadata.compound id" is exactly TS14224012 | Because "compound id" has a space in its name, the space must be escaped ("\ ") |
| data.sample.id:"fake-id1" | field "data.sample.id" is exactly "fake-id1" | case-sensitive |
| source.type:"empower" AND data.sample.id:id1 | "source.type" is exactly "empower" and sample ID is exactly "id1" | case-sensitive |
| | where the field "source.type" has any non-null value | |
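When field searches like the ones in the table are assembled programmatically, the escaping and quoting rules are easy to get wrong. The helper below is a minimal sketch under stated assumptions: the names `field_query` and `all_of` are hypothetical, values are always wrapped in quotes, and only spaces in field names are escaped.

```python
def escape_field_name(name):
    """Escape spaces in a field name with a leading backslash."""
    return name.replace(" ", "\\ ")


def field_query(field, value):
    """Build an exact-match clause such as data.sample.id:"fake-id1"."""
    # Escape backslashes and quotes so the value stays inside its quotes.
    escaped_value = value.replace("\\", "\\\\").replace('"', '\\"')
    return f'{escape_field_name(field)}:"{escaped_value}"'


def all_of(*clauses):
    """Combine clauses so that every one of them must match."""
    return " AND ".join(clauses)


print(field_query("metadata.compound id", "TS14224012"))
print(all_of(field_query("source.type", "empower"),
             field_query("data.sample.id", "id1")))
```

Remember that field searches are case-sensitive, so the values passed in must match the stored values exactly.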

Plain Text Processing

Plain text entered into the TetraScience search bar is analyzed using Elasticsearch's Standard Tokenizer. Word boundaries are determined based on the Unicode Text Segmentation algorithm, as specified in Unicode Standard Annex #29.

This means that searches containing spaces, hyphens, '+', and some other common symbols are broken down into separate terms; underscores, however, do not split terms. For example, the following sentence:

The 2 QUICK Brown-Foxes jumped_over the lazy dog's bone.

is broken down into the following terms:

[ The, 2, QUICK, Brown, Foxes, jumped_over, the, lazy, dog's, bone ]

This may make exact-match searches unpredictable (for example, when trying to match an exact ID that includes hyphens). Consider searching for the following UUID:

576fd742-c1a6-4fb4-9ecb-398d53e4addb

This matches any data including:

"576fd742", "c1a6", "4fb4", "9ecb", "398d53e4addb"

When querying for an exact match for such a value, consider wrapping the search string in quotes like this:

"576fd742-c1a6-4fb4-9ecb-398d53e4addb"

This causes Elasticsearch to ignore word boundaries and produce a more appropriate result.

Keep in mind that this behavior applies to "free-text" searches only. Searches on specific fields are analyzed based on that particular field's type. For example, the following query uses the Keyword tokenizer, because this field is a keyword type:

source.type.executionId: 576fd742-c1a6-4fb4-9ecb-398d53e4addb

By default, the "Keyword" tokenizer does not follow the same word-boundary rules as the "Standard" tokenizer, so an exact-match query without quotation marks works as expected.
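The free-text term splitting described in this section can be approximated with a short regular expression. This is a rough sketch only, not Elasticsearch's implementation: the real Standard Tokenizer follows Unicode Standard Annex #29 and behaves differently in edge cases (for example, around periods and non-ASCII text). The function name `approximate_terms` is an assumption.

```python
import re

# Rough approximation: a term is a run of ASCII letters, digits, or underscores,
# optionally joined by apostrophes (so "dog's" stays whole).
# Hyphens, spaces, '+', and trailing punctuation all act as term boundaries.
TERM_RE = re.compile(r"[A-Za-z0-9_]+(?:'[A-Za-z0-9_]+)*")


def approximate_terms(text):
    """Split free text into terms, roughly as in the examples in this section."""
    return TERM_RE.findall(text)


print(approximate_terms("The 2 QUICK Brown-Foxes jumped_over the lazy dog's bone."))
print(approximate_terms("576fd742-c1a6-4fb4-9ecb-398d53e4addb"))
```

Running a candidate ID through a check like this before searching shows quickly whether it will be split into several terms and therefore needs quotes.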

Nested Types

The search bar doesn't reliably support queries on fields of the "Nested" type. Support for these queries is in the works, and will primarily be facilitated using the "filters" feature.

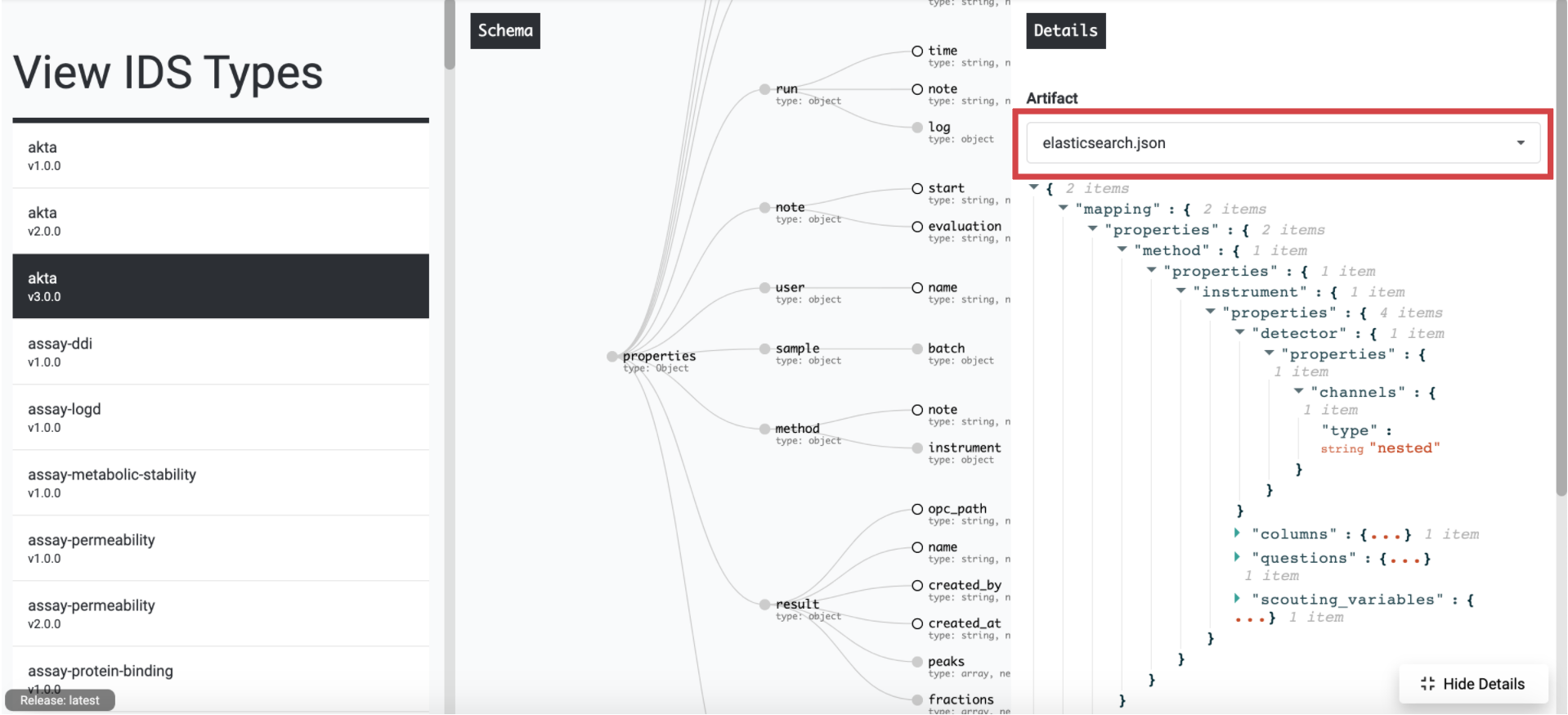

The best way to determine if a field is nested is through the IDS Schema Viewer http://platform.tetrascience.com/schemas.

After selecting a schema, select the "elasticsearch.json" artifact from the artifacts dropdown on the right. The JSON file will show which fields are mapped as a nested type.

Standard queries will not work for these fields. Additional details about the Nested datatype: https://www.elastic.co/guide/en/elasticsearch/reference/current/nested.html
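Scanning a mapping file by eye is error-prone for large schemas, so the check can be sketched in a few lines. This assumes the "elasticsearch.json" artifact uses the standard Elasticsearch mapping layout (a "properties" object whose entries may carry "type": "nested"); the actual artifact may wrap this structure differently. The function name and the sample mapping are hypothetical.

```python
def find_nested_fields(mapping, prefix=""):
    """Return the dotted paths of all fields mapped with type "nested"."""
    nested = []
    for name, spec in mapping.get("properties", {}).items():
        path = f"{prefix}{name}"
        if spec.get("type") == "nested":
            nested.append(path)
        # Object and nested fields can both contain further properties.
        nested.extend(find_nested_fields(spec, prefix=path + "."))
    return nested


# Hypothetical mapping fragment, for illustration only.
sample_mapping = {
    "properties": {
        "data": {
            "properties": {
                "sample": {"properties": {"id": {"type": "keyword"}}},
                "peaks": {
                    "type": "nested",
                    "properties": {"area": {"type": "double"}},
                },
            }
        }
    }
}

print(find_nested_fields(sample_mapping))  # ['data.peaks']
```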

Wildcard

The characters * and ? are used as wildcards: ? matches any single character, and * matches zero or more characters. They can be applied to both field keys and values.

| Search Text | Search every doc that... | Notes |
|---|---|---|
| qu?ck | contains, in any field, a word that starts with "qu", followed by any one character, followed by "ck" | case-insensitive. Matches "quick", "quack", etc. |
| science* | | case-insensitive |
| qu?ck OR science* | | case-insensitive |
| data.\*:(quick OR brown) | has a field whose key starts with "data." and whose value is exactly "quick" or "brown" | case-sensitive. Matches data like "data.id: quick" |
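To get a feel for which words a wildcard pattern would cover, the same ? and * semantics can be tried out locally. The sketch below uses `fnmatchcase` from Python's standard library as a stand-in; it is an analogy only, not how Elasticsearch evaluates wildcards, and `fnmatchcase` additionally treats square brackets as special, which the search bar does not. The function name `wildcard_match` is an assumption.

```python
from fnmatch import fnmatchcase


def wildcard_match(pattern, word):
    """Case-insensitive match where ? is one character and * is any run of characters."""
    return fnmatchcase(word.lower(), pattern.lower())


for word in ["quick", "quack", "quck", "QUICK"]:
    print(word, wildcard_match("qu?ck", word))

for word in ["science", "sciences", "conscience"]:
    print(word, wildcard_match("science*", word))
```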

Grouping

Parentheses () are used to group words or operations.

| Search Text | Notes |
|---|---|
| word1 AND (word2 OR word3) | case-insensitive |
| status:(active OR pending) title:(full text search) | case-sensitive |

Range

Ranges can be specified for date, numeric, or string fields. Inclusive ranges are specified with square brackets [min TO max] and exclusive ranges with curly brackets {min TO max}.

| Search Text | Matches |
|---|---|
| date:[2012-01-01 TO 2012-12-31] | All dates in 2012 |
| count:[1 TO 5] | Numbers 1..5 |
| tag:{alpha TO omega} | Tags between alpha and omega, excluding alpha and omega |
| count:[10 TO *] | Numbers from 10 upwards |
| date:{* TO 2012-01-01} | Dates before 2012 |
| count:[1 TO 5} | Numbers from 1 up to but not including 5 |
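The difference between square and curly brackets, including mixed forms such as [1 TO 5}, can be made concrete with a small numeric checker. This is a sketch for illustration, not how Elasticsearch evaluates ranges: it handles numeric bounds and the * wildcard only, and the names `parse_range` and `in_range` are assumptions.

```python
import re

# Opening bracket, lower bound, "TO", upper bound, closing bracket.
RANGE_RE = re.compile(r"^([\[{])\s*(\S+)\s+TO\s+(\S+)\s*([\]}])$")


def parse_range(expr):
    """Parse e.g. "[1 TO 5}" into (low, low_inclusive, high, high_inclusive)."""
    match = RANGE_RE.match(expr.strip())
    if match is None:
        raise ValueError(f"not a range expression: {expr!r}")
    open_bracket, low, high, close_bracket = match.groups()
    return (
        None if low == "*" else float(low),   # None means unbounded
        open_bracket == "[",                  # square bracket = inclusive
        None if high == "*" else float(high),
        close_bracket == "]",
    )


def in_range(value, expr):
    """Return True if a number falls inside the range expression."""
    low, low_inclusive, high, high_inclusive = parse_range(expr)
    if low is not None and (value < low or (value == low and not low_inclusive)):
        return False
    if high is not None and (value > high or (value == high and not high_inclusive)):
        return False
    return True


print(in_range(5, "[1 TO 5]"))   # True: both ends inclusive
print(in_range(5, "[1 TO 5}"))   # False: upper end exclusive
print(in_range(42, "[10 TO *]")) # True: no upper bound
```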

Reserved characters

If you need to use any of the characters that function as operators in the query itself (and not as operators), escape them with a leading backslash. For instance, to search for (1+1)=2, you would need to write your query as \(1\+1\)\=2.

The reserved characters are: + - = && || > < ! ( ) { } [ ] ^ " ~ * ? : \ /

Note: the escape characters are dropped in the webview, and should themselves also be escaped.

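Escaping by hand is tedious for longer strings, so the rule can be sketched as a small helper. This is a minimal illustration with a hypothetical function name. It escapes each reserved character individually, which also covers && and ||. Elasticsearch's documentation notes that < and > cannot be escaped at all, so this sketch removes them; whether dropping them is acceptable is an assumption about the caller's needs.

```python
# Reserved characters that take a leading backslash.
# "&&" and "||" are covered by escaping each "&" and "|" individually.
ESCAPABLE = set('+-=&|!(){}[]^"~*?:\\/')


def escape_query_text(text):
    """Escape reserved characters so the text is searched literally."""
    out = []
    for ch in text:
        if ch in "<>":
            continue  # cannot be escaped, so they are dropped (assumption)
        if ch in ESCAPABLE:
            out.append("\\")
        out.append(ch)
    return "".join(out)


print(escape_query_text("(1+1)=2"))  # \(1\+1\)\=2
```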
