TDP v4.3.2 Release Notes

Release date: 21 August 2025

TetraScience has released its next version of the Tetra Data Platform (TDP), version 4.3.2. This release introduces new options for optimizing compute costs and data latency for customers using the Tetra Data Lakehouse Architecture. It also includes a new ability to configure Tetra Data Pipelines to run at specific, recurring times, along with several helpful UI improvements and bug fixes.

Here are the details for what’s new in TDP v4.3.2.

New Functionality

New functionalities are features that weren’t previously available in the TDP.

GxP Impact Assessment

All new TDP functionalities go through a GxP impact assessment to determine validation needs for GxP installations.

New Functionality items marked with an asterisk (*) address usability, supportability, or infrastructure issues, and do not affect Intended Use for validation purposes, per this assessment.

Enhancements and Bug Fixes do not generally affect Intended Use for validation purposes.

Items marked as either beta release or early adopter program (EAP) are not validated for GxP by TetraScience. However, customers can use these prerelease features and components in production if they perform their own validation.

Data Access and Management

Select What Lakehouse Tables are Generated and When*

Customers using the Data Lakehouse Architecture can now better control compute costs while optimizing data latency for their use case by selecting what Lakehouse tables are generated, and at what frequency. The new Normalized IDS and Normalized IDS Frequency options are available in the ids-to-lakehouse protocol configuration. This change doesn’t impact customers' existing search functionality.

Lakehouse tables are still created by default when customers run ids-to-lakehouse pipelines. Normalized Lakehouse tables are now optional. These tables transform IDS Lakehouse tables from the new, nested Delta Table structure to a normalized structure that mirrors the legacy Amazon Athena table structure. Existing configurations are not impacted by default, but can be modified.

For more information, see Create an ids-to-lakehouse Pipeline.

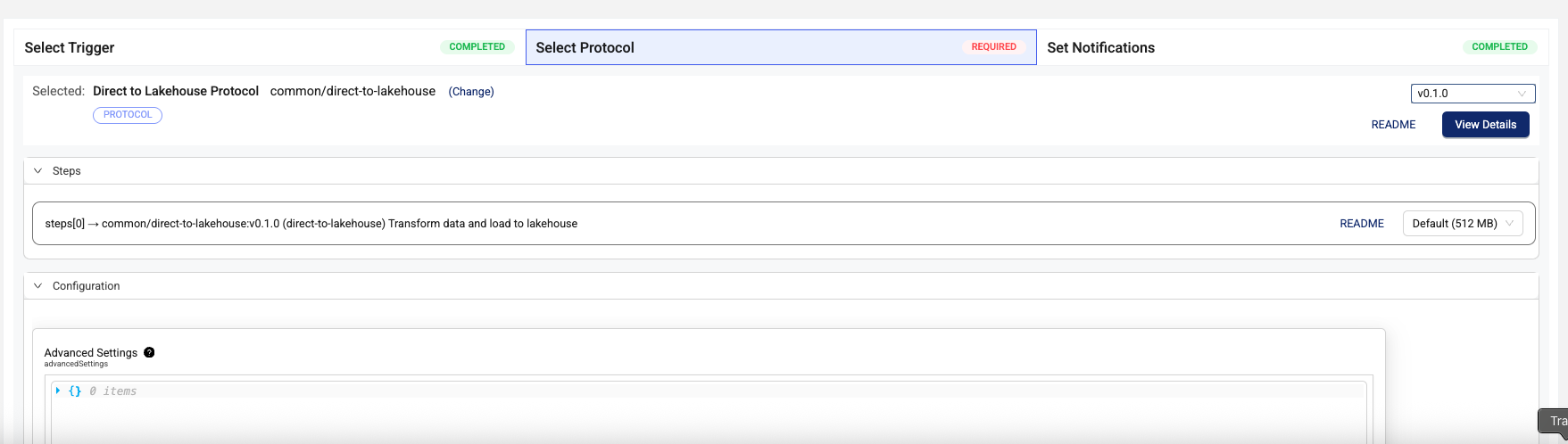

Ingest Reference Data that’s Not File Based Directly into the Data Lakehouse*

To help speed up time-to-insights for structured data that’s not file based (for example, time series data), customers can now ingest it directly into the Data Lakehouse by using the new direct-to-lakehouse protocol in a pipeline. Previously, this data needed to first be uploaded to the Tetra Data Lake and harmonized into an Intermediate Data Schema (IDS) file before it could be converted to Lakehouse tables. Now, the Lakehouse table defines the data schema.

For more information, see Convert Data to Lakehouse Tables.

NOTE: The direct-to-lakehouse protocol doesn't currently handle file deletion events. If an input file is deleted, the corresponding data in the output Lakehouse table isn’t deleted. This functionality is planned for TDP v4.4.0.

Enhancements

Last updated: 22 August 2025

Enhancements are modifications to existing functionality that improve performance or usability, but don't alter the function or intended use of the system.

Data Integration Enhancements

New Tetra File-Log Agent Default Label for Instrument Serial Numbers

A new instrument_serial_number default label was added to the Tetra File-Log Agent Path Configuration Page. The new label provides the ability for customers to specify an instrument serial number label for any new files acquired from the configured path.

For more information, see Path Configuration Options.

New Tetra OpenLab Agent Option and Source Type

NOTE: Added on 22 August 2025

Adding the infrastructure required to support creating Tetra OpenLab Agents in the TDP UI is now scheduled for the next TDP release.

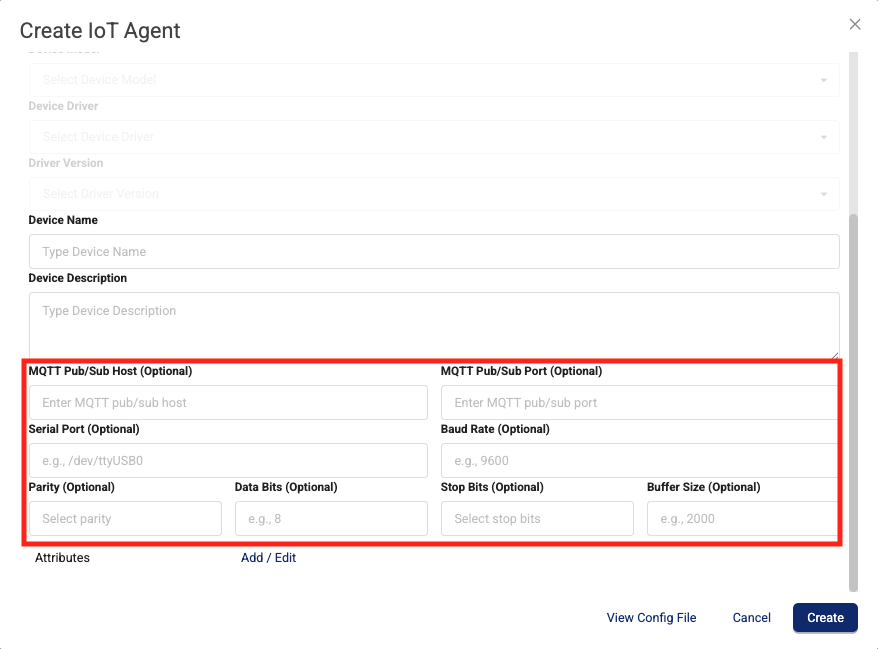

Improved Tetra IoT Agent Configuration

The following options for adjusting the XML configuration that the Tetra IoT Agent sends to its edge device (the Tetra IoT Agent Box) are now available in the TDP UI:

- Routing the IoT traffic through a proxy server

- Changing the serial communication parameters

Previously, updating these configurations required copying and hand-modifying the configuration file.

For more information, see Tetra IoT Agent Cloud Setup and Management.

Data Harmonization and Engineering Enhancements

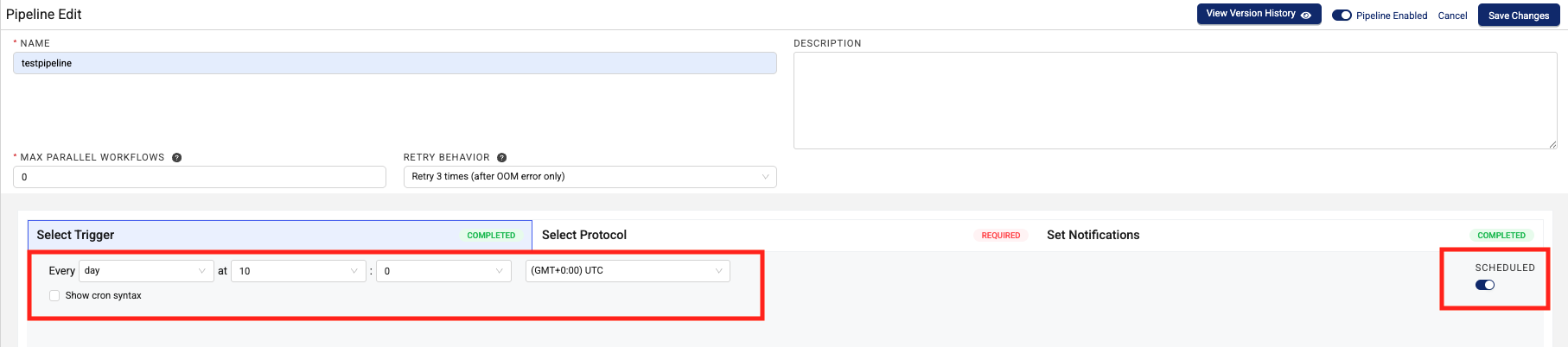

Scheduled Triggers for Protocols Not Triggered by Files

Pipelines that use protocols which aren’t triggered by file uploads (for example, python-exec) now support scheduled triggers. This trigger type allows Tetra Data Pipelines to run at specific, recurring times. Existing file-triggered protocols require a specific file to start processing and cannot run on a schedule by default. For a protocol to use a scheduled trigger, its task script must not depend on the context.input_file property in the Context API.

For more information, see Select Trigger Conditions in Set Up and Edit Pipelines.

NOTE: The Select Protocol section on the Pipeline Edit page displays all protocols if customers select the SCHEDULED trigger option. However, existing file-triggered protocols require a specific file to start processing and cannot run on a schedule by default.
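
To illustrate the constraint described above, the following Python sketch shows a task-script entry point that never reads context.input_file, so nothing in it depends on a triggering file. The main(input, context) signature, the StubContext class, the report_name setting, and the returned dictionary are assumptions made for this example only. They are not taken from the TDP documentation, so check the Context API reference for the real interface.

```python
from datetime import datetime, timezone


class StubContext:
    """Hypothetical stand-in for the pipeline context (illustration only)."""

    # A scheduled run has no triggering file, so this is left as None.
    input_file = None


def main(input: dict, context) -> dict:
    """Hypothetical entry point that is safe to run on a schedule.

    It relies only on the pipeline configuration passed in `input` and on
    the current time, and it never touches `context.input_file`.
    """
    run_started = datetime.now(timezone.utc).isoformat()
    report_name = input.get("report_name", "scheduled-report")
    return {"report_name": report_name, "run_started": run_started}


if __name__ == "__main__":
    print(main({"report_name": "nightly-summary"}, StubContext()))
```

A script written this way behaves the same whether or not a file is attached to the run, which is the property a scheduled trigger needs.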

Lower Latency Instant Starts for Node-Based Pipelines

To help support latency-sensitive lab data automation use cases, customers can now use the Instant Start pipeline execution mode when configuring Node-based Tetra Data Pipelines, such as the empower-raw-to-ids protocol. This enhancement applies to TDP v4.3.x and higher. Previously, the Instant Start option was available to Python-based pipelines only.

The Instant Start compute type offers ~1-second startup time after the initial deployment, regardless of how recently the pipeline was run. Customers can also select from a range of instance sizes in this new compute class to handle data at the scale their use case requires. There’s little to no additional cost impact.

For more information, see Memory and Compute Settings.

System Administration Enhancements

More Accurate Health Monitoring App Loading Screen Time Estimate

When installing or upgrading the Health Monitoring App, the loading screen now shows that the app may take up to 10 minutes to initialize. The previous estimate was five minutes. This new estimate more accurately reflects how long it takes to install or upgrade the latest app versions (v1.x and higher).

Infrastructure Updates

New VPC Endpoints for Customer-Hosted Deployments

For customer-hosted environments, if the private subnets where the TDP is deployed are restricted and don’t have outbound access to the internet, then the VPC now needs the following AWS Interface VPC endpoints enabled:

- (For activating the Data Lakehouse Architecture) com.amazonaws.<REGION>.kinesis-firehose: enables Amazon Data Firehose, which is used to deliver real-time streaming data.

- (For activating the Tetra Data and AI Workspace) com.amazonaws.<REGION>.elasticfilesystem: enables Amazon Elastic File System (Amazon EFS), which provides serverless, fully elastic file storage.

These new endpoints must be enabled to activate the associated services, along with the other required VPC endpoints.

For more information, see VPC Endpoints and AWS Services.
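
As a small convenience, the endpoint service names above can be generated for a given AWS Region. The sketch below only builds the name strings from the two suffixes listed in this section. It does not create any endpoints, and the function name and the example Region (us-east-1) are illustrative assumptions.

```python
# Service-name suffixes taken from the list above, keyed by the feature
# that each endpoint is needed for.
NEW_ENDPOINT_SUFFIXES = {
    "Data Lakehouse Architecture": "kinesis-firehose",
    "Tetra Data and AI Workspace": "elasticfilesystem",
}


def endpoint_service_names(region: str) -> dict:
    """Return the full interface endpoint service name for each feature."""
    return {
        feature: f"com.amazonaws.{region}.{suffix}"
        for feature, suffix in NEW_ENDPOINT_SUFFIXES.items()
    }


if __name__ == "__main__":
    # "us-east-1" is only an example Region.
    for feature, name in endpoint_service_names("us-east-1").items():
        print(f"{feature}: {name}")
```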

Bug Fixes

Data Integrations Bug Fixes

- When configuring Tetra File-Log Agent paths in the TDP user interface, the following bugs are now fixed:

  - When clearing a filter action, the Labels field's Value dropdown now displays a correct list of customers' available labels for new scan paths.

  - To prevent data duplication when Archive is enabled, the Archive path can no longer be configured as a subdirectory of the watched path. This change doesn’t affect Archive validation through the TetraScience API.
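
The Archive path rule above can be pictured as a simple path check. The following Python sketch is not the validation that the TDP UI performs. It is a minimal illustration, assuming Windows-style paths and a hypothetical helper name, of how to test whether one path is a subdirectory of another before entering it in the UI.

```python
from pathlib import PureWindowsPath


def archive_is_subdirectory(watched: str, archive: str) -> bool:
    """Return True if `archive` lies somewhere beneath `watched`.

    The comparison is purely lexical: it does not touch the file system,
    so it does not resolve symbolic links or ".." segments.
    """
    watched_path = PureWindowsPath(watched)
    archive_path = PureWindowsPath(archive)
    # `parents` lists every ancestor directory of the archive path.
    return watched_path in archive_path.parents


if __name__ == "__main__":
    print(archive_is_subdirectory(r"C:\data\instrument", r"C:\data\instrument\archive"))
    print(archive_is_subdirectory(r"C:\data\instrument", r"D:\archive"))
```

Comparing whole path components, rather than string prefixes, avoids treating a sibling folder such as C:\data\instrument-archive as if it were inside C:\data\instrument.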

Data Access and Management Bug Fixes

- Users that have Data User policy permissions can now access and use Tetra Data Apps.

- Saved Search names can no longer include invalid characters (double consecutive spaces and periods, <, >, :, ", /, \, |, ?, and *).

- Label values in the Create Bulk Label Change Job dialog now populate correctly.

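The Saved Search naming rule above can be approximated with a short check, which may be useful when generating names programmatically. This Python sketch is not the TDP's own validation. It assumes that "double consecutive spaces and periods" means a run of two or more spaces or a run of two or more periods, and the function name is hypothetical.

```python
import re

# Characters listed in the release note as invalid in Saved Search names.
INVALID_CHARS = set('<>:"/\\|?*')

# Assumption: a run of two or more spaces, or of two or more periods,
# is what the release note means by "double consecutive".
REPEATED_SPACE_OR_PERIOD = re.compile(r" {2,}|\.{2,}")


def is_valid_saved_search_name(name: str) -> bool:
    """Return True if `name` avoids the characters and runs listed above."""
    if any(ch in INVALID_CHARS for ch in name):
        return False
    return REPEATED_SPACE_OR_PERIOD.search(name) is None


if __name__ == "__main__":
    for candidate in ["HPLC runs 2025", "HPLC  runs", "runs..2025", "a/b"]:
        print(repr(candidate), is_valid_saved_search_name(candidate))
```
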
Deprecated Features

The following features have been deprecated for this release or are now on a deprecation path.

Beta Release and EAP Feature Deprecations

Embedded Data Apps Based on Windows Are Now Deprecated

Embedded Data Apps based on Windows are now deprecated, in favor of the more cost-effective Data Sync Utility. Customers using any of these EAP apps should transition to using the Data Sync Utility instead (currently in Beta).

The Data Sync Utility enables seamless transfer of primary scientific data from laboratory instruments and control software to and from desktop analytical applications.

The following Embedded Data Apps based on Windows are no longer supported:

With this change, Amazon AppStream 2.0 is no longer a required AWS service for customers activating the Tetra Data & AI Workspace.

For more information, see the Embedded Data Apps Based on Windows Deprecation Notice.

For more information about TDP deprecations, see Tetra Product Deprecation Notices.

Known and Possible Issues

The following are known and possible issues for the TDP v4.3.2 release.

Data Integrations Known Issues

- When configuring Tetra File-Log Agent paths in the TDP user interface, there are the following known issues:

  - The End Date field can’t be the same value as the Start Date field, even though the UI indicates that the End Date can be “on or after the Start Date”.

  - Path configuration options incorrectly appear to be enabled for Agents that have their queues disabled. Any configuration changes made through the TDP UI to an Agent that has its queue disabled won't be applied to the Agent.

  A fix for these issues is in development and testing and is scheduled for a future release.

- For new Tetra Agents set up through a Tetra Data Hub and a Generic Data Connector (GDC), Agent command queues aren’t enabled by default. However, the TDP UI still displays the command queue as enabled when it’s deactivated. As a workaround, customers can manually sync the Tetra Data Hub with the TDP. A fix for this issue is in development and testing and is scheduled for a future release.

Data Harmonization and Engineering Known Issues

- For customers using proxy servers to access the TDP, Tetraflow pipelines created in TDP v4.3.0 and earlier fail and return a CalledProcessError error. As a workaround, customers should disable any existing Tetraflow pipelines and then enable them again. A fix for this issue is in development and testing and is scheduled for a future release.

- The legacy ts-sdk put command to publish artifacts for Self-service pipelines (SSPs) returns a successful (0) status code, even if the command fails. As a workaround, customers should switch to using the latest TetraScience Command Line Interface (CLI) and run the ts-cli publish command to publish artifacts instead. A fix for this issue is in development and testing and is scheduled for a future ts-sdk release.

- IDS files larger than 2 GB are not indexed for search.

- The Chromeleon IDS (thermofisher_chromeleon) v6 Lakehouse tables aren't accessible through Snowflake Data Sharing. There are more subcolumns in the table’s method column than Snowflake allows, so Snowflake doesn’t index the table. A fix for this issue is in development and testing and is scheduled for a future release.

- Empty values in Amazon Athena SQL tables display as NULL values in Lakehouse tables.

- File statuses on the File Processing page can sometimes display differently than the statuses shown for the same files on the Pipelines page in the Bulk Processing Job Details dialog. For example, a file with an Awaiting Processing status in the Bulk Processing Job Details dialog can also show a Processing status on the File Processing page. This discrepancy occurs because each file can have different statuses for different backend services, which can then be surfaced in the TDP at different levels of granularity. A fix for this issue is in development and testing.

- Logs don’t appear for pipeline workflows that are configured with retry settings until the workflows complete.

- Files with more than 20 associated documents (high-lineage files) do not have their lineage indexed by default. To identify and re-lineage-index any high-lineage files, customers must contact their CSM to run a separate reconciliation job that overrides the default lineage indexing limit.

- OpenSearch index mapping conflicts can occur when a client or private namespace creates a backwards-incompatible data type change. For example: If doc.myField is a string in the common IDS and an object in the non-common IDS, then it will cause an index mapping conflict, because the common and non-common namespace documents are sharing an index. When these mapping conflicts occur, the files aren’t searchable through the TDP UI or API endpoints. As a workaround, customers can either create distinct, non-overlapping version numbers for their non-common IDSs or update the names of those IDSs.

- File reprocessing jobs can sometimes show fewer scanned items than expected when either a health check or out-of-memory (OOM) error occurs, but not indicate any errors in the UI. These errors are still logged in Amazon CloudWatch Logs. A fix for this issue is in development and testing.

- File reprocessing jobs can sometimes incorrectly show that a job finished with failures when the job actually retried those failures and then successfully reprocessed them. A fix for this issue is in development and testing.

- File edit and update operations are not supported on metadata and label names (keys) that include special characters. Metadata, tag, and label values can include special characters, but it’s recommended that customers use the approved special characters only. For more information, see Attributes.

- The File Details page sometimes displays an Unknown status for workflows that are either in a Pending or Running status. Output files that are generated by intermediate files within a task script sometimes show an Unknown status, too.

- Some historical protocols and IDSs are not compatible with the new ids-to-lakehouse data ingestion mechanism. The following protocols and IDSs are known to be incompatible with ids-to-lakehouse pipelines:

  - Protocol: fcs-raw-to-ids < v1.5.1 (IDS: flow-cytometer < v4.0.0)

  - Protocol: thermofisher-quantstudio-raw-to-ids < v5.0.0 (IDS: pcr-thermofisher-quantstudio < v5.0.0)

  - Protocol: biotek-gen5-raw-to-ids v1.2.0 (IDS: plate-reader-biotek-gen5 v1.0.1)

  - Protocol: nanotemper-monolith-raw-to-ids v1.1.0 (IDS: mst-nanotemper-monolith v1.0.0)

  - Protocol: ta-instruments-vti-raw-to-ids v2.0.0 (IDS: vapor-sorption-analyzer-tainstruments-vti-sa v2.0.0)

Data Access and Management Known Issues

Last updated: 6 January 2026

- The default Search page that appears after customers sign in to the TDP loads slowly (30+ seconds) in environments with a large set of distinct label values. A fix for this issue is in development and testing and is scheduled for a future release. (Added on 6 January 2026)

- Data Apps won’t launch in customer-hosted environments if the private subnets where the TDP is deployed are restricted and don’t have outbound access to the internet. As a workaround, customers should enable the following AWS Interface VPC endpoint in the VPC that the TDP uses:

  com.amazonaws.<AWS REGION>.elasticfilesystem

  A fix for this issue is in development and testing and is scheduled for a future release.

- Data Apps return CORS errors in all customer-hosted deployments. As a workaround, customers should create an AWS Systems Manager (SSM) parameter using the following pattern:

  /tetrascience/production/ECS/ts-service-data-apps/DOMAIN

  For DOMAIN, enter your TDP URL without the https:// (for example, platform.tetrascience.com). A fix for this issue is in development and testing and is scheduled for a future release.

- The Data Lakehouse Architecture doesn't support restricted, customer-hosted environments that connect to the TDP through a proxy and have no connection to the internet. A fix for this issue is in development and testing and is scheduled for a future release.

- On the File Details page, related files links don't work when accessed through the Show all X files within this workflow option. As a workaround, customers should select the Show All Related Files option instead. A fix for this issue is in development and testing and is scheduled for a future release.

- When customers upload a new file on the Search page by using the Upload File button, the page doesn’t automatically update to include the new file in the search results. As a workaround, customers should refresh the Search page in their web browser after selecting the Upload File button. A fix for this issue is in development and testing and is scheduled for a future TDP release.

- SQL queries that return empty strings when run on SQL tables can sometimes return Null values when run on Lakehouse tables. As a workaround, customers taking part in the Data Lakehouse Architecture EAP should update any SQL queries that specifically look for empty strings to instead look for both empty string and Null values.

- The Tetra FlowJo Data App doesn’t load consistently in all customer environments.

- Query DSL queries run on indices in an OpenSearch cluster can return partial search results if the query puts too much compute load on the system. This behavior occurs because the OpenSearch search.default_allow_partial_results setting is configured as true by default. To help avoid this issue, customers should use targeted search indexing best practices to reduce query compute loads. A way to improve visibility into when partial search results are returned is currently in development and testing and scheduled for a future TDP release.

- Text within the context of a RAW file that contains escape (\) or other special characters may not always index completely in OpenSearch. A fix for this issue is in development and testing, and is scheduled for an upcoming release.

- If a data access rule is configured as [label] exists > OR > [same label] does not exist, then no file with the defined label is accessible to the Access Group. A fix for this issue is in development and testing and scheduled for a future TDP release.

- File events aren’t created for temporary (TMP) files, so they’re not searchable. This behavior can also result in an Unknown state for Workflow and Pipeline views on the File Details page.

- When customers search for labels in the TDP UI’s search bar that include either @ symbols or some unicode character combinations, not all results are always returned.

- The File Details page displays a 404 error if a file version doesn't comply with the configured Data Access Rules for the user.
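
For the empty-string workaround in the list above, a query can test for an empty string and for Null in the same predicate. The sketch below uses Python's built-in sqlite3 module purely as a stand-in SQL engine so that it runs anywhere. The samples table and its columns are hypothetical, and the exact predicate syntax for a Lakehouse SQL endpoint should be confirmed against that engine's documentation.

```python
import sqlite3

# In-memory SQLite database used only as a stand-in SQL engine.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE samples (sample_id TEXT, operator TEXT)")
conn.executemany(
    "INSERT INTO samples VALUES (?, ?)",
    [("s1", ""), ("s2", None), ("s3", "alice")],
)

# Match rows whose operator is either an empty string or NULL, so the
# query behaves the same whichever representation the table uses.
rows = conn.execute(
    "SELECT sample_id FROM samples "
    "WHERE operator = '' OR operator IS NULL "
    "ORDER BY sample_id"
).fetchall()
missing_operator_ids = [row[0] for row in rows]
print(missing_operator_ids)
conn.close()
```

A predicate that checks only operator = '' would miss row s2 here, because a comparison with NULL never evaluates to true in SQL.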

TDP System Administration Known Issues

- Failed files in the Data Lakehouse can’t be reprocessed through the Health Monitoring page. Instead, customers should monitor and reprocess failed Lakehouse files by using the Data Reconciliation, File Processing, or Workflow Processing pages.

- The latest Connector versions incorrectly log the following errors in Amazon CloudWatch Logs:

  Error loading organization certificates. Initialization will continue, but untrusted SSL connections will fail.

  Client is not initialized - certificate array will be empty

  These organization certificate errors have no impact and shouldn’t be logged as errors. A fix for this issue is currently in development and testing, and is scheduled for an upcoming release. There is no workaround to prevent Connectors from producing these log messages. To filter out these errors when viewing logs, customers can apply the following CloudWatch Logs Insights query filters when querying log groups. (Issue #2818)

  CloudWatch Logs Insights Query Example for Filtering Organization Certificate Errors

  fields @timestamp, @message, @logStream, @log
  | filter message != 'Error loading organization certificates. Initialization will continue, but untrusted SSL connections will fail.'
  | filter message != 'Client is not initialized - certificate array will be empty'
  | sort @timestamp desc
  | limit 20

- If a reconciliation job, bulk edit of labels job, or bulk pipeline processing job is canceled, then the job’s ToDo, Failed, and Completed counts can sometimes display incorrectly.

Upgrade Considerations

During the upgrade, there might be a brief downtime when users won't be able to access the TDP user interface and APIs.

After the upgrade, the TetraScience team verifies that the platform infrastructure is working as expected through a combination of manual and automated tests. If any failures are detected, the issues are immediately addressed, or the release can be rolled back. Customers can also verify that TDP search functionality continues to return expected results, and that their workflows continue to run as expected.

For more information about the release schedule, including the GxP release schedule and timelines, see the Product Release Schedule.

For more details on upgrade timing, customers should contact their CSM.

Important Data Lakehouse Considerations

Keep in mind the following:

- For customers using proxy servers to access the TDP, Tetraflow pipelines created before customers upgrade to TDP v4.3.1 or higher fail and return a CalledProcessError error. As a workaround, customers should disable any existing Tetraflow pipelines after upgrading to TDP v4.3.1 or higher and then enable them again.

- Customers taking part in the EAP version of the Data Lakehouse will need to reprocess their existing Lakehouse data to use the new GA version of the Lakehouse tables. To backfill historical data into the updated Lakehouse tables, customers should create a Bulk Pipeline Process Job that uses an ids-to-lakehouse pipeline and is scoped in the Date Range field by the appropriate IDSs and historical time ranges.

Security

TetraScience continually monitors and tests the TDP codebase to identify potential security issues. Various security updates are applied to the following areas on an ongoing basis:

- Operating systems

- Third-party libraries

Quality Management

TetraScience is committed to creating quality software. Software is developed and tested following the ISO 9001-certified TetraScience Quality Management System. This system ensures the quality and reliability of TetraScience software while maintaining data integrity and confidentiality.

Other Release Notes

To view other TDP release notes, see Tetra Data Platform Release Notes.
